Hi, what syntax do I use to keep 1 full backup and add new files every 7 days?

Welcome to the forum @Tulips

Can you clarify what you’re after? Each backup is effectively a full backup, and new files are backed up.

Scheduled backups are on screen 4 called Schedule. How long to keep those is on screen 5 Options.

You have pretty good control over retention, but obviously you can’t retain a backup that wasn’t taken…

If this uses Duplicati.CommandLine.exe then scheduling and retention are more complicated to manage.

EDIT:

If the original thought was one backup per week, and only one kept, it can be done, but why so limited?

Keeping multiple versions is quite space-efficient in Duplicati because most of the data gets reused between versions.
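To illustrate why extra versions cost little space, here is a minimal sketch of block-level deduplication. It is a simplified illustration, not Duplicati's actual code: the function name `backup_version`, the in-memory `store` dictionary, and the tiny 4-byte blocksize are all assumptions made for the example.

```python
import hashlib

def backup_version(data: bytes, store: dict, blocksize: int = 4) -> list:
    """Split data into fixed-size blocks and store only blocks not seen before.

    Returns the list of block hashes that describes this version.
    (Hypothetical helper for illustration; the 4-byte blocksize is a toy value.)
    """
    hashes = []
    for i in range(0, len(data), blocksize):
        block = data[i:i + blocksize]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)  # unchanged blocks are not stored again
        hashes.append(digest)
    return hashes

store = {}
v1 = backup_version(b"AAAABBBBCCCC", store)
blocks_after_v1 = len(store)  # 3 unique blocks stored

# Second version: only the last block changed.
v2 = backup_version(b"AAAABBBBDDDD", store)
blocks_after_v2 = len(store)  # 4, so only one new block was added

print(blocks_after_v1, blocks_after_v2)
```

Both versions stay fully restorable from their hash lists, yet the second one added only the one block that changed.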

Agreeing with ts678, you could back up every day or hourly. If there are files you don’t want backed up that might be in the folders being backed up, you can exclude a designated folder and drag and drop those files into it.

Duplicati won’t re-backup the same files unless they change in some way because there’s no need to. It would be a complete waste to do so.

The biggest thing is to simply be sure Duplicati is running the backups.

Ok, thanks. I did a backup now, 13TB (took 2 day(s) and 20:38:58) so it had been bad to reupload it.

I did a backup now, 13TB (took 2 day(s) and 20:38:58) so it had been bad to reupload it.

Stay safe

Yep, thanks

That’s a big size (larger than a normal computer drive), and it implies a high read speed and upload speed (300 Mbit/sec).

Assuming the size is right, I’m also surprised the Duplicati database didn’t get slow under block loads.

Default blocksize is 100 KiB, so it’s tracking about 130 million blocks, which is maybe 100 times my advice, especially if you ever have to Recreate the database or run Direct restore from backup files someday.

Choosing sizes in Duplicati talks more about this. Unfortunately, blocksize change needs re-upload…
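The block-count arithmetic above can be checked with a quick sketch. It assumes 13 TB means decimal terabytes and ignores deduplication and compression; the 1 MiB and 10 MiB rows are illustrative values only, not settings recommended in this thread, and `estimated_blocks` is a hypothetical helper name.

```python
KIB = 1024
MIB = 1024 * KIB

def estimated_blocks(source_bytes: int, blocksize_bytes: int) -> int:
    """Rough block count: source size divided by blocksize, rounded up.

    Ignores deduplication and compression, so it is only an upper-end estimate.
    """
    return -(-source_bytes // blocksize_bytes)  # ceiling division

source = 13 * 10**12  # 13 TB, assuming decimal terabytes

for label, blocksize in [("100 KiB", 100 * KIB), ("1 MiB", MIB), ("10 MiB", 10 * MIB)]:
    millions = estimated_blocks(source, blocksize) / 1e6
    print(f"{label:>8}: about {millions:.1f} million blocks")
```

At the default 100 KiB this gives roughly 127 million blocks, which matches the "130 million" estimate; a larger blocksize shrinks the count proportionally.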

I have a 6.7T backup that runs three times a week and adds 8-30G per run. It’s taking about an hour on the high end.

That’s because it’s a small change. @Tulips wasn’t clear, but “it had been bad to reupload it” sounds like the initial backup. That comment came 4 hours after mine, so there wouldn’t have been a 68 hour backup. Time also seems long for doing backup of just the file changes, though it does depend on size and speed.

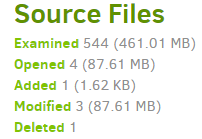

Maybe we’ll get some clarification. The job log shows a very visible high level summary of source change:

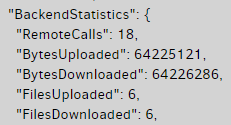

but you have to look in Complete log to see backend statistics that might better reflect any Internet delay:

13TB whew

Hopefully you used good Duplicati settings like ts678 said. It might pay to redo it if there’s a faster setting value.

You risk a lot. It would be a good idea to also have an alternate backup solution, which unfortunately would be another 13TB, as Duplicati might not let you restore if the db gets broken. It may be worth checking whether you can access the files without Duplicati in the picture, should the db break and repair fail.