I recently moved my Duplicati instance from my QNAP NAS into Docker on the same NAS.

The old Duplicati instance ran directly on the QNAP OS (version 2.0.8.3).

In Docker I installed 2.1.0.3, mounted the NAS directories into the container as read-only, moved the old databases into the container, restored my configurations and corrected the database paths.

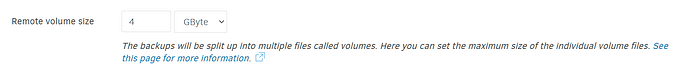

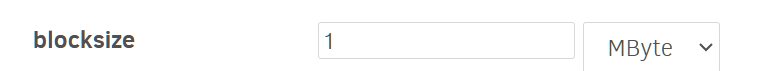

I have several backup configurations and all of them went well, except the really large ones (4 TB+). Those now fail every time with “Stream was too long”.

They worked before without any problems, and I ran one last backup just before migrating to Docker.

I already ran a database repair, which succeeded but did not fix the error.

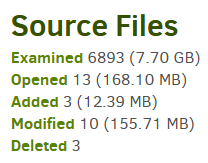

What I see while running the backup:

- Checking files is really slow. Even though the files are untouched (as mentioned, I only mounted the old directories into Docker), Duplicati seems to treat every file as unscanned. Setting the option “check-filetime-only” did not help.

- Duplicati tries to upload new archives (which do include some genuinely new files), but the uploads fail.
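One possibility worth ruling out is that the files' recorded paths no longer match the paths seen inside the container. The sketch below is a simplified, hypothetical model of path-keyed change detection, not Duplicati's actual code: it assumes each file's previous state is looked up by its full source path, and the prefixes `/share/Data` and `/source/Data` are made-up examples. In such a model an option like “check-filetime-only” can only narrow the comparison for paths that are found; a path that is not found is treated as new either way, and the old path is reported as deleted.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    size: int      # file size in bytes
    mtime: float   # last-modified timestamp


def classify(previous: dict[str, Entry], current: dict[str, Entry], filetime_only: bool):
    """Split a scan into unchanged, modified, added and deleted paths.

    Hypothetical model: the previous state of each file is looked up by its
    full source path. With filetime_only=True only the timestamp is compared.
    """
    unchanged, modified, added = [], [], []
    for path, entry in current.items():
        old = previous.get(path)
        if old is None:
            # Unknown path: nothing to compare against, so the file counts as new.
            added.append(path)
        elif old.mtime != entry.mtime or (not filetime_only and old.size != entry.size):
            modified.append(path)
        else:
            unchanged.append(path)
    # Paths recorded last time but not seen in this scan count as deleted.
    deleted = [path for path in previous if path not in current]
    return unchanged, modified, added, deleted


# Same files with identical sizes and timestamps, seen under a different (made-up) prefix.
old_scan = {"/share/Data/a.mkv": Entry(100, 1.0), "/share/Data/b.mkv": Entry(200, 2.0)}
new_scan = {"/source/Data/a.mkv": Entry(100, 1.0), "/source/Data/b.mkv": Entry(200, 2.0)}

for flag in (False, True):
    unchanged, modified, added, deleted = classify(old_scan, new_scan, filetime_only=flag)
    # both runs: unchanged=0 added=2 deleted=2
    print(f"filetime_only={flag}: unchanged={len(unchanged)} added={len(added)} deleted={len(deleted)}")
```

With the same keys on both sides the two files come back as unchanged, so in this model the option only matters once a path has been matched.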

I am backing up to Jottacloud with unlimited storage, so space shouldn't be a problem.
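Unlimited total storage does not rule out a per-file size limit somewhere in the upload path. “Stream was too long.” is the message of the `IOException` that .NET's `MemoryStream` throws when its length would exceed `Int32.MaxValue` bytes (about 2 GiB), and the log below reports each dblock upload as `3,999 GB`. Assuming the comma is the German decimal separator and the units are 1024-based (both assumptions, as is the helper name `parse_logged_size`), the quick check below compares that volume size with the limit. It only shows the sizes cannot fit in a single in-memory buffer; whether the upload to Jottacloud actually buffers the whole volume this way is not established here.

```python
# Largest length a .NET MemoryStream supports: Int32.MaxValue bytes (about 2 GiB).
MEMORYSTREAM_MAX_BYTES = 2**31 - 1

# Assumption: sizes in the log use 1024-based units.
UNIT_FACTORS = {"B": 1, "KB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4}


def parse_logged_size(text: str, decimal_comma: bool = True) -> int:
    """Convert a logged size such as '3,999 GB' into bytes (hypothetical helper).

    Assumption: with decimal_comma=True the comma is the decimal separator
    (German locale, as in this log) and a dot is a thousands separator.
    """
    number, unit = text.split()
    if decimal_comma:
        number = number.replace(".", "").replace(",", ".")
    else:
        number = number.replace(",", "")
    return int(float(number) * UNIT_FACTORS[unit])


volume_bytes = parse_logged_size("3,999 GB")
print(volume_bytes, volume_bytes > MEMORYSTREAM_MAX_BYTES)
```

Reading the comma as a thousands separator instead (`decimal_comma=False`) gives a far larger number, so under either interpretation the logged volume exceeds the 2 GiB limit.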

```json
{
  "DeletedFiles": 25934,
  "DeletedFolders": 1477,
  "ModifiedFiles": 0,
  "ExaminedFiles": 7164,
  "OpenedFiles": 7162,
  "AddedFiles": 7162,
  "SizeOfModifiedFiles": 0,
  "SizeOfAddedFiles": 2647466055772,
  "SizeOfExaminedFiles": 2648812252876,
  "SizeOfOpenedFiles": 2647466055772,
  "NotProcessedFiles": 0,
  "AddedFolders": 712,
  "TooLargeFiles": 0,
  "FilesWithError": 0,
  "ModifiedFolders": 0,
  "ModifiedSymlinks": 0,
  "AddedSymlinks": 20,
  "DeletedSymlinks": 934,
  "PartialBackup": false,
  "Dryrun": false,
  "MainOperation": "Backup",
  "CompactResults": null,
  "VacuumResults": null,
  "DeleteResults": null,
  "RepairResults": null,
  "TestResults": null,
  "ParsedResult": "Fatal",
  "Interrupted": false,
  "Version": "2.1.0.103 (2.1.0.103_canary_2024-12-21)",
  "EndTime": "2025-01-04T17:54:38.7432575Z",
  "BeginTime": "2025-01-04T08:29:23.1736362Z",
  "Duration": "09:25:15.5696213",
  "MessagesActualLength": 86,
  "WarningsActualLength": 0,
  "ErrorsActualLength": 2,
  "Messages": [
    "2025-01-04 09:29:23 +01 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: Die Operation Backup wurde gestartet",
    "2025-01-04 09:30:13 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()",
    "2025-01-04 09:30:15 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (4,182 KB)",
    "2025-01-04 13:53:09 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b2b948135458c45bdad7a515893a2997f.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:54:09 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Retrying: duplicati-b2b948135458c45bdad7a515893a2997f.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:54:20 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-b2b948135458c45bdad7a515893a2997f.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:54:20 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-bf0af55715310472ba96e43b994ce57b6.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:54:20 +01 - [Information-Duplicati.Library.Main.Operation.Backup.BackendUploader-RenameRemoteTargetFile]: Renaming \"duplicati-b2b948135458c45bdad7a515893a2997f.dblock.zip.aes\" to \"duplicati-bf0af55715310472ba96e43b994ce57b6.dblock.zip.aes\"",
    "2025-01-04 13:54:20 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-bf0af55715310472ba96e43b994ce57b6.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:55:48 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Retrying: duplicati-bf0af55715310472ba96e43b994ce57b6.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:55:59 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-bf0af55715310472ba96e43b994ce57b6.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:55:59 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-bd08ddd62d784409297ffb44e5dbad26a.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:55:59 +01 - [Information-Duplicati.Library.Main.Operation.Backup.BackendUploader-RenameRemoteTargetFile]: Renaming \"duplicati-bf0af55715310472ba96e43b994ce57b6.dblock.zip.aes\" to \"duplicati-bd08ddd62d784409297ffb44e5dbad26a.dblock.zip.aes\"",
    "2025-01-04 13:55:59 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-bd08ddd62d784409297ffb44e5dbad26a.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:56:55 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Retrying: duplicati-bd08ddd62d784409297ffb44e5dbad26a.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:57:06 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-bd08ddd62d784409297ffb44e5dbad26a.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:57:06 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Rename: duplicati-b042e2b308842401b8bc2f101f8f0afea.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:57:06 +01 - [Information-Duplicati.Library.Main.Operation.Backup.BackendUploader-RenameRemoteTargetFile]: Renaming \"duplicati-bd08ddd62d784409297ffb44e5dbad26a.dblock.zip.aes\" to \"duplicati-b042e2b308842401b8bc2f101f8f0afea.dblock.zip.aes\"",
    "2025-01-04 13:57:06 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Started: duplicati-b042e2b308842401b8bc2f101f8f0afea.dblock.zip.aes (3,999 GB)",
    "2025-01-04 13:58:10 +01 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Put - Retrying: duplicati-b042e2b308842401b8bc2f101f8f0afea.dblock.zip.aes (3,999 GB)"
  ],
  "Warnings": [],
  "Errors": [
    "2025-01-04 18:54:38 +01 - [Error-Duplicati.Library.Main.Operation.BackupHandler-FatalError]: Fatal error\nAggregateException: One or more errors occurred. (Stream was too long.) (Stream was too long.) (Stream was too long.)",
    "2025-01-04 18:54:38 +01 - [Error-Duplicati.Library.Main.Controller-FailedOperation]: Die Operation Backup ist mit folgenden Fehler fehlgeschlagen: One or more errors occurred. (Stream was too long.) (Stream was too long.) (Stream was too long.) (One or more errors occurred. (Stream was too long.) (Stream was too long.) (Stream was too long.)) (One or more errors occurred. (One or more errors occurred. (Stream was too long.) (Stream was too long.) (Stream was too long.)))\nAggregateException: One or more errors occurred. (Stream was too long.) (Stream was too long.) (Stream was too long.) (One or more errors occurred. (Stream was too long.) (Stream was too long.) (Stream was too long.)) (One or more errors occurred. (One or more errors occurred. (Stream was too long.) (Stream was too long.) (Stream was too long.)))"
  ],
  "TaskControl": {
    "ProgressToken": {
      "IsCancellationRequested": false,
      "CanBeCanceled": true,
      "WaitHandle": {
        "Handle": {
          "value": 2984
        },
        "SafeWaitHandle": {
          "IsInvalid": false,
          "IsClosed": false
        }
      }
    },
    "TransferToken": {
      "IsCancellationRequested": false,
      "CanBeCanceled": true,
      "WaitHandle": {
        "Handle": {
          "value": 5584
        },
        "SafeWaitHandle": {
          "IsInvalid": false,
          "IsClosed": false
        }
      }
    }
  },
  "BackendStatistics": {
    "RemoteCalls": 20,
    "BytesUploaded": 0,
    "BytesDownloaded": 0,
    "FilesUploaded": 0,
    "FilesDownloaded": 0,
    "FilesDeleted": 0,
    "FoldersCreated": 0,
    "RetryAttempts": 16,
    "UnknownFileSize": 0,
    "UnknownFileCount": 0,
    "KnownFileCount": 4282,
    "KnownFileSize": 8920750402322,
    "LastBackupDate": "2024-12-25T12:09:25+01:00",
    "BackupListCount": 12,
    "TotalQuotaSpace": 0,
    "FreeQuotaSpace": 0,
    "AssignedQuotaSpace": -1,
    "ReportedQuotaError": false,
    "ReportedQuotaWarning": false,
    "MainOperation": "Backup",
    "ParsedResult": "Success",
    "Interrupted": false,
    "Version": "2.1.0.103 (2.1.0.103_canary_2024-12-21)",
    "EndTime": "0001-01-01T00:00:00",
    "BeginTime": "2025-01-04T08:29:23.1736389Z",
    "Duration": "00:00:00",
    "MessagesActualLength": 0,
    "WarningsActualLength": 0,
    "ErrorsActualLength": 0,
    "Messages": null,
    "Warnings": null,
    "Errors": null,
    "TaskControl": {
      "ProgressToken": {
        "IsCancellationRequested": false,
        "CanBeCanceled": true,
        "WaitHandle": {
          "Handle": {
            "value": 2984
          },
          "SafeWaitHandle": {
            "IsInvalid": false,
            "IsClosed": false
          }
        }
      },
      "TransferToken": {
        "IsCancellationRequested": false,
        "CanBeCanceled": true,
        "WaitHandle": {
          "Handle": {
            "value": 5584
          },
          "SafeWaitHandle": {
            "IsInvalid": false,
            "IsClosed": false
          }
        }
      }
    }
  }
}
```
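To make the raw byte counts in the result easier to read, the sketch below parses a handful of fields copied verbatim from it and converts them to 1024-based units. It only restates the report: roughly 2.41 TiB was examined and nearly all of it was opened as added, nothing was reported as modified, and zero bytes were uploaded despite 16 retry attempts, against about 8.11 TiB already stored remotely. The helper names `human` and `summarize` are illustrative only.

```python
import json

# A few fields copied verbatim from the backup result above.
RESULT_EXCERPT = """{
  "DeletedFiles": 25934,
  "ModifiedFiles": 0,
  "AddedFiles": 7162,
  "SizeOfAddedFiles": 2647466055772,
  "SizeOfExaminedFiles": 2648812252876,
  "BackendStatistics": {"BytesUploaded": 0, "RetryAttempts": 16, "KnownFileSize": 8920750402322}
}"""


def human(n: float) -> str:
    """Format a byte count using 1024-based units, capped at TiB."""
    units = ["B", "KiB", "MiB", "GiB", "TiB"]
    i = 0
    while n >= 1024 and i < len(units) - 1:
        n /= 1024
        i += 1
    return f"{n:.2f} {units[i]}"


def summarize(result: dict) -> dict:
    """Pick out the counters relevant to the failed run."""
    stats = result["BackendStatistics"]
    return {
        "added_vs_deleted_files": (result["AddedFiles"], result["DeletedFiles"]),
        "modified_files": result["ModifiedFiles"],
        "added_size": human(result["SizeOfAddedFiles"]),
        "examined_size": human(result["SizeOfExaminedFiles"]),
        "remote_size": human(stats["KnownFileSize"]),
        "uploaded": human(stats["BytesUploaded"]),
        "retries": stats["RetryAttempts"],
    }


print(summarize(json.loads(RESULT_EXCERPT)))
```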