For context, I am running Duplicati in a Docker container on TrueNAS with /mnt:/mnt passed as a Docker volume. TrueNAS supports ZFS. Until now I have been running Duplicati on the ZFS datasets directly, which has the obvious problem that files can change during the backup. I've finally bitten the bullet and written some bash scripts that create a snapshot of the dataset, mount it to a different directory, and run the backup job (from the GUI). That worked great the first time. I ran the backup job again (without taking another snapshot) and that also worked great. Then I unmounted the old snapshot, deleted it, created a new snapshot, and mounted it in the same place as before. Now when I run the backup job, it seems to think that all of the files have changed and backs them all up again. AFAICT the files in the snapshot have not changed from one snapshot to the next (very few changes were made on the dataset between tests). Why would this happen? Is there any option I can use within the job or Duplicati to make this work?
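For anyone wanting to reproduce the rotation described above, here is a minimal Python sketch of it. The dataset `tank/data`, the snapshot names and the mountpoint `/mnt/backup-src` are hypothetical placeholders, and it assumes a Linux host (e.g. TrueNAS SCALE) where `mount -t zfs pool/dataset@snapshot <dir>` mounts a snapshot read-only. By default it only prints the commands (dry run).

```python
import shlex
import subprocess


def rotation_commands(dataset: str, old_snap: str, new_snap: str, mountpoint: str) -> list[list[str]]:
    """Build the snapshot rotation sequence (all names are hypothetical).

    The mountpoint stays the same across rotations, so the path Duplicati
    sees does not change; only the snapshot behind it does.
    """
    return [
        ["umount", mountpoint],                                       # detach the old snapshot
        ["zfs", "destroy", f"{dataset}@{old_snap}"],                  # delete the old snapshot
        ["zfs", "snapshot", f"{dataset}@{new_snap}"],                 # take a fresh snapshot
        ["mount", "-t", "zfs", f"{dataset}@{new_snap}", mountpoint],  # mount it at the same place
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step


if __name__ == "__main__":
    run(rotation_commands("tank/data", "backup-old", "backup-new", "/mnt/backup-src"))
```

Keeping the mountpoint fixed is what keeps the source path stable for Duplicati between rotations.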

When scanning the source files, Duplicati compares each file's last-modified timestamp against the one recorded in the previous backup. If it has not changed, Duplicati assumes the file has not changed and skips it. If the file is new or the timestamp has changed, Duplicati scans the file for blocks that have not been seen before.

The timestamp check is very fast compared to the file scanning check.
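To make the two checks concrete, here is a simplified Python model of the logic described above. It is not Duplicati's code: the `FileMeta` record, the 100 KiB block size and SHA-256 hashing are assumptions for illustration, and it only compares timestamps, whereas Duplicati's verbose log also reports size and metadata flags.

```python
import hashlib
from dataclasses import dataclass

BLOCK_SIZE = 100 * 1024  # hypothetical block size for this sketch


@dataclass(frozen=True)
class FileMeta:
    mtime_ns: int
    size: int


def needs_scan(path: str, current: FileMeta, previous: dict[str, FileMeta]) -> bool:
    """Fast check: only files that are new or whose timestamp changed get scanned."""
    old = previous.get(path)
    if old is None:
        return True  # new path: no earlier record to compare against
    return old.mtime_ns != current.mtime_ns


def new_blocks(data: bytes, known_hashes: set[str]) -> list[str]:
    """Slow check: hash every block and return the ones not seen before."""
    fresh = []
    for offset in range(0, len(data), BLOCK_SIZE):
        digest = hashlib.sha256(data[offset:offset + BLOCK_SIZE]).hexdigest()
        if digest not in known_hashes:
            known_hashes.add(digest)
            fresh.append(digest)
    return fresh
```

The point of `new_blocks` is that a file failing the fast check is read in full, but only blocks with unknown hashes would need uploading, so rescanning unchanged data costs reading time rather than upload.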

My guess is that recreating the snapshot either modifies the timestamps or changes the path (e.g., by inserting a snapshot ID into the path). Either of those makes the file fail the fast check and go on to the full scan.
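One way to tell the two causes apart is to save a listing of the snapshot mount before destroying the old snapshot (e.g. with `json.dump`) and compare it with a listing of the new one. A hypothetical Python helper follows; the function names are made up for this sketch. It uses relative paths, so a change in the absolute prefix (such as a snapshot ID) will not show up here: if the relative paths and timestamps all match but Duplicati still rescans everything, that points at the source path rather than the timestamps.

```python
import os
from pathlib import Path


def snapshot_listing(root: str) -> dict[str, tuple[int, int]]:
    """Map each file's path relative to root to (mtime in ns, size in bytes)."""
    listing = {}
    base = Path(root)
    for dirpath, _dirnames, filenames in os.walk(base):
        for name in filenames:
            full = Path(dirpath) / name
            st = full.lstat()  # lstat: do not follow symlinks
            listing[str(full.relative_to(base))] = (st.st_mtime_ns, st.st_size)
    return listing


def diff_listings(old: dict, new: dict) -> dict[str, list[str]]:
    """Report files whose timestamp differs, plus files present on only one side."""
    common = old.keys() & new.keys()
    return {
        "timestamp_changed": sorted(p for p in common if old[p][0] != new[p][0]),
        "only_in_old": sorted(old.keys() - new.keys()),
        "only_in_new": sorted(new.keys() - old.keys()),
    }
```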

If the timestamps are changed there is not much you can do.

If the paths are changed, you could perhaps create a symlink to the folder below the snapshot ID, so the path stays stable as far as Duplicati is concerned.
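If the job does end up pointing at a per-snapshot directory (for example ZFS's `.zfs/snapshot/<name>`), a symlink at a fixed location can be repointed after each new snapshot. A minimal Python sketch, with a hypothetical helper name and paths: it creates the new link under a temporary name and renames it over the old one, so the stable path is never missing. I believe Duplicati's `symlink-policy` advanced option controls whether symlinks are followed or stored as links, so check how it treats a symlinked source folder before relying on this.

```python
import os


def point_stable_link(link_path: str, target_dir: str) -> None:
    """Repoint link_path at target_dir (hypothetical helper).

    A temporary symlink is created next to the final name and then renamed
    over it, so the stable path never disappears mid-swap.
    """
    tmp = link_path + ".tmp"
    if os.path.lexists(tmp):
        os.remove(tmp)  # clean up a leftover from an interrupted run
    os.symlink(target_dir, tmp)
    os.replace(tmp, link_path)  # rename over the existing symlink; atomic on POSIX
```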

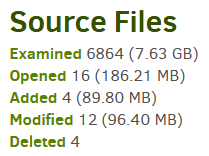

What does the job log's Source summary show (for example, as in the screenshot above)?

On the job log screen, does the Complete log show a substantial upload? For example, from my sample job:

`"BytesUploaded": 129552502,`

You can compare the second backup to the first. The worst I'd expect is a small upload for the limited changes you mention; however, there could be a lot of reading, i.e. a high Opened count in the stats.
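If you save the Complete log JSON from both runs, a small Python script can pull the same counter out of each for a side-by-side comparison. This is a sketch: it searches the whole JSON tree for the key because I am not assuming where `BytesUploaded` is nested, and the function names are hypothetical.

```python
import json


def find_key(node, key):
    """Return the first value stored under `key` anywhere in parsed JSON."""
    if isinstance(node, dict):
        if key in node:
            return node[key]
        children = node.values()
    elif isinstance(node, list):
        children = node
    else:
        return None
    for child in children:
        found = find_key(child, key)
        if found is not None:
            return found
    return None


def compare_key(first_log: str, second_log: str, key: str = "BytesUploaded"):
    """Return (first_value, second_value) for `key` from two Complete log JSON strings."""
    return find_key(json.loads(first_log), key), find_key(json.loads(second_log), key)
```

Other counters can be compared the same way by passing their key name exactly as it appears in your log.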

To see why a particular file was selected for closer examination, you can view the verbose log, e.g.:

`2024-08-01 12:32:12 -04 - [Verbose-Duplicati.Library.Main.Operation.Backup.FilePreFilterProcess.FileEntry-CheckFileForChanges]: Checking file for changes C:\PortableApps\Notepad++Portable\App\Notepad++64\backup\webpages.txt@2024-07-26_195932, new: False, timestamp changed: True, size changed: True, metadatachanged: True, 8/1/2024 1:00:14 AM vs 7/29/2024 9:18:16 PM`
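With many files, it helps to pull the flags out of these lines programmatically. A Python sketch whose regular expression is fitted to the single sample line above; the wording may differ between Duplicati versions, so treat the pattern as an assumption.

```python
import re

# Pattern fitted to one sample verbose line; other Duplicati versions may word it differently.
CHECK_RE = re.compile(
    r"CheckFileForChanges\]: Checking file for changes (?P<path>.+?), "
    r"new: (?P<new>True|False), "
    r"timestamp changed: (?P<timestamp>True|False), "
    r"size changed: (?P<size>True|False), "
    r"metadatachanged: (?P<metadata>True|False)"
)


def parse_check_line(line: str):
    """Return (path, flags) for a CheckFileForChanges line, or None for other lines."""
    m = CHECK_RE.search(line)
    if m is None:
        return None
    flags = {k: m.group(k) == "True" for k in ("new", "timestamp", "size", "metadata")}
    return m.group("path"), flags
```

For the sample line this yields the path ending in `webpages.txt@2024-07-26_195932`, with `new` False and the timestamp, size and metadata flags all True.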

You can sneak a peek with About → Show log → Live → Verbose during the backup, or write the log to a file.

The advanced options `log-file=<path>` and `log-file-log-level=verbose` will do that, but the log will get large if you have lots of files.
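Once the log is written to a file, a streaming tally avoids loading a large log into memory. A Python sketch (hypothetical function name) that counts the `CheckFileForChanges` lines and how many reported each flag as True, using plain substring matches on the wording seen in the sample verbose line:

```python
from collections import Counter


def tally_change_reasons(log_path: str) -> Counter:
    """Count CheckFileForChanges lines in a verbose log file by which flags are True."""
    counts = Counter()
    with open(log_path, encoding="utf-8", errors="replace") as fh:
        for line in fh:  # read line by line so large logs are not loaded at once
            if "CheckFileForChanges" not in line:
                continue
            counts["checked"] += 1
            for label in ("new: True", "timestamp changed: True",
                          "size changed: True", "metadatachanged: True"):
                if label in line:
                    counts[label] += 1
    return counts
```

If most checked files report `timestamp changed: True`, that would suggest the snapshot rotation is changing timestamps; if most report `new: True`, the path is more likely the culprit.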

Thank you both for your replies. I ran the backup again last night and it took 5 hours (instead of 2 days), so that was a great improvement, but not what I was expecting. I turned on verbose logging and ran it again this morning. It now takes 8 minutes! I have no idea why the second run took 5 hours (since it is unlikely that anything changed), but I'm very happy with the current result. Sorry for crying wolf. I guess I should have just let the first backup run, but I was scared that it would take another 2 days to upload 500 GB.