Docker commands work with the following compose file, but I still have two issues:

- Portainer shows a lot of errors in the log. Apart from those errors, my scripts seem to run before and after the backup as expected (at least I couldn't find any issues).

- The before and after scripts also run when I try to restore. Is there any way to disable them for restores and use them only for backups? They stop everything, including NGINX and AdGuard Home (DNS), so I lose my connection to Duplicati as well.
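
A minimal sketch of how a pre-backup script could skip restores, assuming Duplicati exports the environment variable `DUPLICATI__OPERATIONNAME` to scripts started via `--run-script-before`/`--run-script-after` and sets it to `Backup` for backup runs (this is how Duplicati's example run-script describes it; check it against your version). The container names `nginx` and `adguardhome` and the `should_run` helper are hypothetical placeholders, not taken from the actual scripts.

```shell
#!/bin/sh
# Sketch of a pre-backup script (e.g. pre_daily_backup.sh) that only acts on backups.
# Assumption: Duplicati sets DUPLICATI__OPERATIONNAME for scripts started via
# --run-script-before/--run-script-after, with the value "Backup" for backup runs.

# Hypothetical container names; replace with the names used on your host.
CONTAINERS_TO_STOP="nginx adguardhome"

# Succeeds only when Duplicati reports a backup operation.
should_run() {
  [ "${DUPLICATI__OPERATIONNAME:-}" = "Backup" ]
}

main() {
  if ! should_run; then
    # Restore (or any other operation): leave NGINX and AdGuard Home running.
    echo "Operation '${DUPLICATI__OPERATIONNAME:-unknown}' is not a backup; skipping."
    return 0
  fi
  # Assumes the docker CLI reaches the host daemon via the mounted /var/run/docker.sock.
  # $CONTAINERS_TO_STOP is left unquoted on purpose so each name becomes its own argument.
  docker stop $CONTAINERS_TO_STOP
}

main "$@"
```

The same `should_run` check could wrap the `docker start` call in post_daily_backup.sh, so that a restore neither stops nor restarts the containers.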

```yaml
services:
  duplicati:
    image: lscr.io/linuxserver/duplicati:latest
    container_name: duplicati
    environment:
      - DOCKER_MODS=linuxserver/mods:universal-docker-in-docker
      - PUID=0
      - PGID=0
      - TZ=Etc/UTC
      - SETTINGS_ENCRYPTION_KEY=SparkyDuplicati123
      #- CLI_ARGS= #optional
    privileged: true
    volumes:
      - /home/sparky/SparkyApps/duplicati/config:/config
      - /mnt/crucial/duplicati/tmp:/tmp
      - /mnt/crucial/backups:/backups
      - /mnt/:/source
      - /home/sparky/SparkyApps/:/SparkyApps
      - /var/run/docker.sock:/var/run/docker.sock # Add this line to mount Docker socket
    ports:
      - 8200:8200
    restart: unless-stopped

# Backup commands for Daily
# --run-script-before=/SparkyApps/scripts/duplicati_scripts/pre_daily_backup.sh
# --run-script-after=/SparkyApps/scripts/duplicati_scripts/post_daily_backup.sh
```

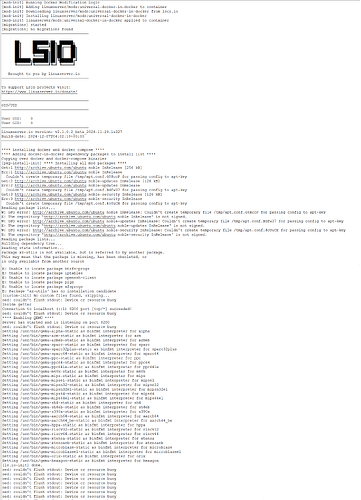

Errors from the Portainer log:

```
is only available from another source

E: Unable to locate package btrfs-progs
E: Unable to locate package iptables
E: Unable to locate package openssh-client
E: Unable to locate package pigz
E: Unable to locate package xfsprogs
E: Package 'xz-utils' has no installation candidate
[custom-init] No custom files found, skipping...
sed: couldn't flush stdout: Device or resource busy
Inside getter
sed: couldn't flush stdout: Device or resource busy
**** Enabling QEMU ****
Connection to localhost (::1) 8200 port [tcp/*] succeeded!
Server has started and is listening on port 8200
sed: couldn't flush stdout: Device or resource busy
Setting /usr/bin/qemu-alpha-static as binfmt interpreter for alpha
Setting /usr/bin/qemu-arm-static as binfmt interpreter for arm
Setting /usr/bin/qemu-armeb-static as binfmt interpreter for armeb
Setting /usr/bin/qemu-sparc-static as binfmt interpreter for sparc
Setting /usr/bin/qemu-sparc32plus-static as binfmt interpreter for sparc32plus
Setting /usr/bin/qemu-sparc64-static as binfmt interpreter for sparc64
Setting /usr/bin/qemu-ppc-static as binfmt interpreter for ppc
Setting /usr/bin/qemu-ppc64-static as binfmt interpreter for ppc64
Setting /usr/bin/qemu-ppc64le-static as binfmt interpreter for ppc64le
Setting /usr/bin/qemu-m68k-static as binfmt interpreter for m68k
Setting /usr/bin/qemu-mips-static as binfmt interpreter for mips
Setting /usr/bin/qemu-mipsel-static as binfmt interpreter for mipsel
Setting /usr/bin/qemu-mipsn32-static as binfmt interpreter for mipsn32
Setting /usr/bin/qemu-mipsn32el-static as binfmt interpreter for mipsn32el
Setting /usr/bin/qemu-mips64-static as binfmt interpreter for mips64
Setting /usr/bin/qemu-mips64el-static as binfmt interpreter for mips64el
Setting /usr/bin/qemu-sh4-static as binfmt interpreter for sh4
Setting /usr/bin/qemu-sh4eb-static as binfmt interpreter for sh4eb
Setting /usr/bin/qemu-s390x-static as binfmt interpreter for s390x
Setting /usr/bin/qemu-aarch64-static as binfmt interpreter for aarch
```