It has now gone through Completing Backup, then to Uploading Files, and shows it last successfully ran just now, but also error’d out with:

“Errors affected 1 file(s)” and the following showing up:

DeletedFiles: 0

DeletedFolders: 0

ModifiedFiles: 0

ExaminedFiles: 209612

OpenedFiles: 33624

AddedFiles: 33564

SizeOfModifiedFiles: 0

SizeOfAddedFiles: 65742581349

SizeOfExaminedFiles: 307157689882

SizeOfOpenedFiles: 65999910890

NotProcessedFiles: 0

AddedFolders: 8500

TooLargeFiles: 0

FilesWithError: 1

ModifiedFolders: 0

ModifiedSymlinks: 0

AddedSymlinks: 0

DeletedSymlinks: 0

PartialBackup: False

Dryrun: False

MainOperation: Backup

CompactResults: null

DeleteResults:

DeletedSets: []

Dryrun: False

MainOperation: Delete

CompactResults: null

ParsedResult: Success

EndTime: 6/20/2018 3:31:20 PM (1529523080)

BeginTime: 6/20/2018 3:29:50 PM (1529522990)

Duration: 00:01:29.1112650

BackendStatistics:

RemoteCalls: 13

BytesUploaded: 264896990

BytesDownloaded: 151737767

FilesUploaded: 6

FilesDownloaded: 3

FilesDeleted: 2

FoldersCreated: 0

RetryAttempts: 0

UnknownFileSize: 0

UnknownFileCount: 0

KnownFileCount: 4470

KnownFileSize: 299973245278

LastBackupDate: 6/20/2018 1:02:36 PM (1529514156)

BackupListCount: 2

TotalQuotaSpace: 0

FreeQuotaSpace: 0

AssignedQuotaSpace: -1

ReportedQuotaError: False

ReportedQuotaWarning: False

ParsedResult: Success

RepairResults: null

TestResults:

MainOperation: Test

Verifications: [

Key: duplicati-20180601T025026Z.dlist.zip.aes

Value: [],

Key: duplicati-if9d02157e9f949c5a6f6da0e6fc070c5.dindex.zip.aes

Value: [],

Key: duplicati-b366a99eafbdb475fa8075cce731f8550.dblock.zip.aes

Value: []

]

ParsedResult: Success

EndTime: 6/20/2018 3:33:19 PM (1529523199)

BeginTime: 6/20/2018 3:31:24 PM (1529523084)

Duration: 00:01:54.3361770

ParsedResult: Error

EndTime: 6/20/2018 3:33:19 PM (1529523199)

BeginTime: 6/20/2018 1:02:36 PM (1529514156)

Duration: 02:30:42.8146500

Messages: [

scheduling missing file for deletion, currently listed as Uploading: duplicati-20180615T231848Z.dlist.zip.aes,

promoting uploaded complete file from Uploading to Uploaded: duplicati-b4e96f9607cd143edb865c90c067e12a0.dblock.zip.aes,

removing incomplete remote file listed as Uploading: duplicati-ie3a35d419be946388ed273f03af986c7.dindex.zip.aes,

removing incomplete remote file listed as Uploading: duplicati-bcdc47fda6d1549f2943bf0e58e02e2ec.dblock.zip.aes,

scheduling missing file for deletion, currently listed as Uploading: duplicati-i096555c1f1304f3cb2486e5669142d91.dindex.zip.aes,

...

]

Warnings: [

Expected there to be a temporary fileset for synthetic filelist (3, duplicati-i58f716de3b97452ca0eab64aecfae66c.dindex.zip.aes), but none was found?,

Failed to process path: /Volumes/MediaHD1/IncomingPhotos/Norway2016.photoslibrary/Masters/2016/07/22/20160722-123248/IMG_1834.MOV => Abort due to constraint violation

UNIQUE constraint failed: BlocklistHash.BlocksetID, BlocklistHash.Index

]

Errors: []

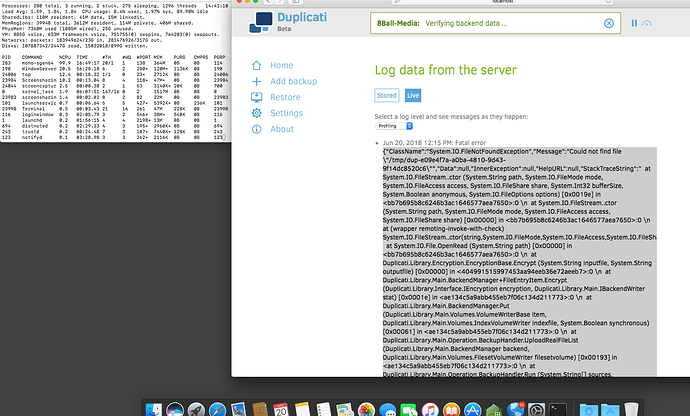

Here’s the live logs from Profiling setting during the “Completing Backup” and on time frame mentioned above:

Jun 20, 2018 3:33 PM: Running Backup took 02:30:42.873

Jun 20, 2018 3:33 PM: ExecuteNonQuery: DELETE FROM "RemoteOperation" WHERE "Timestamp" < ? took 00:00:00.065

Jun 20, 2018 3:33 PM: Starting - ExecuteNonQuery: DELETE FROM "RemoteOperation" WHERE "Timestamp" < ?

Jun 20, 2018 3:33 PM: ExecuteNonQuery: DELETE FROM "LogData" WHERE "Timestamp" < ? took 00:00:00.000

Jun 20, 2018 3:33 PM: Starting - ExecuteNonQuery: DELETE FROM "LogData" WHERE "Timestamp" < ?

Jun 20, 2018 3:33 PM: ExecuteNonQuery: UPDATE "RemoteVolume" SET "VerificationCount" = MAX(1, CASE WHEN "VerificationCount" <= 0 THEN (SELECT MAX("VerificationCount") FROM "RemoteVolume") ELSE "VerificationCount" + 1 END) WHERE "Name" = ? took 00:00:00.005

Jun 20, 2018 3:33 PM: Starting - ExecuteNonQuery: UPDATE "RemoteVolume" SET "VerificationCount" = MAX(1, CASE WHEN "VerificationCount" <= 0 THEN (SELECT MAX("VerificationCount") FROM "RemoteVolume") ELSE "VerificationCount" + 1 END) WHERE "Name" = ?

Jun 20, 2018 3:33 PM: RemoteOperationGet took 00:01:39.980

Jun 20, 2018 3:33 PM: Backend event: Get - Completed: duplicati-b366a99eafbdb475fa8075cce731f8550.dblock.zip.aes (127.97 MB)

Jun 20, 2018 3:33 PM: Downloaded 127.97 MB in 00:01:39.9518450, 1.28 MB/s

Jun 20, 2018 3:31 PM: Backend event: Get - Started: duplicati-b366a99eafbdb475fa8075cce731f8550.dblock.zip.aes (127.97 MB)

Jun 20, 2018 3:31 PM: Starting - RemoteOperationGet

Jun 20, 2018 3:31 PM: ExecuteNonQuery: UPDATE "RemoteVolume" SET "VerificationCount" = MAX(1, CASE WHEN "VerificationCount" <= 0 THEN (SELECT MAX("VerificationCount") FROM "RemoteVolume") ELSE "VerificationCount" + 1 END) WHERE "Name" = ? took 00:00:00.004

Jun 20, 2018 3:31 PM: Starting - ExecuteNonQuery: UPDATE "RemoteVolume" SET "VerificationCount" = MAX(1, CASE WHEN "VerificationCount" <= 0 THEN (SELECT MAX("VerificationCount") FROM "RemoteVolume") ELSE "VerificationCount" + 1 END) WHERE "Name" = ?

Jun 20, 2018 3:31 PM: RemoteOperationGet took 00:00:00.311

Jun 20, 2018 3:31 PM: Backend event: Get - Completed: duplicati-if9d02157e9f949c5a6f6da0e6fc070c5.dindex.zip.aes (93.25 KB)

Jun 20, 2018 3:31 PM: Downloaded 93.25 KB in 00:00:00.3104710, 300.34 KB/s

Jun 20, 2018 3:31 PM: Backend event: Get - Started: duplicati-if9d02157e9f949c5a6f6da0e6fc070c5.dindex.zip.aes (93.25 KB)

Jun 20, 2018 3:31 PM: Starting - RemoteOperationGet

Jun 20, 2018 3:31 PM: ExecuteNonQuery: UPDATE "RemoteVolume" SET "VerificationCount" = MAX(1, CASE WHEN "VerificationCount" <= 0 THEN (SELECT MAX("VerificationCount") FROM "RemoteVolume") ELSE "VerificationCount" + 1 END) WHERE "Name" = ? took 00:00:00.003

Jun 20, 2018 3:31 PM: Starting - ExecuteNonQuery: UPDATE "RemoteVolume" SET "VerificationCount" = MAX(1, CASE WHEN "VerificationCount" <= 0 THEN (SELECT MAX("VerificationCount") FROM "RemoteVolume") ELSE "VerificationCount" + 1 END) WHERE "Name" = ?

Jun 20, 2018 3:31 PM: RemoteOperationGet took 00:00:13.990

Jun 20, 2018 3:31 PM: Backend event: Get - Completed: duplicati-20180601T025026Z.dlist.zip.aes (16.64 MB)

Jun 20, 2018 3:31 PM: Downloaded 16.64 MB in 00:00:13.9647530, 1.19 MB/s

Jun 20, 2018 3:31 PM: Backend event: Get - Started: duplicati-20180601T025026Z.dlist.zip.aes (16.64 MB)

Jun 20, 2018 3:31 PM: Starting - RemoteOperationGet

Jun 20, 2018 3:31 PM: ExecuteReader: SELECT "ID", "Name", "Size", "Hash", "VerificationCount" FROM "Remotevolume" WHERE "Type" = ? AND "State" IN (?, ?) took 00:00:00.000

Jun 20, 2018 3:31 PM: Starting - ExecuteReader: SELECT "ID", "Name", "Size", "Hash", "VerificationCount" FROM "Remotevolume" WHERE "Type" = ? AND "State" IN (?, ?)

Jun 20, 2018 3:31 PM: ExecuteReader: SELECT "ID", "Name", "Size", "Hash", "VerificationCount" FROM "Remotevolume" WHERE "Type" = ? AND "State" IN (?, ?) took 00:00:00.000

Jun 20, 2018 3:31 PM: Starting - ExecuteReader: SELECT "ID", "Name", "Size", "Hash", "VerificationCount" FROM "Remotevolume" WHERE "Type" = ? AND "State" IN (?, ?)

Jun 20, 2018 3:31 PM: ExecuteReader: SELECT "A"."VolumeID", "A"."Name", "A"."Size", "A"."Hash", "A"."VerificationCount" FROM (SELECT "ID" AS "VolumeID", "Name", "Size", "Hash", "VerificationCount" FROM "Remotevolume" WHERE "State" IN (?, ?)) A, "Fileset" WHERE "A"."VolumeID" = "Fileset"."VolumeID" ORDER BY "Fileset"."Timestamp" took 00:00:00.000

Jun 20, 2018 3:31 PM: Starting - ExecuteReader: SELECT "A"."VolumeID", "A"."Name", "A"."Size", "A"."Hash", "A"."VerificationCount" FROM (SELECT "ID" AS "VolumeID", "Name", "Size", "Hash", "VerificationCount" FROM "Remotevolume" WHERE "State" IN (?, ?)) A, "Fileset" WHERE "A"."VolumeID" = "Fileset"."VolumeID" ORDER BY "Fileset"."Timestamp"

Jun 20, 2018 3:31 PM: ExecuteScalarInt64: SELECT MAX("VerificationCount") FROM "RemoteVolume" took 00:00:00.001

Jun 20, 2018 3:31 PM: Starting - ExecuteScalarInt64: SELECT MAX("VerificationCount") FROM "RemoteVolume"

Jun 20, 2018 3:31 PM: ExecuteReader: SELECT "ID", "Name", "Size", "Hash", "VerificationCount" FROM "Remotevolume" WHERE ("State" = ? OR "State" = ?) AND ("Hash" = "" OR "Hash" IS NULL OR "Size" <= 0) took 00:00:00.002

Jun 20, 2018 3:31 PM: Starting - ExecuteReader: SELECT "ID", "Name", "Size", "Hash", "VerificationCount" FROM "Remotevolume" WHERE ("State" = ? OR "State" = ?) AND ("Hash" = "" OR "Hash" IS NULL OR "Size" <= 0)

Jun 20, 2018 3:31 PM: ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC took 00:00:00.000

Jun 20, 2018 3:31 PM: Starting - ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC

Jun 20, 2018 3:31 PM: RemoteOperationTerminate took 00:00:00.000

Jun 20, 2018 3:31 PM: Starting - RemoteOperationTerminate

Jun 20, 2018 3:31 PM: AfterBackupVerify took 00:00:04.570

Jun 20, 2018 3:31 PM: ExecuteReader: SELECT DISTINCT "Name", "State" FROM "Remotevolume" WHERE "Name" IN (SELECT "Name" FROM "Remotevolume" WHERE "State" IN ("Deleted", "Deleting")) AND NOT "State" IN ("Deleted", "Deleting") took 00:00:00.003

Jun 20, 2018 3:31 PM: Starting - ExecuteReader: SELECT DISTINCT "Name", "State" FROM "Remotevolume" WHERE "Name" IN (SELECT "Name" FROM "Remotevolume" WHERE "State" IN ("Deleted", "Deleting")) AND NOT "State" IN ("Deleted", "Deleting")

Jun 20, 2018 3:31 PM: RemoteOperationList took 00:00:04.382

Jun 20, 2018 3:31 PM: Backend event: List - Completed: (4.37 KB)

Jun 20, 2018 3:31 PM: Backend event: List - Started: ()

Jun 20, 2018 3:31 PM: Starting - RemoteOperationList

Jun 20, 2018 3:31 PM: Starting - AfterBackupVerify

Jun 20, 2018 3:31 PM: CommitFinalizingBackup took 00:00:00.001

Jun 20, 2018 3:31 PM: Starting - CommitFinalizingBackup

Jun 20, 2018 3:31 PM: Async backend wait took 00:00:00.000

Jun 20, 2018 3:31 PM: RemoteOperationTerminate took 00:00:00.000

Jun 20, 2018 3:31 PM: Starting - RemoteOperationTerminate

Jun 20, 2018 3:31 PM: Starting - Async backend wait

Jun 20, 2018 3:31 PM: No remote filesets were deleted

Jun 20, 2018 3:31 PM: RemoteOperationNothing took 00:00:00.000

Jun 20, 2018 3:31 PM: Starting - RemoteOperationNothing

Jun 20, 2018 3:31 PM: ExecuteReader: SELECT "Name", "Size" FROM "RemoteVolume" WHERE "Type" = ? AND "State" = ? took 00:00:00.003

Jun 20, 2018 3:31 PM: Starting - ExecuteReader: SELECT "Name", "Size" FROM "RemoteVolume" WHERE "Type" = ? AND "State" = ?

Jun 20, 2018 3:31 PM: ExecuteNonQuery: UPDATE "RemoteVolume" SET "State" = ? WHERE "Type" = ? AND "State" IN (?, ?) AND "ID" NOT IN (SELECT "VolumeID" FROM "Fileset") took 00:00:00.025

Jun 20, 2018 3:31 PM: Starting - ExecuteNonQuery: UPDATE "RemoteVolume" SET "State" = ? WHERE "Type" = ? AND "State" IN (?, ?) AND "ID" NOT IN (SELECT "VolumeID" FROM "Fileset")

Jun 20, 2018 3:31 PM: ExecuteNonQuery: DELETE FROM "Block" WHERE "ID" NOT IN (SELECT DISTINCT "BlockID" FROM "BlocksetEntry" UNION SELECT DISTINCT "ID" FROM "Block", "BlocklistHash" WHERE "Block"."Hash" = "BlocklistHash"."Hash") took 00:00:40.508

Jun 20, 2018 3:30 PM: Starting - ExecuteNonQuery: DELETE FROM "Block" WHERE "ID" NOT IN (SELECT DISTINCT "BlockID" FROM "BlocksetEntry" UNION SELECT DISTINCT "ID" FROM "Block", "BlocklistHash" WHERE "Block"."Hash" = "BlocklistHash"."Hash")

Jun 20, 2018 3:30 PM: ExecuteNonQuery: INSERT INTO "DeletedBlock" ("Hash", "Size", "VolumeID") SELECT "Hash", "Size", "VolumeID" FROM "Block" WHERE "ID" NOT IN (SELECT DISTINCT "BlockID" AS "BlockID" FROM "BlocksetEntry" UNION SELECT DISTINCT "ID" FROM "Block", "BlocklistHash" WHERE "Block"."Hash" = "BlocklistHash"."Hash") took 00:00:26.775

Jun 20, 2018 3:30 PM: Starting - ExecuteNonQuery: INSERT INTO "DeletedBlock" ("Hash", "Size", "VolumeID") SELECT "Hash", "Size", "VolumeID" FROM "Block" WHERE "ID" NOT IN (SELECT DISTINCT "BlockID" AS "BlockID" FROM "BlocksetEntry" UNION SELECT DISTINCT "ID" FROM "Block", "BlocklistHash" WHERE "Block"."Hash" = "BlocklistHash"."Hash")

Jun 20, 2018 3:30 PM: ExecuteNonQuery: DELETE FROM "BlocklistHash" WHERE "BlocksetID" NOT IN (SELECT DISTINCT "ID" FROM "Blockset") took 00:00:00.723

Jun 20, 2018 3:29 PM: Starting - ExecuteNonQuery: DELETE FROM "BlocklistHash" WHERE "BlocksetID" NOT IN (SELECT DISTINCT "ID" FROM "Blockset")

Jun 20, 2018 3:29 PM: ExecuteNonQuery: DELETE FROM "BlocksetEntry" WHERE "BlocksetID" NOT IN (SELECT DISTINCT "ID" FROM "Blockset") took 00:00:04.913

Jun 20, 2018 3:29 PM: Starting - ExecuteNonQuery: DELETE FROM "BlocksetEntry" WHERE "BlocksetID" NOT IN (SELECT DISTINCT "ID" FROM "Blockset")

Jun 20, 2018 3:29 PM: ExecuteNonQuery: DELETE FROM "Blockset" WHERE "ID" NOT IN (SELECT DISTINCT "BlocksetID" FROM "File" UNION SELECT DISTINCT "BlocksetID" FROM "Metadataset") took 00:00:01.685

Jun 20, 2018 3:29 PM: Starting - ExecuteNonQuery: DELETE FROM "Blockset" WHERE "ID" NOT IN (SELECT DISTINCT "BlocksetID" FROM "File" UNION SELECT DISTINCT "BlocksetID" FROM "Metadataset")

Jun 20, 2018 3:29 PM: ExecuteNonQuery: DELETE FROM "Metadataset" WHERE "ID" NOT IN (SELECT DISTINCT "MetadataID" FROM "File") took 00:00:00.810

Jun 20, 2018 3:29 PM: Starting - ExecuteNonQuery: DELETE FROM "Metadataset" WHERE "ID" NOT IN (SELECT DISTINCT "MetadataID" FROM "File")

Jun 20, 2018 3:29 PM: ExecuteNonQuery: DELETE FROM "File" WHERE "ID" NOT IN (SELECT DISTINCT "FileID" FROM "FilesetEntry") took 00:00:00.907

Jun 20, 2018 3:29 PM: Starting - ExecuteNonQuery: DELETE FROM "File" WHERE "ID" NOT IN (SELECT DISTINCT "FileID" FROM "FilesetEntry")

Jun 20, 2018 3:29 PM: ExecuteNonQuery: DELETE FROM "FilesetEntry" WHERE "FilesetID" NOT IN (SELECT DISTINCT "ID" FROM "Fileset") took 00:00:00.160

Jun 20, 2018 3:29 PM: Starting - ExecuteNonQuery: DELETE FROM "FilesetEntry" WHERE "FilesetID" NOT IN (SELECT DISTINCT "ID" FROM "Fileset")

Jun 20, 2018 3:29 PM: ExecuteNonQuery: DELETE FROM "Fileset" WHERE "Timestamp" IN () took 00:00:00.000

Jun 20, 2018 3:29 PM: Starting - ExecuteNonQuery: DELETE FROM "Fileset" WHERE "Timestamp" IN ()

Jun 20, 2018 3:29 PM: [Retention Policy]: All backups to delete:

Jun 20, 2018 3:29 PM: [Retention Policy]: Backups outside of all time frames and thus getting deleted:

Jun 20, 2018 3:29 PM: [Retention Policy]: Backups in this time frame:

Jun 20, 2018 3:29 PM: [Retention Policy]: Next time frame and interval pair: 365.00:00:00 / 31.00:00:00

Jun 20, 2018 3:29 PM: [Retention Policy]: Keeping backup: 5/31/2018 10:50:26 PM

Jun 20, 2018 3:29 PM: [Retention Policy]: Backups in this time frame: 5/31/2018 10:50:26 PM

Jun 20, 2018 3:29 PM: [Retention Policy]: Next time frame and interval pair: 28.00:00:00 / 7.00:00:00

Jun 20, 2018 3:29 PM: [Retention Policy]: Backups in this time frame:

Jun 20, 2018 3:29 PM: [Retention Policy]: Next time frame and interval pair: 7.00:00:00 / 1.00:00:00

Jun 20, 2018 3:29 PM: [Retention Policy]: Backups to consider: 5/31/2018 10:50:26 PM

Jun 20, 2018 3:29 PM: [Retention Policy]: Time frames and intervals pairs: 7.00:00:00 / 1.00:00:00, 28.00:00:00 / 7.00:00:00, 365.00:00:00 / 31.00:00:00

Jun 20, 2018 3:29 PM: [Retention Policy]: Start checking if backups can be removed

Jun 20, 2018 3:29 PM: ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC took 00:00:00.000

Jun 20, 2018 3:29 PM: Starting - ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC

Jun 20, 2018 3:29 PM: ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC took 00:00:00.000

Jun 20, 2018 3:29 PM: Starting - ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC

Jun 20, 2018 3:29 PM: ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC took 00:00:00.000

Jun 20, 2018 3:29 PM: Starting - ExecuteReader: SELECT "ID", "Timestamp" FROM "Fileset" ORDER BY "Timestamp" DESC

Jun 20, 2018 3:29 PM: Async backend wait took 00:12:31.442

Jun 20, 2018 3:29 PM: RemoteOperationNothing took 00:00:00.000

Jun 20, 2018 3:29 PM: Starting - RemoteOperationNothing

Jun 20, 2018 3:29 PM: RemoteOperationPut took 00:01:12.696

Jun 20, 2018 3:29 PM: Backend event: Put - Completed: duplicati-20180620T170236Z.dlist.zip.aes (20.17 MB)

Jun 20, 2018 3:29 PM: Uploaded 20.17 MB in 00:01:12.6941250, 284.08 KB/s

Jun 20, 2018 3:28 PM: Backend event: Put - Started: duplicati-20180620T170236Z.dlist.zip.aes (20.17 MB)

Jun 20, 2018 3:28 PM: Starting - RemoteOperationPut

Jun 20, 2018 3:28 PM: RemoteOperationPut took 00:00:01.469

Jun 20, 2018 3:28 PM: Backend event: Put - Completed: duplicati-i17147ed5352041d0935ca73f55a9e2ba.dindex.zip.aes (380.87 KB)

Jun 20, 2018 3:28 PM: Uploaded 380.87 KB in 00:00:01.4246400, 267.35 KB/s

Jun 20, 2018 3:28 PM: Backend event: Put - Started: duplicati-i17147ed5352041d0935ca73f55a9e2ba.dindex.zip.aes (380.87 KB)

Jun 20, 2018 3:28 PM: Starting - RemoteOperationPut

Jun 20, 2018 3:28 PM: RemoteOperationPut took 00:06:16.422

Jun 20, 2018 3:28 PM: Backend event: Put - Completed: duplicati-b60756f2e98244f7aa8981e58926e6827.dblock.zip.aes (104.01 MB)

Jun 20, 2018 3:28 PM: Uploaded 104.01 MB in 00:06:16.4116530, 282.95 KB/s

Jun 20, 2018 3:22 PM: Backend event: Put - Started: duplicati-b60756f2e98244f7aa8981e58926e6827.dblock.zip.aes (104.01 MB)

Jun 20, 2018 3:22 PM: Starting - RemoteOperationPut

Jun 20, 2018 3:22 PM: RemoteOperationPut took 00:00:00.586

Jun 20, 2018 3:22 PM: Backend event: Put - Completed: duplicati-i4bb367e8de9e4726b4470dd8e5c2e437.dindex.zip.aes (129.87 KB)

Jun 20, 2018 3:22 PM: Uploaded 129.87 KB in 00:00:00.5401890, 240.42 KB/s

Jun 20, 2018 3:22 PM: Backend event: Put - Started: duplicati-i4bb367e8de9e4726b4470dd8e5c2e437.dindex.zip.aes (129.87 KB)

Jun 20, 2018 3:22 PM: Starting - RemoteOperationPut

Jun 20, 2018 3:22 PM: RemoteOperationPut took 00:07:41.732

Jun 20, 2018 3:22 PM: Backend event: Put - Completed: duplicati-bd73448f1083f42b6b44cfcb4ebcf624b.dblock.zip.aes (127.91 MB)

Jun 20, 2018 3:22 PM: Uploaded 127.91 MB in 00:07:41.7029590, 283.68 KB/s

Jun 20, 2018 3:17 PM: Starting - Async backend wait

Jun 20, 2018 3:17 PM: Uploading a new fileset took 00:00:20.830

Jun 20, 2018 3:17 PM: CommitUpdateRemoteVolume took 00:00:00.001

Jun 20, 2018 3:17 PM: Starting - CommitUpdateRemoteVolume

Jun 20, 2018 3:17 PM: CommitUpdateRemoteVolume took 00:00:00.170

Jun 20, 2018 3:17 PM: Starting - CommitUpdateRemoteVolume

Jun 20, 2018 3:16 PM: Starting - Uploading a new fileset

Jun 20, 2018 3:16 PM: VerifyConsistency took 00:00:21.076

Jun 20, 2018 3:16 PM: ExecuteScalarInt64: SELECT COUNT(*) FROM "File" WHERE "BlocksetID" != ? AND "BlocksetID" != ? AND NOT "BlocksetID" IN (SELECT "BlocksetID" FROM "BlocksetEntry") took 00:00:00.561

Jun 20, 2018 3:16 PM: Starting - ExecuteScalarInt64: SELECT COUNT(*) FROM "File" WHERE "BlocksetID" != ? AND "BlocksetID" != ? AND NOT "BlocksetID" IN (SELECT "BlocksetID" FROM "BlocksetEntry")

Jun 20, 2018 3:16 PM: ExecuteScalarInt64: SELECT COUNT(*) FROM (SELECT * FROM (SELECT "N"."BlocksetID", (("N"."BlockCount" + 3200 - 1) / 3200) AS "BlocklistHashCountExpected", CASE WHEN "G"."BlocklistHashCount" IS NULL THEN 0 ELSE "G"."BlocklistHashCount" END AS "BlocklistHashCountActual" FROM (SELECT "BlocksetID", COUNT(*) AS "BlockCount" FROM "BlocksetEntry" GROUP BY "BlocksetID") "N" LEFT OUTER JOIN (SELECT "BlocksetID", COUNT(*) AS "BlocklistHashCount" FROM "BlocklistHash" GROUP BY "BlocksetID") "G" ON "N"."BlocksetID" = "G"."BlocksetID" WHERE "N"."BlockCount" > 1) WHERE "BlocklistHashCountExpected" != "BlocklistHashCountActual") took 00:00:01.937