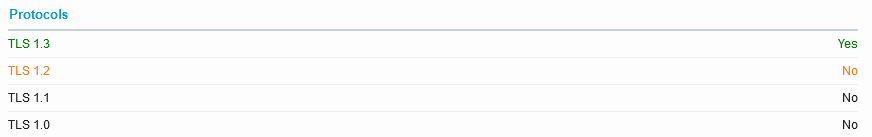

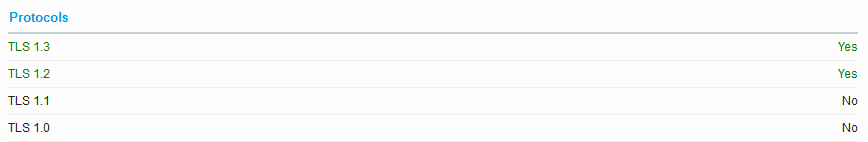

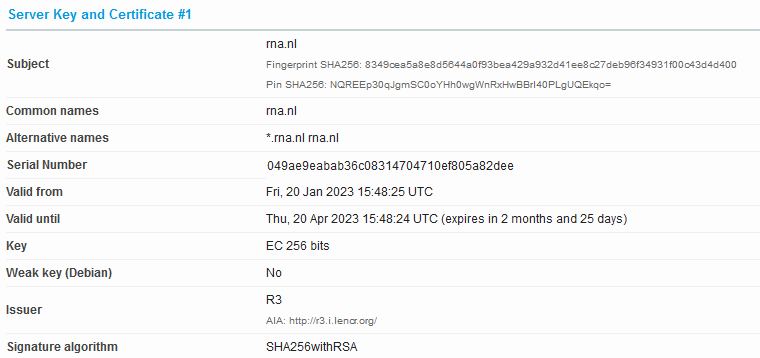

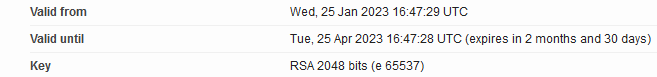

The change to allow TLS 1.2 seemed to coincide with the move away from ECC.

I have some SSL Labs scans still open in browser tabs, so here’s Jan 20 vs. 25:

I wonder if that’s what actually fixed this. At least on Linux, Mono handles ECC fine.

I don’t have Mono on macOS, but if anyone is willing to test, that would add data.

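One needs to be a little careful, because the algorithm shows up in more than one place in a certificate, as seen in X.509 (Wikipedia):

- Signature Algorithm ID (how the issuer signed this certificate)
- Public Key Algorithm (the type of key this certificate itself carries)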

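These sites run the csharp query fine. Here are the algorithms that openssl saw. Note that openssl prints each certificate’s signature algorithm twice: once inside the signed certificate data and once next to the signature itself.

The test was based on “OpenSSL: Get all certificates from a website in plain text”.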

```
$ (OLDIFS=$IFS; IFS=':' certificates=$(openssl s_client -connect www.cloudflare.com:443 -showcerts -tlsextdebug 2>&1 </dev/null | sed -n '/-----BEGIN/,/-----END/ {/-----BEGIN/ s/^/:/; p}'); for certificate in ${certificates#:}; do echo $certificate | openssl x509 -noout -text; done; IFS=$OLDIFS) | egrep 'Certificate:|Subject:|Algorithm'
Certificate:
Signature Algorithm: ecdsa-with-SHA256
Subject: C = US, ST = California, L = San Francisco, O = "Cloudflare, Inc.", CN = www.cloudflare.com
Public Key Algorithm: id-ecPublicKey
Signature Algorithm: ecdsa-with-SHA256
Certificate:
Signature Algorithm: sha256WithRSAEncryption
Subject: C = US, O = "Cloudflare, Inc.", CN = Cloudflare Inc ECC CA-3
Public Key Algorithm: id-ecPublicKey
Signature Algorithm: sha256WithRSAEncryption
```
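The one-liner packs the whole test into a single line. A more readable sketch of the same idea, split into two bash functions, might look like the following. The names `split_pem_chain` and `show_chain_algorithms` are made up for illustration, it swaps the `IFS` trick for `awk` plus a temporary directory, and it assumes bash, awk and an OpenSSL 1.1.1+ `openssl` on the PATH.

```bash
# Hypothetical helpers: a readable sketch, not the original one-liner.

# split_pem_chain DIR: read `openssl s_client -showcerts` output on stdin,
# write each PEM certificate to DIR/cert1.pem, DIR/cert2.pem, ...
# and print how many certificates were found.
split_pem_chain() {
  awk -v dir="$1" '
    /-----BEGIN CERTIFICATE-----/ { n++; out = dir "/cert" n ".pem"; inside = 1 }
    inside                        { print > out }
    /-----END CERTIFICATE-----/   { inside = 0; close(out) }
    END                           { print n + 0 }
  '
}

# show_chain_algorithms HOST [PORT]: fetch the chain the server sends and
# print the subject and the algorithm lines of every certificate in it.
show_chain_algorithms() {
  local host=$1 port=${2:-443} dir count i
  dir=$(mktemp -d) || return 1
  count=$(openssl s_client -connect "$host:$port" -servername "$host" \
            -showcerts </dev/null 2>/dev/null | split_pem_chain "$dir")
  for ((i = 1; i <= count; i++)); do
    echo "Certificate $i:"
    openssl x509 -in "$dir/cert$i.pem" -noout -text | grep -E 'Subject:|Algorithm'
  done
  rm -rf "$dir"
}
```

Usage would be e.g. `show_chain_algorithms readthedocs.org`. Writing each certificate to its own file avoids relying on word splitting of an unquoted variable, at the cost of a temporary directory.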

```
$ (OLDIFS=$IFS; IFS=':' certificates=$(openssl s_client -connect readthedocs.org:443 -showcerts -tlsextdebug 2>&1 </dev/null | sed -n '/-----BEGIN/,/-----END/ {/-----BEGIN/ s/^/:/; p}'); for certificate in ${certificates#:}; do echo $certificate | openssl x509 -noout -text; done; IFS=$OLDIFS) | egrep 'Certificate:|Subject:|Algorithm'
Certificate:
Signature Algorithm: ecdsa-with-SHA384
Subject: CN = *.readthedocs.org
Public Key Algorithm: id-ecPublicKey
Signature Algorithm: ecdsa-with-SHA384
Certificate:
Signature Algorithm: ecdsa-with-SHA384
Subject: C = US, O = Let's Encrypt, CN = E1
Public Key Algorithm: id-ecPublicKey
Signature Algorithm: ecdsa-with-SHA384
Certificate:
Signature Algorithm: sha256WithRSAEncryption
Subject: C = US, O = Internet Security Research Group, CN = ISRG Root X2
Public Key Algorithm: id-ecPublicKey
Signature Algorithm: sha256WithRSAEncryption
Certificate:
Signature Algorithm: sha256WithRSAEncryption
Subject: C = US, O = Internet Security Research Group, CN = ISRG Root X1
Public Key Algorithm: rsaEncryption
Signature Algorithm: sha256WithRSAEncryption
```

“Certbot issues ECDSA key signed with RSA” documents how Let’s Encrypt signs an ECDSA key with its RSA intermediate R3, e.g.:

```
$ (OLDIFS=$IFS; IFS=':' certificates=$(openssl s_client -connect letsencrypt.org:443 -showcerts -tlsextdebug 2>&1 </dev/null | sed -n '/-----BEGIN/,/-----END/ {/-----BEGIN/ s/^/:/; p}'); for certificate in ${certificates#:}; do echo $certificate | openssl x509 -noout -text; done; IFS=$OLDIFS) | egrep 'Certificate:|Subject:|Algorithm'
Certificate:
Signature Algorithm: sha256WithRSAEncryption
Subject: CN = lencr.org
Public Key Algorithm: id-ecPublicKey
Signature Algorithm: sha256WithRSAEncryption
Certificate:
Signature Algorithm: sha256WithRSAEncryption
Subject: C = US, O = Let's Encrypt, CN = R3
Public Key Algorithm: rsaEncryption
Signature Algorithm: sha256WithRSAEncryption
Certificate:
Signature Algorithm: sha256WithRSAEncryption
Subject: C = US, O = Internet Security Research Group, CN = ISRG Root X1
Public Key Algorithm: rsaEncryption
Signature Algorithm: sha256WithRSAEncryption
```
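Reading these listings comes down to one rule: `Public Key Algorithm` is the key the certificate carries, while `Signature Algorithm` is how its issuer signed it. A small sketch that applies that rule to the filtered output and marks certificates whose key family differs from the signing family (like `lencr.org` above, an ECDSA key signed by RSA R3). `summarize_chain` is a hypothetical name, and the name-to-family mapping only covers the algorithm names seen in this thread.

```bash
# summarize_chain (hypothetical helper): read the filtered `openssl x509 -text`
# output (Certificate:/Subject:/Algorithm lines) on stdin and print one line
# per certificate: "<subject> | key: <family> | signed with: <family>",
# appending "(mixed)" when the key family differs from the signing family.
summarize_chain() {
  awk '
    # Map an OpenSSL algorithm name to a coarse family (only the names seen here).
    function family(alg) {
      if (alg ~ /^ecdsa-with-/ || alg == "id-ecPublicKey") return "EC"
      if (tolower(alg) ~ /rsa/) return "RSA"
      return "other"
    }
    # Print the certificate collected so far, then reset the fields.
    function flush() {
      if (subject == "") return
      line = subject " | key: " key " | signed with: " sig
      if (key != sig) line = line " (mixed)"
      print line
      subject = key = sig = ""
    }
    { sub(/^[ \t]+/, "") }                   # drop openssl indentation
    /^Certificate:/          { flush(); next }
    /^Signature Algorithm:/  { if (sig == "") sig = family($3); next }
    /^Subject:/              { subject = substr($0, 10); next }
    /^Public Key Algorithm:/ { key = family($4); next }
    END { flush() }
  '
}
```

Appending `| summarize_chain` to one of the one-liners above should print one line per certificate; for letsencrypt.org only the `lencr.org` leaf would be flagged `(mixed)`.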

If the current default certbot certificate doesn’t work in Duplicati, that’s a big deal, so I dug some more.

Fortunately, Linux looks fine. If things are different on macOS, it might even depend on the macOS version.

I’m still wondering, though, whether the site’s earlier insistence on TLS 1.3 was what caused the handshake issue.
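One way to check that idea directly, independent of SSL Labs, is to ask the server which protocol versions it will complete a handshake with. A minimal bash sketch: `tls_version_accepted` is a made-up name, it assumes OpenSSL 1.1.1 or later (needed for `-tls1_3`), and it judges success by parsing the `New, ..., Cipher is ...` summary line that `s_client` prints, which is an assumption about the output format rather than a documented interface. macOS’s bundled `openssl` is LibreSSL, which may behave differently.

```bash
# tls_version_accepted HOST VERSION [PORT]  (hypothetical helper)
# VERSION is 1.2 or 1.3. Forces openssl s_client to that single protocol
# version and returns 0 only if the handshake negotiated a cipher.
tls_version_accepted() {
  local host=$1 version=$2 port=${3:-443} flag
  case $version in
    1.2) flag=-tls1_2 ;;
    1.3) flag=-tls1_3 ;;   # needs OpenSSL 1.1.1 or later
    *)   echo "unsupported version: $version" >&2; return 2 ;;
  esac
  # Assumed output format: on success s_client prints e.g.
  # "New, TLSv1.3, Cipher is ...", on a failed handshake the same line
  # reads "New, (NONE), Cipher is (NONE)", and on a connect error it is absent.
  openssl s_client -connect "$host:$port" -servername "$host" "$flag" \
      </dev/null 2>/dev/null |
    grep -E '^(New|Reused), ' | grep -qvF '(NONE)'
}
```

Running it for both 1.2 and 1.3 against the server in question, before and after a configuration change, would show whether TLS 1.2 was actually refused at the time.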