Hi!

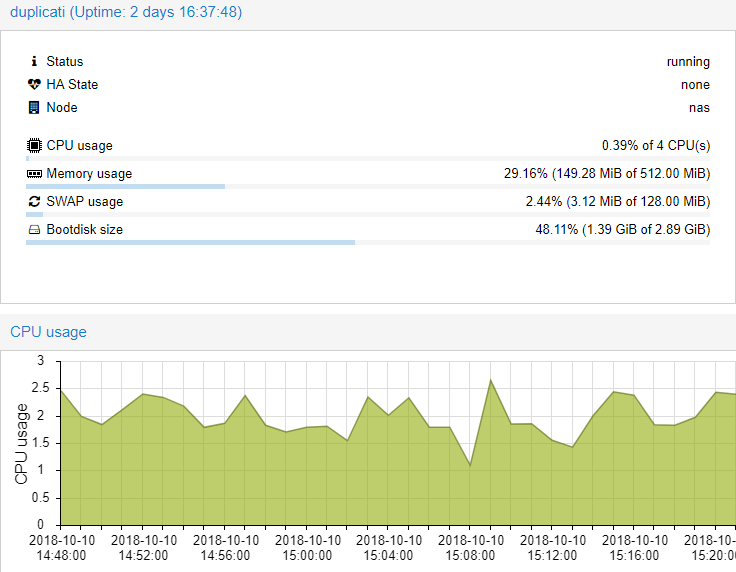

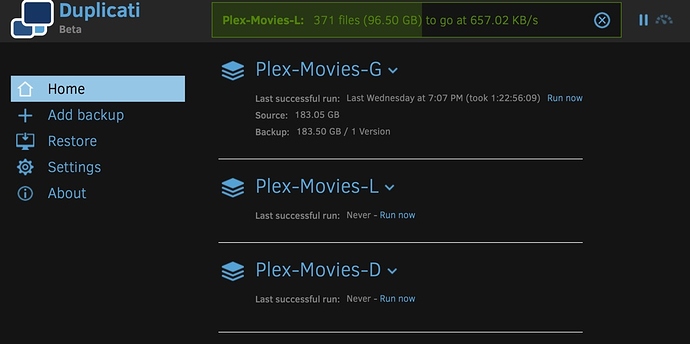

I successfully uploaded 20 jobs (more than 1.5 TB) to B2, but after that, the 21st job simply doesn’t work.

Or rather, it works for some time and then hangs. After I stop or pause the job, it takes forever to stop and does not start again.

Is there some kind of limit to the number of configured jobs?

Duplicati - 2.0.3.3_beta_2018-04-02

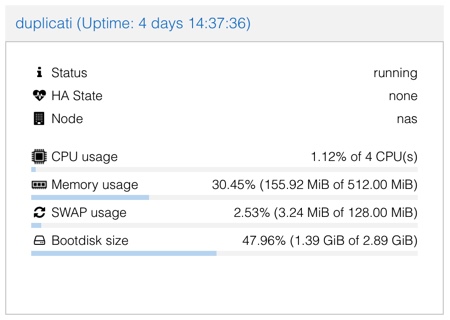

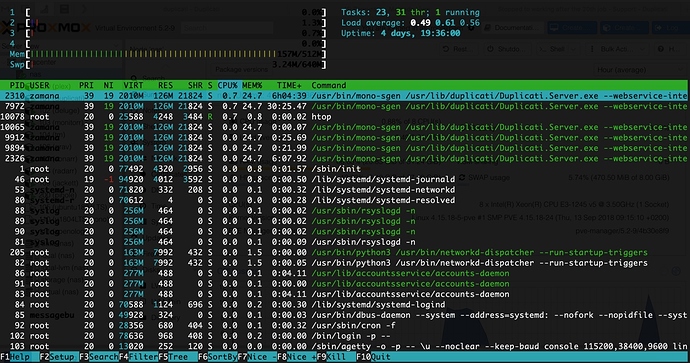

OS: Ubuntu 18.04.1 LTS (inside a Proxmox container)

Mono JIT compiler version 5.14.0.177 (tarball Mon Aug 6 09:07:45 UTC 2018)

Latest error message:

Fatal error

System.Threading.ThreadAbortException: Thread was being aborted.

at (wrapper managed-to-native) System.Threading.WaitHandle.Wait_internal(intptr*,int,bool,int)

at System.Threading.WaitHandle.WaitOneNative (System.Runtime.InteropServices.SafeHandle waitableSafeHandle, System.UInt32 millisecondsTimeout, System.Boolean hasThreadAffinity, System.Boolean exitContext) [0x00044] in <2943701620b54f86b436d3ffad010412>:0

at System.Threading.WaitHandle.InternalWaitOne (System.Runtime.InteropServices.SafeHandle waitableSafeHandle, System.Int64 millisecondsTimeout, System.Boolean hasThreadAffinity, System.Boolean exitContext) [0x00014] in <2943701620b54f86b436d3ffad010412>:0

at System.Threading.WaitHandle.WaitOne (System.Int64 timeout, System.Boolean exitContext) [0x00000] in <2943701620b54f86b436d3ffad010412>:0

at System.Threading.WaitHandle.WaitOne (System.Int32 millisecondsTimeout, System.Boolean exitContext) [0x00019] in <2943701620b54f86b436d3ffad010412>:0

at System.Threading.WaitHandle.WaitOne () [0x00000] in <2943701620b54f86b436d3ffad010412>:0

at Duplicati.Library.Main.BackendManager+FileEntryItem.WaitForComplete () [0x00000] in <ae134c5a9abb455eb7f06c134d211773>:0

at Duplicati.Library.Main.BackendManager.List () [0x0003b] in <ae134c5a9abb455eb7f06c134d211773>:0

at Duplicati.Library.Main.Operation.FilelistProcessor.RemoteListAnalysis (Duplicati.Library.Main.BackendManager backend, Duplicati.Library.Main.Options options, Duplicati.Library.Main.Database.LocalDatabase database, Duplicati.Library.Main.IBackendWriter log, System.String protectedfile) [0x0000d] in <ae134c5a9abb455eb7f06c134d211773>:0

at Duplicati.Library.Main.Operation.FilelistProcessor.VerifyRemoteList (Duplicati.Library.Main.BackendManager backend, Duplicati.Library.Main.Options options, Duplicati.Library.Main.Database.LocalDatabase database, Duplicati.Library.Main.IBackendWriter log, System.String protectedfile) [0x00000] in <ae134c5a9abb455eb7f06c134d211773>:0

at Duplicati.Library.Main.Operation.BackupHandler.PreBackupVerify (Duplicati.Library.Main.BackendManager backend, System.String protectedfile) [0x000fd] in <ae134c5a9abb455eb7f06c134d211773>:0

at Duplicati.Library.Main.Operation.BackupHandler.Run (System.String[] sources, Duplicati.Library.Utility.IFilter filter) [0x003c6] in <ae134c5a9abb455eb7f06c134d211773>:0
