Looking at prior reports, I see Canary was being used in June 2019. Maybe it hit the problem below:

dblock put retry corrupts dindex, using old dblock name for index – canary regression #3932

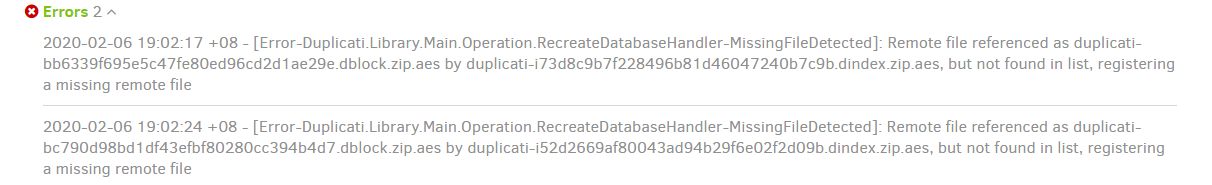

2019-09-30 18:21:34 -04 - [Error-Duplicati.Library.Main.Operation.RecreateDatabaseHandler-MissingFileDetected]: Remote file referenced as duplicati-b53f2fc838c5e4d08b98125d606b36445.dblock.zip by duplicati-i1e699e9790774a88a06f9211e03711d6.dindex.zip, but not found in list, registering a missing remote file

This looks a lot like the MissingFileDetected errors seen in the original post. The bug was in Canary from about v2.0.4.16-2.0.4.16_canary_2019-03-28 until it was fixed in v2.0.4.31-2.0.4.31_canary_2019-10-19. The release note said:

Fixed a retry error where uploaded dindex -files would reference non-existing dblock files, thanks @warwickmm

But it would be hard to say for sure without some deep research. Canary does have its risks, but those who can tolerate some risk and report issues early help keep bugs from moving into later channels like Beta.
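As a rough first check, a small helper can compare a version string against that approximate range. This is a hypothetical sketch, not a Duplicati tool: the function names are made up, the boundaries are the "about" values above, and what matters is the version that *wrote* a given dindex file, not the version installed today. Being in the range only means the bug was possible.

```python
# Hypothetical helper (not part of Duplicati): is a version inside the
# approximate Canary range for issue #3932? Boundaries are approximate.

def parse_version(text: str) -> tuple:
    """Turn '2.0.4.31' or 'v2.0.4.31-2.0.4.31_canary_2019-10-19' into (2, 0, 4, 31)."""
    core = text.lstrip("v").split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))

BUG_INTRODUCED = parse_version("2.0.4.16")  # approximate start of the regression
BUG_FIXED = parse_version("2.0.4.31")       # release whose note mentions the fix

def possibly_affected(version: str) -> bool:
    """True if the version is at or after the introduction and before the fix."""
    return BUG_INTRODUCED <= parse_version(version) < BUG_FIXED

for v in ["v2.0.4.16-2.0.4.16_canary_2019-03-28", "v2.0.4.31-2.0.4.31_canary_2019-10-19"]:
    print(v, possibly_affected(v))
```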

I don’t know if you did that this time, but if you did not, the Recreate will probably have the same errors, assuming the problem is actually in the remote files. Newer Duplicati shouldn’t add any further errors…

You can (I think), but it’s awkward.

Duplicati.GUI.TrayIcon.exe is designed to allow multiple copies, probably mainly with multiple users on servers in mind, but it can also be run more than once by one user if you keep the databases separate, for example by adding --server-datafolder to one copy to override its usual location in your profile's AppData. One quirk is that the copies somehow collide if opened in the same browser. The workaround is to use a second browser.

The next challenge is that Canary updates need to be kept away from the Beta side, and I'm not sure that happens automatically just because the channels differ. If not, setting AUTOUPDATER_Duplicati_SKIP_UPDATE=true before starting the Beta copy (installed from a .msi) should keep it from picking up any Canary updates…
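The two settings above can be pictured as a pair of launch specs. This is a minimal sketch that only builds the command lines and environments and launches nothing. The install path, the data folder path, and the `build_launch` helper are assumptions for illustration; only `--server-datafolder` and `AUTOUPDATER_Duplicati_SKIP_UPDATE` come from the post. Which copy gets the separate data folder is arbitrary.

```python
import os
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Assumed default install location; adjust to the actual .msi install path.
TRAYICON = r"C:\Program Files\Duplicati 2\Duplicati.GUI.TrayIcon.exe"
SKIP_VAR = "AUTOUPDATER_Duplicati_SKIP_UPDATE"

@dataclass
class LaunchSpec:
    args: List[str]
    env: Dict[str, str] = field(default_factory=dict)

def build_launch(datafolder: Optional[str], skip_update: bool) -> LaunchSpec:
    """Hypothetical helper: build the argv and environment for one TrayIcon copy."""
    args = [TRAYICON]
    if datafolder is not None:
        # Keep this copy's databases out of the usual AppData location.
        args.append(f"--server-datafolder={datafolder}")
    env = dict(os.environ)
    if skip_update:
        # Run the installed (Beta) copy itself instead of any downloaded update.
        env[SKIP_VAR] = "true"
    else:
        # Make sure an inherited setting doesn't block the Canary side.
        env.pop(SKIP_VAR, None)
    return LaunchSpec(args, env)

beta = build_launch(datafolder=None, skip_update=True)
canary = build_launch(datafolder=r"C:\DuplicatiCanaryTest", skip_update=False)  # example path
# On Windows, one could then start each with: subprocess.Popen(spec.args, env=spec.env)
```

Each copy would still be opened in its own browser, per the quirk mentioned earlier.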

The Duplicati autoupdater puts updates in a different location, so what happens is that the Program Files copy starts, then launches the latest update as a child process. So the Program Files copy can launch Canary for one side of the test, while running as itself (no child) for the Beta side. The Beta side won't get the usual autoupdates, but Beta releases are infrequent anyway.
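The launch behavior described above can be modeled roughly as follows. This is a simplified, hypothetical model, not Duplicati's actual autoupdater code: `choose_program` is an invented name, and the rule "run the newest downloaded update if it is newer than the installed copy, unless skipping is requested" is an assumption that matches the description rather than a documented algorithm.

```python
from typing import List

def parse_version(text: str) -> tuple:
    """Turn '2.0.4.31' or 'v2.0.4.31-2.0.4.31_canary_2019-10-19' into (2, 0, 4, 31)."""
    core = text.lstrip("v").split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))

def choose_program(installed: str, update_versions: List[str], skip_update: bool) -> str:
    """Return the version that would end up running (simplified model)."""
    if skip_update or not update_versions:
        # The Program Files copy runs as itself, with no child process.
        return installed
    newest = max(update_versions, key=parse_version)
    if parse_version(newest) > parse_version(installed):
        # The Program Files copy starts this update as a child.
        return newest
    return installed

# Example version strings only: same install, with and without the skip setting.
print(choose_program("2.0.4.23", ["2.0.4.31"], skip_update=True))
print(choose_program("2.0.4.23", ["2.0.4.31"], skip_update=False))
```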