I have a multi-terabyte backup on Amazon Cloud Drive, and I wonder whether Duplicati would recognize it if I transferred it directly from Amazon to Backblaze B2 using MultCloud. That would save me an incredible amount of time compared to uploading all that crap again…

If you’re asking whether or not MOVING your archive (dblock, etc.) files from Amazon to B2 and pointing your existing backup job to the new destination would work, then the answer is yes. (Bonus - you get to keep your existing historical versions!)

If you’re asking whether you can COPY the destination files, “clone” your existing backup job into a second job, and point that job at the new location (ending up with two jobs backing up to two different destinations), my GUESS is that you’ll run into naming conflicts with the local SQLite database (dbpath). I could be wrong on that, though; I’m not exactly sure what triggers a new SQLite file name.

I don’t see any problems with moving. If MultCloud can do it without using your machine as a middle layer, it could be fast-ish.

(I assume you just want to change the backend/destination for the backup).

Okay, so in principle it’s possible. That’s good to know. Now let’s see if it makes sense in my case. Here are some potential caveats:

- I have already deleted the backup job that was backing up to Amazon

- I have also deleted the database because I was unable to repair it (see our conversation on Gitter earlier this year)

- The total size of the archive is 10 TB, and I’m not sure why it’s so large (I didn’t care much on the Amazon unlimited plan), because I don’t have more than maybe 7-8 TB of uncompressed data. So I would want to weed out that backup once it is transferred (I no longer have write access to Amazon). Is that possible? For example: delete all versions of a file except the latest, and delete all versions of the files in folder ABC?

n00b question: Is there any more to moving a backup dest other than 1) move the files and 2) point the job to the new location?

Nope. That should really be all that’s needed.

Yes, but if the ultimate goal is to reduce destination space usage there will be downloads / re-uploads involved as Duplicati will need to download the archive (dblock) files to remove the deleted versions from the archives.

Note that I believe there is a destination-side tool available for this kind of maintenance if you are able to run code at your destination.

Alternatively, if you have access to a location with a faster connection than usual, you might want to consider pausing your source backup and then using a portable install of Duplicati to do the cleanup work over that faster connection.

Since you’re coming from Amazon maybe you could get a temporary “server” there just to do the cleanup before moving things.

Lastly, for both you and the other poster who asked, it might be a good idea to create a small test backup to your current provider which you can then use to do a practice move to the new provider.

Edit - Paranoia:

I should note that wasted-space cleanup (like deleting file versions) involves decrypting and decompressing the archive (dblock) files, which means that for the duration of the process your BLOCK (not necessarily individual file) information will be stored unencrypted wherever the cleanup is running.

So if you’re super paranoid, you might want to take the hit on bandwidth and time and keep the processing local on your own machines.

You can work around this a bit. If you are only interested in saving space by deleting stuff, you can set --threshold=100 to only “compact” files that are 100% wasted (i.e. fully unused files). This will still remove stuff after you delete the sets, but without trying to download/rebuild/upload volumes that have some unused blocks.
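To make the `--threshold` trade-off concrete, here is a minimal sketch of a *simplified* model of a per-volume waste threshold. The `Volume` class and `plan_compact` function are hypothetical, and Duplicati's real compaction logic is more involved (its option description says the percentage is also applied to the total storage, for example), so treat this only as an illustration of why `--threshold=100` avoids the download/re-upload step.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    """Hypothetical summary of one remote dblock volume."""
    name: str
    size_bytes: int
    unused_bytes: int  # bytes that only belong to deleted versions

    @property
    def wasted_percent(self) -> float:
        return 100.0 * self.unused_bytes / self.size_bytes


def plan_compact(volumes: list[Volume], threshold: float = 25.0) -> dict[str, list[str]]:
    """Classify volumes under a simplified per-volume threshold model.

    "delete":  100% unused, can be removed without downloading it
    "rewrite": partly unused with waste above the threshold, would be
               downloaded, repacked and re-uploaded
    "keep":    everything else is left alone
    """
    plan: dict[str, list[str]] = {"delete": [], "rewrite": [], "keep": []}
    for vol in volumes:
        if vol.unused_bytes >= vol.size_bytes:
            plan["delete"].append(vol.name)
        elif vol.wasted_percent > threshold:
            plan["rewrite"].append(vol.name)
        else:
            plan["keep"].append(vol.name)
    return plan


vols = [Volume("a", 100, 100), Volume("b", 100, 40), Volume("c", 100, 10)]
print(plan_compact(vols))                 # default-style threshold: "b" gets rewritten
print(plan_compact(vols, threshold=100))  # only the fully unused "a" is touched
```

With a threshold of 100, no partly used volume can exceed it, so in this model the only work left is deleting volumes that are entirely unused, which needs no downloads at all.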

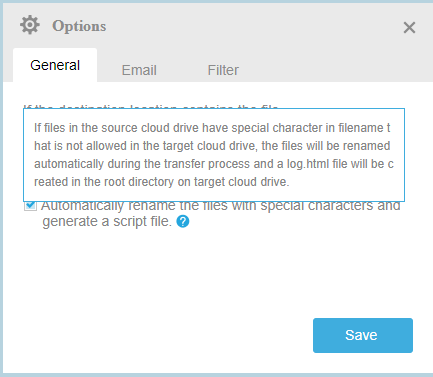

Here is another potential caveat. MultCloud has this option when transferring files between cloud storage services:

What you are seeing is the option “Automatically rename the files with special characters and generate a script file,” along with the mouse-over popup for that option.

I don’t know whether there are any special characters on my Amazon Cloud Drive that are not allowed on Backblaze (I’d assume not), but what happens if files are renamed? I suppose that renders them completely useless?

Unless they’re renamed back, they’ll be worse than useless:

- They’ll cause “unexpected files found” errors (I think a cleanup resolves that)

- The renamed files (and ONLY those) will have to be re-generated by the client and re-uploaded (I’m not sure, but this might potentially involve loss of historical versions, though NOT of current file contents)

All that being said, unless you included goofy characters in a prefix setting, the dblock and dindex file names that Duplicati creates at the destination are formatted something like “duplicati-” plus a one-letter type marker (“b” for dblock, “i” for dindex), a 32-character hex string (so 0-9 and a-f), “.dblock.” or “.dindex.”, the compression type (“zip” or “7z”), and the encryption type if used (like “.aes”). For example: duplicati-i9bf5425674cd4613847c58c6d2454422.dindex.7z.aes

The dlist file names are simply something like duplicati-20170823T024101Z.dlist.zip.aes. So in all cases nothing unusual is used by default, and you should be safe.
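If you want to check a transferred folder for files that a copy tool renamed, a regular expression for the naming scheme described above is enough. This is a minimal sketch: the pattern is reconstructed from the example names in this thread, it assumes the default “duplicati” prefix, and it assumes the only encryption suffixes are “.aes” or “.gpg”. The function names are hypothetical.

```python
import re

# Assumed naming scheme (from the examples above):
#   <prefix>-b<32 hex>.dblock.<zip|7z>[.aes|.gpg]
#   <prefix>-i<32 hex>.dindex.<zip|7z>[.aes|.gpg]
#   <prefix>-<YYYYMMDD>T<HHMMSS>Z.dlist.<zip|7z>[.aes|.gpg]
def remote_name_pattern(prefix: str = "duplicati") -> re.Pattern:
    return re.compile(
        re.escape(prefix)
        + r"-(?:b[0-9a-f]{32}\.dblock"
        + r"|i[0-9a-f]{32}\.dindex"
        + r"|\d{8}T\d{6}Z\.dlist)"
        + r"\.(?:zip|7z)(?:\.(?:aes|gpg))?"
    )


def find_suspect_names(names: list[str], prefix: str = "duplicati") -> list[str]:
    """Return the names that do NOT look like Duplicati remote files."""
    pattern = remote_name_pattern(prefix)
    return [name for name in names if pattern.fullmatch(name) is None]


listing = [
    "duplicati-i9bf5425674cd4613847c58c6d2454422.dindex.7z.aes",
    "duplicati-20170823T024101Z.dlist.zip.aes",
    "duplicati-20170823T024101Z.dlist (1).zip.aes",  # e.g. renamed by a copy tool
]
print(find_suspect_names(listing))
```

Anything this returns is a candidate for the “unexpected files found” situation described above and would need to be renamed back (or dealt with) before pointing the job at the new destination.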

Well, even so, as long as the backup still works for the files and versions that aren’t affected, it’s still okay. I’m still saving piles of upload time compared to uploading everything again.

Yep. Duplicati’s design is pretty robust in terms of recovering what it can even from a backup missing both config and some destination files.

I expect a straight move will go quite smoothly, it’s only the cleanup step that makes it messy. And with how relatively cheap (or free) an AWS VPS can be, in your instance even that should be achievable pretty easily.

Please let us know how it goes and what steps you use when you try it. As time goes on and cloud providers come / go / change rates I expect others will be wanting to do the same thing.

Looking at what I have in terms of filenames in my B2 and OneDrive backups (as well as Duplicati’s naming scheme), I really doubt this would be an issue. Frankly, a cloud that cannot support these filenames would be damn near useless…

Just an idea: if you want to have some more control over the process, get a VPS and use rclone. Scaleway is probably the cheapest option for something like that and has worked splendidly for mirroring stuff from OneDrive to B2 for me.

For someone who is not really an expert in cloud computing and server management (though it’s fascinating how much you can do by googling), I’m not sure how much control I’d actually have.

But nonetheless, thanks for the idea. I will consider it, especially since my MultCloud transfers keep failing (Waiting for a response from their support).

However, it looks like rclone is not the right tool for this particular transfer, as Amazon has apparently blocked it from Cloud Drive.

Possibly with the “Proxy for Amazon Cloud Drive” howto on the rclone forum you could still use rclone. Not having ACD, I can’t really try it, though.

Yes, I saw that but tbh, this is getting way too complicated to accomplish what I’m trying to do…

I guess ultimately it depends on how fast your own connection is and how urgently you want to move the data… A cheap VPS can probably manage 100-150 Mbit/s with rclone (that’s what I got out of Scaleway, anyway); some even have gigabit, but I am a bit sceptical the CPU would be up to that.
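To put those speeds in perspective for the 10 TB archive mentioned earlier, here is a quick back-of-the-envelope calculation. It assumes decimal units (1 TB = 10^12 bytes, 1 Mbit = 10^6 bits) and a link that stays saturated the whole time, which is optimistic; the gigabit row is only for comparison.

```python
def transfer_days(size_tb: float, rate_mbit_s: float) -> float:
    """Days needed to move size_tb terabytes at a sustained rate_mbit_s.

    Decimal units: 1 TB = 10**12 bytes, 1 Mbit = 10**6 bits.
    Assumes the link is saturated the whole time (optimistic).
    """
    bits = size_tb * 10**12 * 8
    seconds = bits / (rate_mbit_s * 10**6)
    return seconds / 86_400  # seconds per day


for rate in (100, 150, 1000):
    print(f"10 TB at {rate} Mbit/s: {transfer_days(10, rate):.1f} days")
# 10 TB at 100 Mbit/s: 9.3 days
# 10 TB at 150 Mbit/s: 6.2 days
# 10 TB at 1000 Mbit/s: 0.9 days
```

So even a modest VPS turns the move into roughly a week of unattended copying, which is the main argument for not routing the data through a slower home connection.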

Okay, you’re right. I’m a lazy butt and I should see it as a learning exercise. I will look into it. But is rclone the only tool suitable for this kind of transfer?

Was that with the ARM or Intel cores? Or does it matter?

Edit: Sorry, I realize that the ARM is a bare metal server and you were talking about VPS. I get it.

Well, it’s the only multi-cloud command-line tool I found (then again, I did not look for very long). So if you want to use a VPS to accelerate the process, it seems like the perfect solution.

The goal is to get the destination files (dblock, dindex, and dlist) from one cloud to the other, preferably without going through your local machine, right? If you get a short-term VPS running and can connect to both the source and the destination from it, then you should be able to use whatever file transfer tools you’re familiar with.
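Whichever tool does the copy, it is worth confirming that every file arrived before pointing the job at B2. A minimal sketch, assuming you can export each side’s listing as `{file name: size in bytes}` (how you obtain those listings depends on the tool and is not shown); `compare_listings` is a hypothetical helper, and matching sizes are a weaker check than comparing hashes.

```python
def compare_listings(source: dict[str, int], dest: dict[str, int]) -> dict[str, list[str]]:
    """Compare {file name: size in bytes} listings of the old and new destination.

    "missing":       present at the source, absent at the destination
    "extra":         present only at the destination (e.g. renamed copies)
    "size_mismatch": present on both sides but with different sizes
    """
    missing = sorted(name for name in source if name not in dest)
    extra = sorted(name for name in dest if name not in source)
    size_mismatch = sorted(
        name for name in source if name in dest and source[name] != dest[name]
    )
    return {"missing": missing, "extra": extra, "size_mismatch": size_mismatch}


# Hypothetical listings for illustration only.
old = {"a.dblock.zip.aes": 10, "b.dindex.zip.aes": 2, "c.dlist.zip.aes": 3}
new = {"a.dblock.zip.aes": 10, "b.dindex.zip.aes": 1, "c_renamed.dlist.zip.aes": 3}
print(compare_listings(old, new))
```

An empty result in all three lists is a reasonable precondition for switching the backup job over; anything in “extra” is likely to trigger the “unexpected files found” errors mentioned earlier.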