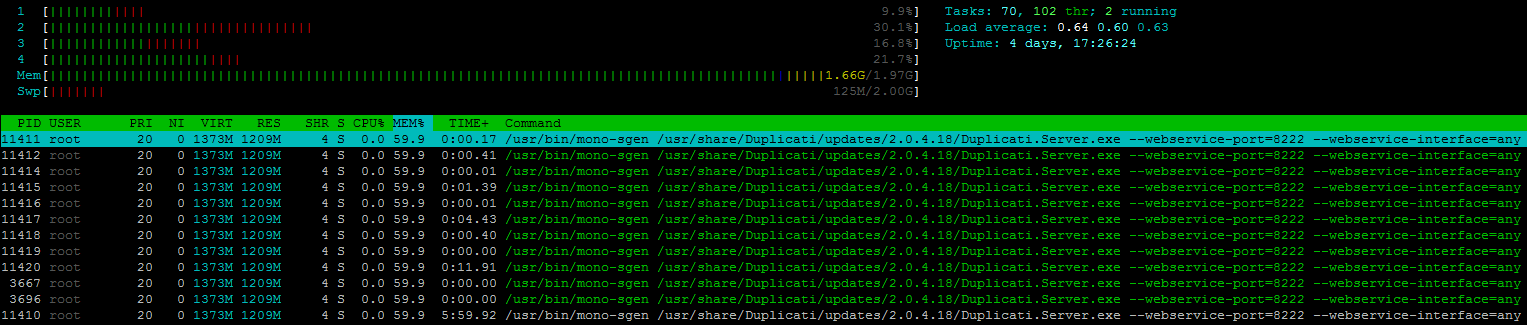

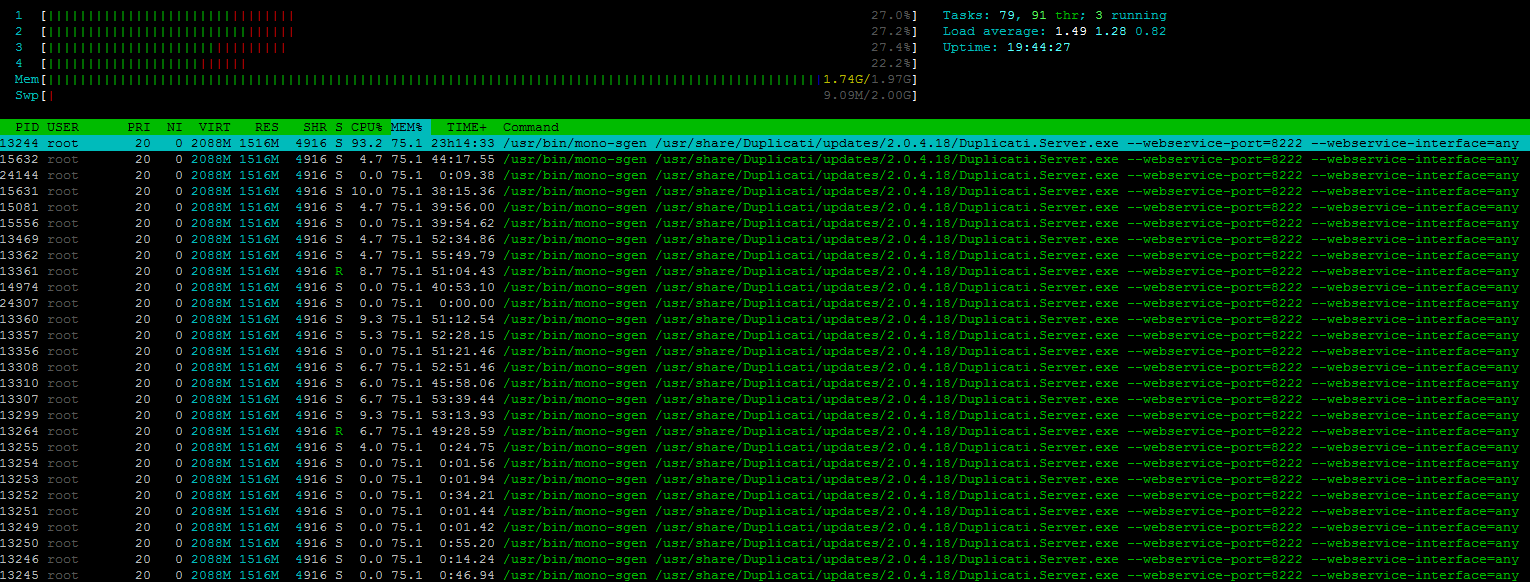

Hello. I have a problem with backups on Debian 9 (Duplicati 2.0.4.18_canary_2019-05-12): while verification is in progress, memory usage keeps growing, as if memory is leaking. Eventually, when the server runs out of memory, Duplicati crashes. This is the backup command:

```shell
mono /usr/lib/duplicati/Duplicati.CommandLine.exe backup onedrivev2://backup/doc /mnt/media/doc/ \
  --backup-name=doc \
  --dbpath=/root/.config/Duplicati/71877079907668816966.sqlite \
  --encryption-module=aes \
  --compression-module=zip \
  --dblock-size=500MB \
  --exclude-files-attributes=temporary \
  --disable-module=console-password-input
```
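The memory growth shown in the screenshots could also be recorded as text while the backup above runs. Below is a minimal bash sketch, assuming Linux: it reads `VmRSS` (resident memory, in kB) from `/proc/<pid>/status`. The function names `rss_kb` and `sample_rss` are hypothetical, and finding the Duplicati PID with `pgrep -f Duplicati.CommandLine.exe` is an assumption about how the process shows up in the process list.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for logging a process's memory; not part of Duplicati.

# Print the resident memory (VmRSS, in kB) of PID $1, read from /proc (Linux only).
rss_kb() {
  awk '/^VmRSS:/ { print $2 }' "/proc/$1/status"
}

# Sample PID $1 every $2 seconds, at most $3 times, printing "<unix-time> <rss-kB>".
# Stops early if the process has exited (its /proc entry is gone).
sample_rss() {
  local pid=$1 interval=$2 count=$3 i
  for ((i = 0; i < count; i++)); do
    [[ -r /proc/$pid/status ]] || break
    printf '%s %s\n' "$(date +%s)" "$(rss_kb "$pid")"
    sleep "$interval"
  done
}
```

For example, `sample_rss "$(pgrep -f Duplicati.CommandLine.exe | head -n 1)" 60 600 > duplicati-rss.log` would take one sample per minute for up to ten hours, so the log shows how fast memory grows during verification and when the process dies.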

I also cannot restore selected files. The restore job ends with this result:

```json
{
  "RestoredFiles": 0,
  "SizeOfRestoredFiles": 0,
  "RestoredFolders": 0,
  "RestoredSymlinks": 0,
  "PatchedFiles": 0,
  "DeletedFiles": 0,
  "DeletedFolders": 0,
  "DeletedSymlinks": 0,
  "MainOperation": "Restore",
  "RecreateDatabaseResults": null,
  "ParsedResult": "Error",
  "Version": "2.0.4.18 (2.0.4.18_canary_2019-05-12)",
  "EndTime": "2019-05-14T06:58:15.085786Z",
  "BeginTime": "2019-05-14T06:47:53.765557Z",
  "Duration": "00:10:21.3202290",
  "MessagesActualLength": 16,
  "WarningsActualLength": 0,
  "ErrorsActualLength": 3,
  "Messages": [
    "2019-05-14 10:47:54 +04 - [Information-Duplicati.Library.Main.Controller-StartingOperation]: The operation Restore has started",
    "2019-05-14 10:48:58 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Started: ()",
    "2019-05-14 10:49:08 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: List - Completed: (186 bytes)",
    "2019-05-14 10:49:11 +04 - [Information-Duplicati.Library.Main.Database.LocalRestoreDatabase-SearchingBackup]: Searching backup 0 (5/9/2019 10:50:27 AM) ...",
    "2019-05-14 10:49:14 +04 - [Information-Duplicati.Library.Main.Operation.RestoreHandler-RemoteFileCount]: 1 remote files are required to restore",
    "2019-05-14 10:49:14 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:50:54 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Retrying: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:51:04 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:52:44 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Retrying: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:52:54 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:54:34 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Retrying: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:54:44 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:56:24 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Retrying: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:56:34 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Started: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:58:14 +04 - [Information-Duplicati.Library.Main.BasicResults-BackendEvent]: Backend event: Get - Failed: duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes (360.26 MB)",
    "2019-05-14 10:58:15 +04 - [Information-Duplicati.Library.Main.Operation.RestoreHandler-RestoreFailures]: Failed to restore 2 files, additionally the following files failed to download, which may be the cause:\nduplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes"
  ],
  "Warnings": [],
  "Errors": [
    "2019-05-14 10:58:14 +04 - [Error-Duplicati.Library.Main.Operation.RestoreHandler-PatchingFailed]: Failed to patch with remote file: \"duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes\", message: A task was canceled.",
    "2019-05-14 10:58:15 +04 - [Error-Duplicati.Library.Main.Operation.RestoreHandler-RestoreFileFailed]: Could not find file \"/mnt/media/doc/books/programming/prepro/Khaggarti_R_-_Diskretnaya_matematika_dlya_progr.pdf\".",
    "2019-05-14 10:58:15 +04 - [Error-Duplicati.Library.Main.Operation.RestoreHandler-RestoreFileFailed]: Could not find file \"/mnt/media/doc/books/programming/assembler/Туториалы Iczelion`а на русском (MASM для Windows).chm\"."
  ],
  "BackendStatistics": {
    "RemoteCalls": 6,
    "BytesUploaded": 0,
    "BytesDownloaded": 0,
    "FilesUploaded": 0,
    "FilesDownloaded": 0,
    "FilesDeleted": 0,
    "FoldersCreated": 0,
    "RetryAttempts": 4,
    "UnknownFileSize": 0,
    "UnknownFileCount": 0,
    "KnownFileCount": 186,
    "KnownFileSize": 47833600082,
    "LastBackupDate": "2019-05-09T14:50:27+04:00",
    "BackupListCount": 2,
    "TotalQuotaSpace": 5497558138880,
    "FreeQuotaSpace": 4769961182450,
    "AssignedQuotaSpace": -1,
    "ReportedQuotaError": false,
    "ReportedQuotaWarning": false,
    "MainOperation": "Restore",
    "ParsedResult": "Success",
    "Version": "2.0.4.18 (2.0.4.18_canary_2019-05-12)",
    "EndTime": "0001-01-01T00:00:00",
    "BeginTime": "2019-05-14T06:47:53.765584Z",
    "Duration": "00:00:00",
    "MessagesActualLength": 0,
    "WarningsActualLength": 0,
    "ErrorsActualLength": 0,
    "Messages": null,
    "Warnings": null,
    "Errors": null
  }
}
```
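The Get retries in the restore log follow a fixed rhythm. A short bash sketch that computes the gaps between the timestamps copied from the `Messages` array shows that every download attempt of the 360.26 MB dblock ended after exactly 100 seconds and the next attempt started 10 seconds later, five attempts in total. How to read that is an assumption, not something the log proves: 100 seconds is the default `HttpClient.Timeout` in .NET, which would fit the "A task was canceled." error if the OneDrive v2 backend uses that default, and the 10-second pause and attempt count look like Duplicati's `--retry-delay` and `--number-of-retries` settings. The names `GET_EVENTS`, `to_seconds` and `attempt_gaps` are hypothetical.

```shell
#!/usr/bin/env bash
# Get events for duplicati-b685d9add203f4056b7cc7c8315fd5c77.dblock.zip.aes,
# copied from the "Messages" array of the restore result (local time, +04).
GET_EVENTS=(
  "10:49:14 Started"
  "10:50:54 Retrying"
  "10:51:04 Started"
  "10:52:44 Retrying"
  "10:52:54 Started"
  "10:54:34 Retrying"
  "10:54:44 Started"
  "10:56:24 Retrying"
  "10:56:34 Started"
  "10:58:14 Failed"
)

# Convert HH:MM:SS to seconds since midnight; 10# forces base 10 so "08"/"09" parse.
to_seconds() {
  local h m s
  IFS=: read -r h m s <<< "$1"
  echo $(( 10#$h * 3600 + 10#$m * 60 + 10#$s ))
}

# Print the time between consecutive events, e.g. "Started -> Retrying: 100 s".
# Assumption: a constant 100 s per attempt suggests a client-side timeout.
attempt_gaps() {
  local entry t e now prev_t="" prev_e=""
  for entry in "${GET_EVENTS[@]}"; do
    read -r t e <<< "$entry"
    now=$(to_seconds "$t")
    if [[ -n $prev_t ]]; then
      echo "$prev_e -> $e: $(( now - prev_t )) s"
    fi
    prev_t=$now
    prev_e=$e
  done
}
```

Running `attempt_gaps` prints nine lines that alternate between `Started -> Retrying: 100 s` (`Started -> Failed: 100 s` for the last attempt) and `Retrying -> Started: 10 s`.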

Could you help me? I think I should reduce the block size (`--dblock-size`) to 100 MB, but that would not help with the restore problem.

Thanks.