Do you get the same error on the same file even after having removed it from source?

Can you try a restore of that file to see if all is OK?

Yes, same error on the same file after removing it from the source drive. The error is thrown only a few minutes after the backup is started. Perhaps it is a consistency check between the local and remote databases that runs before the file set is even looked at?

When I created the backup set, I started with a subset of the filesystem branch I was following. It completed successfully, so I selected the entire branch and let it run. It’s been performing the backup for the past month with a couple of glitches that were solved by a repair and continue. This is the first repair/continue cycle that didn’t help. I mention all of this because the files that show as available to restore are only those from the last complete backup run. All those added since are not showing in the listing.

What I would try (not being an expert):

- take a backup of the db of your job

- check which dblock has the ID 1356. You will need to look this up in the db. If you don’t know how, let us know.

- move that file out of the remote folder.

- I guess a list-broken-files will give output now.

- if it does, purge them, and see if it worked out.

What is strange however is that the files shown are only those uploaded in the last job. This could be a result of the error, but not sure at all. Therefore: do take a backup of your db (not the config db but the job db).
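For the "take a backup of your db" step, a minimal Python sketch is below. It assumes the job database is an ordinary SQLite file (it opens in DB Browser for SQLite, so that seems safe) and that no backup job is running at the time. The function name `backup_job_db` and the timestamped file name are my own choices, not anything Duplicati provides.

```python
import sqlite3
import time
from pathlib import Path


def backup_job_db(db_path, backup_dir):
    """Copy a SQLite database into backup_dir and return the new file's path.

    Hypothetical helper: uses SQLite's online backup API instead of a raw
    file copy, so the copy is a consistent snapshot of the database.
    """
    src_path = Path(db_path).resolve()
    dest_dir = Path(backup_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)

    stamp = time.strftime("%Y%m%dT%H%M%S")
    dest_path = dest_dir / f"{src_path.stem}-{stamp}{src_path.suffix}"

    # Open the source read-only so the original cannot be modified by accident.
    src = sqlite3.connect(src_path.as_uri() + "?mode=ro", uri=True)
    dest = sqlite3.connect(str(dest_path))
    try:
        src.backup(dest)  # copies every page of the source database
    finally:
        dest.close()
        src.close()
    return dest_path
```

Pass it the path of the job database (not the config database) and a folder outside the backup source. Any spelunking can then be done on the copy.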

I’ve been spelunking in the db using DB Browser for SQLite. I’m not grasping the structure quickly enough to find what I’m looking for. I guess a little help in finding the dblock would be welcome.

I don’t have the structure in front of me either. But there should be something referring to the dblock in the tables, I assume? I might be able to check later this evening.

Not sure if I’m on the right track. The Remotevolume table record with ID 1356 corresponds to an index file. I understood you to mean I should move a dblock file. Am I understanding this correctly?

Sorry, tried to check the DB structure myself, but can’t find my way around either.

It’s definitely not the dindex file, as your error refers to a blocksetid. The remotevolume index is not the index in the error.

@kenkendk: is there a db description available somewhere?

@scottm,

Can you try to run the query below on your DB:

SELECT Remotevolume.Name FROM Remotevolume
INNER JOIN Block ON (Block.VolumeID=Remotevolume.ID)
INNER JOIN BlocksetEntry ON (Block.ID=BlocksetEntry.BlockID)
WHERE BlocksetEntry.BlocksetID=1356;

Query based on this wiki post: Local database format · duplicati/duplicati Wiki · GitHub

This should give you the filename that you should move out of the remote repository. I believe after this, you should run list-broken-files (which now should report this file), and purge-broken-files.

Be sure to first take a backup of your db!
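If you would rather script the lookup than paste it into DB Browser, here is a rough Python equivalent of the query above. It only uses the table and column names that appear in that query. The function name is hypothetical, and I added `DISTINCT` so a volume holding several blocks of the same blockset is listed once. It only reads from the database.

```python
import sqlite3

# Same joins as the SQL above, with the blockset ID passed as a parameter.
VOLUME_QUERY = """
SELECT DISTINCT Remotevolume.Name
FROM Remotevolume
INNER JOIN Block ON Block.VolumeID = Remotevolume.ID
INNER JOIN BlocksetEntry ON Block.ID = BlocksetEntry.BlockID
WHERE BlocksetEntry.BlocksetID = ?
"""


def volumes_for_blockset(conn, blockset_id):
    """Return the sorted names of remote volumes holding blocks of a blockset."""
    rows = conn.execute(VOLUME_QUERY, (blockset_id,)).fetchall()
    return sorted(name for (name,) in rows)
```

Open a connection with `sqlite3.connect(...)` on a copy of the job database and call `volumes_for_blockset(conn, 1356)`. An empty list means no block is linked to that blockset through BlocksetEntry.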

You are quite right, I simply do not have enough resources to follow up on all the issues that people experience. It is simply a case of being more popular than what we have resources for.

If you cannot tolerate some manual hand-holding, then you should not use Duplicati. It is specifically labelled “beta” because we are aware of issues like this and others. I have yet to see a data-loss issue, but there are issues where you would need the recovery tool to get the data back, and that is not how I would like it to be.

If you look at the release notes for the latest two builds, you will see that we are shifting towards having more active developers, so I am no longer the bottleneck.

Since it is all free, I guess you get more than what you paid for. If you have a setup that requires timely responses, you can always get paid support for a number of commercial products.

Support here is based on whatever spare time other people are willing to contribute.

For the catastrophic restore scenario, we do have some tools that might be slow and low in features, but quite robust:

I ran the query, which returned 0 rows. Digging deeper, there is no BlocksetID of 1356 in the BlocksetEntry table. This may well explain why the dbsize is returning 0.

To be clear, I haven’t modified the database in any way. The only query I ran was the SELECT query you provided.

Working backwards, the BlocksetID 1356 doesn’t appear in the Files table, either. The place inside the table that 1356 would exist makes sense alphabetically. Running the query against an adjacent BlocksetID returns a list of remote files, as expected. Since I had removed the offending file from the source folder, this makes sense.

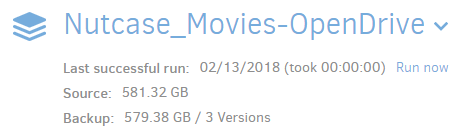

Apparently, this consistency check is comparing against something else, perhaps a remote file. Remember, too, that the files reported by the restore are significantly behind reality. Duplicati reports:

while the remote folder containing the backups totals 1.2TB.

Hopefully, there is a clue for someone somewhere in this information. I’m happy to keep working this. I’ve got several machines backing up successfully. This large media share is the only problem I’m having.

It has to be referenced somewhere… If it is nowhere in the db, it has to be in the remote. But I don’t believe Duplicati checks the content of the remotes.

When exactly do you get this error? Maybe enable profiling to see where in the process this happens?

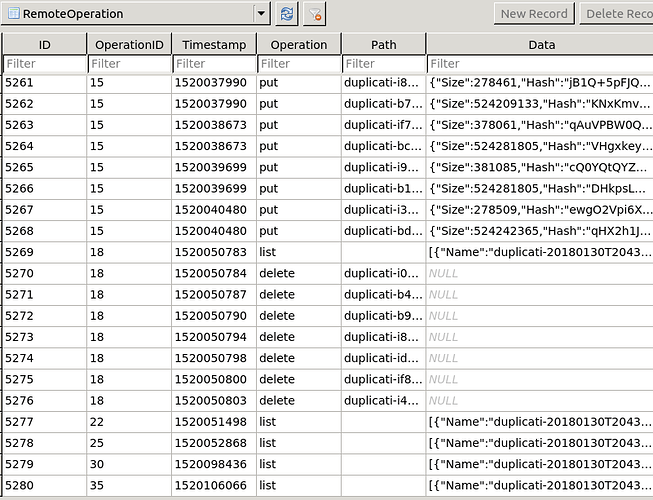

I believe there is also interesting info in the remote operation table. Do you see something there?

I guess the info is in the bug report, but I haven’t had the chance to download it.

The error happens approx 2 minutes after starting a backup run. The status of the GUI shows “Starting” at the top when the error is thrown.

I don’t know how to enable profiling. There seems to be traceback info in the error message quoted in my original post.

The remote operation table’s last entries appear to be a long result from a “list” operation in the form of

{"Name":"duplicati-20180209T191002Z.dlist.zip.aes","LastAccess":"2018-02-12T21:44:07-07:00","LastModification":"2018-02-12T21:44:07-07:00","Size":131613,"IsFolder":false},

{"Name":"duplicati-b000a58ef947e4a0792ca9f9c240fa2de.dblock.zip.aes","LastAccess":"2018-02-09T04:14:17-07:00","LastModification":"2018-02-09T04:14:17-07:00","Size":524255741,"IsFolder":false},

This doesn’t really mean anything to me. Ideas?
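Those entries are the remote folder listing: one record per backup file, where dlist files hold the file list of a backup version, dblock files hold the actual data, and dindex files are the indexes. To make the listing readable, a small Python sketch that counts files and sums sizes per type is below. It assumes the stored value is a complete JSON array of records shaped like the two quoted above. The function name is made up for illustration.

```python
import json
import re
from collections import defaultdict

# File type is taken from the name, e.g. "duplicati-....dblock.zip.aes".
KIND = re.compile(r"\.(dlist|dblock|dindex)\.")


def summarize_listing(listing_json):
    """Return {kind: (file_count, total_bytes)} for a JSON "list" result."""
    totals = defaultdict(lambda: [0, 0])
    for entry in json.loads(listing_json):
        if entry.get("IsFolder"):
            continue  # only files are backup volumes
        match = KIND.search(entry["Name"])
        kind = match.group(1) if match else "other"
        totals[kind][0] += 1
        totals[kind][1] += entry["Size"]
    return {kind: tuple(pair) for kind, pair in totals.items()}
```

The dblock total gives a figure to compare against the size of the remote folder, and the timestamps in the dlist names show which backup versions exist on the remote.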

A bit more information.

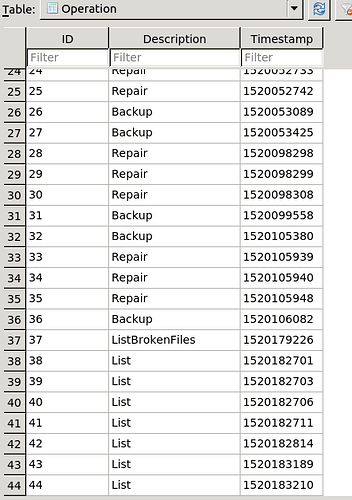

Those final “list” remote operations appear to be the result of “repair” operations in the “Operations” table. The list-broken-files operation didn’t result in any remote operations. Here are the tail ends of the two tables.

Assuming I understand the relationships between these tables, it looks like the backup isn’t looking at the remote data at all at the time it throws the error. I wish I could figure out how to convert the time stamp info to real time. It might be helpful in figuring this out.

Timestamps can be easily converted, just google unix timestamp converter.
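If you prefer to convert them locally, a couple of lines of Python will do. This sketch assumes the timestamp columns hold seconds since the Unix epoch (1970-01-01 UTC). The sample value is one I worked out from a time in the listing above, not a value read from the database.

```python
from datetime import datetime, timezone


def unix_to_utc(seconds):
    """Convert seconds since the Unix epoch to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(seconds, tz=timezone.utc)


# 1518497047 is 2018-02-13 04:44:07 UTC, i.e. 2018-02-12 21:44:07 at UTC-07:00.
print(unix_to_utc(1518497047).isoformat())  # 2018-02-13T04:44:07+00:00
```

Call `.astimezone()` on the result to see the value in the machine's local time zone.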

Can you upgrade to the latest canary? I can’t promise it will solve your problem, but it might. It’s a pretty stable release and has several fixes compared with your version.

I have the same problem, now updated to the latest canary, 2.0.2.20_canary_2018-02-27. I don’t know if the problem appeared with an earlier version:

Found inconsistency in the following files while validating database: /home/imma/Vídeos/zzzz.MTS, actual size 949813248, dbsize 0, blocksetid: 87322 . Run repair to fix it.

Any idea of how to proceed?

Thanks.

I updated to canary. As expected, a repeat of the repair/backup steps yielded the same (failed) result.

Not really new information, just closing the loop.

I have noticed that the Blockset table has an entry for 1356, but there is indeed no remote file linked to that entry (at least not via the BlocksetEntry table).

I guess that is one of the problems. But I have no idea how to fix this.
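To see whether 1356 is the only blockset in that state, something like the following Python sketch could list every Blockset row that has no BlocksetEntry rows at all. It assumes Blockset has `ID` and `Length` columns, which I have not verified against the schema, so adjust the names if they differ. It only reads from the database and does not repair anything.

```python
import sqlite3

# Blocksets with a non-zero length but no rows in BlocksetEntry.
# Column names ID and Length on Blockset are assumptions.
ORPHAN_QUERY = """
SELECT Blockset.ID, Blockset.Length
FROM Blockset
LEFT JOIN BlocksetEntry ON BlocksetEntry.BlocksetID = Blockset.ID
WHERE BlocksetEntry.BlocksetID IS NULL
  AND Blockset.Length > 0
ORDER BY Blockset.ID
"""


def blocksets_without_entries(conn):
    """Return (ID, Length) for each non-empty blockset that has no entries."""
    return conn.execute(ORPHAN_QUERY).fetchall()
```

Run it against a copy of the job database. If 1356 (or 87322 in the other report) shows up in the result, that would match the "dbsize 0" in the error message.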

This is beyond my knowledge… Hope @kenkendk can have a look at this.