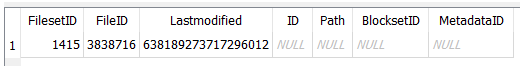

Calculated (a.k.a. found) is 261396, based on query from profiling log, adjusting it for FilesetID 1415.

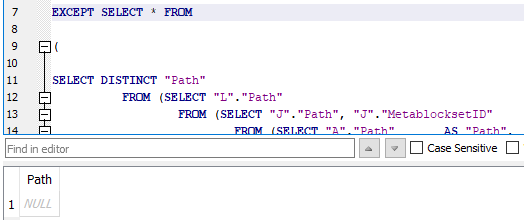

SELECT COUNT(*) FROM (SELECT DISTINCT "Path" FROM (

SELECT

"L"."Path",

"L"."Lastmodified",

"L"."Filelength",

"L"."Filehash",

"L"."Metahash",

"L"."Metalength",

"L"."BlocklistHash",

"L"."FirstBlockHash",

"L"."FirstBlockSize",

"L"."FirstMetaBlockHash",

"L"."FirstMetaBlockSize",

"M"."Hash" AS "MetaBlocklistHash"

FROM

(

SELECT

"J"."Path",

"J"."Lastmodified",

"J"."Filelength",

"J"."Filehash",

"J"."Metahash",

"J"."Metalength",

"K"."Hash" AS "BlocklistHash",

"J"."FirstBlockHash",

"J"."FirstBlockSize",

"J"."FirstMetaBlockHash",

"J"."FirstMetaBlockSize",

"J"."MetablocksetID"

FROM

(

SELECT

"A"."Path" AS "Path",

"D"."Lastmodified" AS "Lastmodified",

"B"."Length" AS "Filelength",

"B"."FullHash" AS "Filehash",

"E"."FullHash" AS "Metahash",

"E"."Length" AS "Metalength",

"A"."BlocksetID" AS "BlocksetID",

"F"."Hash" AS "FirstBlockHash",

"F"."Size" AS "FirstBlockSize",

"H"."Hash" AS "FirstMetaBlockHash",

"H"."Size" AS "FirstMetaBlockSize",

"C"."BlocksetID" AS "MetablocksetID"

FROM

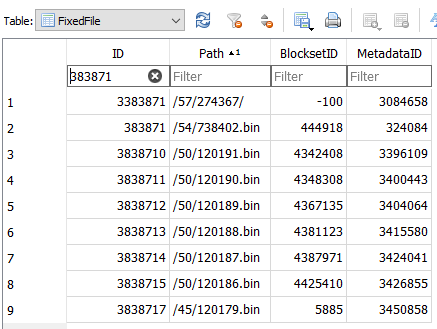

"FixedFile" A

LEFT JOIN "Blockset" B

ON "A"."BlocksetID" = "B"."ID"

LEFT JOIN "Metadataset" C

ON "A"."MetadataID" = "C"."ID"

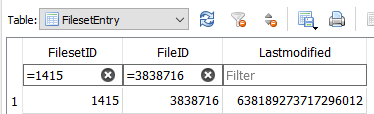

LEFT JOIN "FilesetEntry" D

ON "A"."ID" = "D"."FileID"

LEFT JOIN "Blockset" E

ON "E"."ID" = "C"."BlocksetID"

LEFT JOIN "BlocksetEntry" G

ON "B"."ID" = "G"."BlocksetID"

LEFT JOIN "Block" F

ON "G"."BlockID" = "F"."ID"

LEFT JOIN "BlocksetEntry" I

ON "E"."ID" = "I"."BlocksetID"

LEFT JOIN "Block" H

ON "I"."BlockID" = "H"."ID"

WHERE

"A"."BlocksetId" >= 0 AND

"D"."FilesetID" = 1415 AND

("I"."Index" = 0 OR "I"."Index" IS NULL) AND

("G"."Index" = 0 OR "G"."Index" IS NULL)

) J

LEFT OUTER JOIN

"BlocklistHash" K

ON

"K"."BlocksetID" = "J"."BlocksetID"

ORDER BY "J"."Path", "K"."Index"

) L

LEFT OUTER JOIN

"BlocklistHash" M

ON

"M"."BlocksetID" = "L"."MetablocksetID"

) UNION SELECT DISTINCT "Path" FROM (

SELECT

"G"."BlocksetID",

"G"."ID",

"G"."Path",

"G"."Length",

"G"."FullHash",

"G"."Lastmodified",

"G"."FirstMetaBlockHash",

"H"."Hash" AS "MetablocklistHash"

FROM

(

SELECT

"B"."BlocksetID",

"B"."ID",

"B"."Path",

"D"."Length",

"D"."FullHash",

"A"."Lastmodified",

"F"."Hash" AS "FirstMetaBlockHash",

"C"."BlocksetID" AS "MetaBlocksetID"

FROM

"FilesetEntry" A,

"FixedFile" B,

"Metadataset" C,

"Blockset" D,

"BlocksetEntry" E,

"Block" F

WHERE

"A"."FileID" = "B"."ID"

AND "B"."MetadataID" = "C"."ID"

AND "C"."BlocksetID" = "D"."ID"

AND "E"."BlocksetID" = "C"."BlocksetID"

AND "E"."BlockID" = "F"."ID"

AND "E"."Index" = 0

AND ("B"."BlocksetID" = -100 OR "B"."BlocksetID" = -200)

AND "A"."FilesetID" = 1415

) G

LEFT OUTER JOIN

"BlocklistHash" H

ON

"H"."BlocksetID" = "G"."MetaBlocksetID"

ORDER BY

"G"."Path", "H"."Index"

))

The query is multi-purpose, and far more than needed to get a count, so next step might be to simplify.

You can see that it’s looking at lots of things though, and one of those things may have changed lately.