The slowest-running part seems to be the INSERT into SQLite. It's very bursty, and the Duplicati process is only using 25% of the CPU; not sure if there's anything to tweak there. The machine isn't stressed by any measure.

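It's well known that SQLite doesn't use multiple cores efficiently: a single query runs on one thread, so on a four-core machine, for example, a process sitting at about 25% CPU may simply have one core fully busy.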

Your bursty INSERTs are probably the ones I tried to avoid with the change you are currently testing.

For 'normal' dblock files (those whose index files are correct), the remaining work is only searches (SELECTs), which could run faster with a larger cache. If the tables are too big for the available memory (the DB cache), the database engine has to create temporary structures on disk and join them.
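To experiment with the cache on a copy of the database: SQLite sets its page cache per connection with `PRAGMA cache_size` (a negative value is read as KiB rather than pages), and `PRAGMA temp_store = MEMORY` asks it to keep temporary tables and indices in RAM instead of on disk. Below is a minimal Python sketch of both; the helper name `open_with_cache` and the sizes are illustrative assumptions, and this does not change how Duplicati itself opens its database.

```python
import sqlite3


def open_with_cache(path: str, cache_kib: int) -> sqlite3.Connection:
    """Open an SQLite database with a larger page cache and in-memory temp storage.

    Both settings apply only to this connection and are not saved in the file.
    """
    if cache_kib <= 0:
        raise ValueError("cache_kib must be a positive number of KiB")
    conn = sqlite3.connect(path)
    # A negative cache_size is interpreted by SQLite as a size in KiB, not pages.
    conn.execute(f"PRAGMA cache_size = -{int(cache_kib)}")
    # Keep temporary tables/indices (e.g. for sorts and joins) in RAM instead of on disk.
    conn.execute("PRAGMA temp_store = MEMORY")
    return conn
```

For example, `open_with_cache("copy-of-job.sqlite", 512 * 1024)` would ask for roughly 512 MiB of cache (the file name is hypothetical). Whether this helps depends on how much RAM the machine really has free.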

Pass 3 of 3, processing blocklist volume 3109 of 7481

Yeah, these seem to be very time-intensive:

Starting - ExecuteNonQuery: INSERT INTO "Block" ("Hash", "Size", "VolumeID") SELECT "FullHash" AS "Hash", "Length" AS "Size", -1 AS "VolumeID" FROM (SELECT "A"."FullHash", "A"."Length", CASE WHEN "B"."Hash" IS NULL THEN '' ELSE "B"."Hash" END AS "Hash", CASE WHEN "B"."Size" is NULL THEN -1 ELSE "B"."Size" END AS "Size" FROM (SELECT DISTINCT "FullHash", "Length" FROM (SELECT "BlockHash" AS "FullHash", "BlockSize" AS "Length" FROM ( SELECT "E"."BlocksetID", "F"."Index" + ("E"."BlocklistIndex" * 3200) AS "FullIndex", "F"."BlockHash", MIN(102400, "E"."Length" - (("F"."Index" + ("E"."BlocklistIndex" * 3200)) * 102400)) AS "BlockSize", "E"."Hash", "E"."BlocklistSize", "E"."BlocklistHash" FROM ( SELECT * FROM ( SELECT "A"."BlocksetID", "A"."Index" AS "BlocklistIndex", MIN(3200 * 32, ((("B"."Length" + 102400 - 1) / 102400) - ("A"."Index" * (3200))) * 32) AS "BlocklistSize", "A"."Hash" AS "BlocklistHash", "B"."Length" FROM "BlocklistHash" A, "Blockset" B WHERE "B"."ID" = "A"."BlocksetID" ) C, "Block" D WHERE "C"."BlocklistHash" = "D"."Hash" AND "C"."BlocklistSize" = "D"."Size" ) E, "TempBlocklist_9776885F6C1EE34DACA52241B65841F9" F WHERE "F"."BlocklistHash" = "E"."Hash" ORDER BY "E"."BlocksetID", "FullIndex" ) UNION SELECT "BlockHash", "BlockSize" FROM "TempSmalllist_EE75DD5954A7E640B9DA11FDB9840265" )) A LEFT OUTER JOIN "Block" B ON "B"."Hash" = "A"."FullHash" AND "B"."Size" = "A"."Length" ) WHERE "FullHash" != "Hash" AND "Length" != "Size"

Yes, that's it: these are the huge queries that are taking weeks.

*Days now

Thanks for the assist. Once the Repair has finished, we'll see whether the DB is usable or whether I need a Repair and Rebuild, and go from there.

Great, first job done.

I wonder if, before attempting to fix anything, you could grab one of the damaged dblock files and its associated index file (which can be found from the database via the IndexBlockLink table), decrypt them if necessary, and open them as zip files. Then list the contents (file names) of the dblock file, display the (lone) JSON file under the vol/ subdirectory of the index file, and list the files in the index file's list/ subdirectory. It could (maybe) shed some light on the kind of damage your system has suffered and (hopefully) lead to some insight into the root cause.
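If Python is handy, the listing part can also be done without unzipping by hand. This is a minimal sketch, assuming the dblock and dindex files have already been decrypted to plain `.zip` files; the function names and the script name are hypothetical, and it relies only on the layout described above (block files in the dblock; a JSON file under `vol/` and entries under `list/` in the dindex).

```python
import json
import sys
import zipfile


def list_dblock(dblock_zip) -> list:
    """Return the entry names inside a decrypted dblock zip."""
    with zipfile.ZipFile(dblock_zip) as zf:
        return zf.namelist()


def read_dindex(dindex_zip):
    """Return ({vol/ entry name: parsed JSON}, [list/ entry names]) for a decrypted dindex zip."""
    vols, lists = {}, []
    with zipfile.ZipFile(dindex_zip) as zf:
        for name in zf.namelist():
            if name.startswith("vol/"):
                vols[name] = json.loads(zf.read(name))
            elif name.startswith("list/"):
                lists.append(name)
    return vols, lists


if __name__ == "__main__":
    # Hypothetical usage: python inspect_volumes.py <dblock.zip> <dindex.zip>
    if len(sys.argv) == 3:
        print("dblock entries:")
        for name in list_dblock(sys.argv[1]):
            print("  " + name)
        vols, lists = read_dindex(sys.argv[2])
        for name, content in vols.items():
            print(name + ":")
            print(json.dumps(content, indent=2))
        print("list/ entries:")
        for name in lists:
            print("  " + name)
```

Saved under any name, it can be run with the two decrypted zip paths and the output pasted into a post.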

List Broken Files seems to show exactly the same list of files for each Recovery Point that has an issue:

6/26/2023 5:00:00 PM (476 match(es))

7/3/2023 9:37:36 AM (476 match(es))

7/5/2023 3:02:13 AM (476 match(es))

7/6/2023 5:00:00 PM (476 match(es))

Requesting one of the listed files from the source path gives a readable file, and I don't recall any errors in the backup jobs for it, so this is a bit of a mystery.

As I said in my previous post, without seeing what's inside the 'bad' files I can't speculate on what could have happened.

Does “requesting” mean a restore? If so, you should use this option to test correctly:

Duplicati will attempt to use data from source files to minimize the amount of downloaded data. Use this option to skip this optimization and only use remote data.

Of course, don't restore over a valuable source file; restore to a different folder that doesn't already contain such a file.

In terms of studying the issue, are you comfortable making a copy of your database and looking inside it (with guidance), for example with DB Browser for SQLite? If not, posting a link to a DB bug report would let us look too (the view is not as good, since it's sanitized for privacy, but it's one option, and there are a few others).
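For anyone who prefers a script to DB Browser for SQLite, here is a minimal Python sketch that makes a consistent copy with SQLite's online backup API (`Connection.backup`) and then counts the rows in every table of the copy. The function names and file paths are placeholders; it is probably safest to run it while no backup job is running, and worth double-checking the source path, since `sqlite3.connect` silently creates an empty database if the file does not exist.

```python
import sqlite3


def copy_database(src_path: str, dst_path: str) -> None:
    """Copy an SQLite database consistently using SQLite's online backup API."""
    src = sqlite3.connect(src_path)
    dst = sqlite3.connect(dst_path)
    try:
        src.backup(dst)
    finally:
        dst.close()
        src.close()


def table_row_counts(path: str) -> dict:
    """Return {table name: row count} for every table in the database at path."""
    conn = sqlite3.connect(path)
    try:
        names = [row[0] for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
        return {name: conn.execute(f'SELECT COUNT(*) FROM "{name}"').fetchone()[0]
                for name in names}
    finally:
        conn.close()
```

For example, `copy_database("path/to/job.sqlite", "job-copy.sqlite")` followed by `print(table_row_counts("job-copy.sqlite"))`; any further poking around then happens on the copy.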

I'm going to have to take a break and revisit debugging this later. I don't mind checking the DB when I have time again, or giving you access to look at it if a rapid response is preferred.

If the former, leave the steps/guide and I'll check in when I get to it.

Too bad. I'd have liked to have a go at understanding why backends get as severely damaged as this one. My money is on compact, but not a big amount. Seeing how it is damaged could be enlightening (or not, but if one doesn't look, there is no chance of knowing).

Like I said, I don't mind having another go at it when I have another gap. I'm keeping a copy of the storage and the repaired-ish SQLite DB to poke at.

An update here: after the repair/rebuild, the backup job is running again and the number of versions looks as expected.

I still have a copy of the broken SQLite DB, along with a copy of Canary and a copy of the destination file store, for when I have time to take a stab at it.

@gpatel-fr, do you have a post somewhere with the steps for finding the bad data in the SQLite DB?

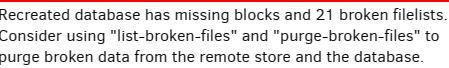

Is this the one where list-broken-files reported files? I'd still worry about that one. Also see the note on the restore test.

Which one is this? The one that finished in days thanks to special help, or some partial earlier effort?

What’s it on? It might be useful to look at. Easy access would be best. Ideal is unencrypted local files…

Earlier, I was plugging a Python script which examines dlist and dindex files to look for missing data.

Nice thing about it is that it can be changed or made very verbose if we need to track something down.
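To illustrate the kind of check such a script does (this is not that script), here is a hypothetical Python sketch that collects the blocklist hashes referenced by dlist files and reports any that no dindex file lists as stored in a dblock. The JSON layout it reads (`filelist.json` entries with a `blocklists` array, and `vol/` entries with a `blocks` array of `hash`/`size` objects) is an assumption about the file formats, and it expects decrypted zip files.

```python
import json
import zipfile
from typing import Iterable, Set


def blocklist_refs_from_dlist(dlist_zip) -> Set[str]:
    """Blocklist hashes referenced by one decrypted dlist zip.

    ASSUMPTION: the dlist contains 'filelist.json', a JSON array whose
    multi-block file entries carry a 'blocklists' array of base64 hashes.
    """
    with zipfile.ZipFile(dlist_zip) as zf:
        entries = json.loads(zf.read("filelist.json"))
    refs = set()
    for entry in entries:
        refs.update(entry.get("blocklists", []))
    return refs


def block_hashes_from_dindex(dindex_zip) -> Set[str]:
    """Block hashes that one decrypted dindex zip says are stored in its dblock.

    ASSUMPTION: each vol/ entry is JSON with a 'blocks' array of {'hash', 'size'}.
    """
    hashes = set()
    with zipfile.ZipFile(dindex_zip) as zf:
        for name in zf.namelist():
            if name.startswith("vol/"):
                volume = json.loads(zf.read(name))
                hashes.update(block["hash"] for block in volume.get("blocks", []))
    return hashes


def missing_blocklists(dlist_zips: Iterable, dindex_zips: Iterable) -> Set[str]:
    """Blocklist hashes referenced by any dlist but not listed in any dindex."""
    referenced = set().union(*(blocklist_refs_from_dlist(z) for z in dlist_zips))
    available = set().union(*(block_hashes_from_dindex(z) for z in dindex_zips))
    return referenced - available
```

Passing lists of decrypted dlist and dindex zip paths would give the set of blocklist hashes with no index entry; an empty set means every referenced blocklist was found.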

We're pretty sure some blocks got lost somehow, but the history view is limited. The database has a bit of history too, although I'm not sure there's any troubleshooting flow chart that leads straight to an answer of how it broke. Finding out how it's currently broken is easier, especially if you got list-broken-files to name paths.

I think these days you need to raise console-log-level, or you won't get the output you might want.

Maybe someone else has a better answer. I’m trying to understand what resources we have to look at…

Before that, was everything running fine, and then the recreate issue came as a surprise? I wish we could forecast these.

The bad data is not so much in the SQLite database; it's on the backend.

Assume one of the bad dblock files is named duplicati-b231b21ca170042aeb26b60ce885f5849.dblock.zip.aes.

Run this in the repaired database:

select name from remotevolume where id = (select indexvolumeid from indexblocklink where blockvolumeid=(select id from remotevolume where name='duplicati-b231b21ca170042aeb26b60ce885f5849.dblock.zip.aes'));

This should give you the name of the index file.
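The same lookup can be scripted against a copy of the database. This Python sketch runs that query with a bound parameter instead of a pasted file name, and uses `IN` rather than `=` so that it returns every linked index file (an extra index file for the same dblock would itself be interesting). The function name is hypothetical; the table and column names are the ones in the query.

```python
import sqlite3

# Same lookup as the query in the post, with IN so that every linked index file is returned.
INDEX_FOR_DBLOCK = """
SELECT "Name" FROM "Remotevolume"
WHERE "ID" IN (
    SELECT "IndexVolumeID" FROM "IndexBlockLink"
    WHERE "BlockVolumeID" IN (
        SELECT "ID" FROM "Remotevolume" WHERE "Name" = ?
    )
)
ORDER BY "Name"
"""


def index_files_for(conn: sqlite3.Connection, dblock_name: str) -> list:
    """Return the names of the dindex files linked to the given dblock file name."""
    return [row[0] for row in conn.execute(INDEX_FOR_DBLOCK, (dblock_name,))]
```

For example, with `conn = sqlite3.connect("copy-of-job.sqlite")` (hypothetical path), `index_files_for(conn, "duplicati-b231b21ca170042aeb26b60ce885f5849.dblock.zip.aes")` returns a list that is empty if no link exists.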

Grab these 2 files and decrypt them using SharpAESCrypt.exe:

SharpAESCrypt.exe d youraeskey duplicati-b231b21ca170042aeb26b60ce885f5849.dblock.zip.aes duplicati-b231b21ca170042aeb26b60ce885f5849.dblock.zip

Do the same with the index file.

You should get 2 zip files. Unzip them into 2 separate directories so the contents don't get mixed. Then post here the listing (using dir) of the dblock file's contents and, for the index file, the listing (using dir) of the list/ subdirectory and the contents (using type) of the file in the vol/ subdirectory.
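Once both files are decrypted, the comparison itself can be automated. Here is a minimal Python sketch that compares the block entries inside a dblock zip with the blocks its dindex claims it holds. It assumes the vol/ JSON has a `blocks` array of objects with a `hash` field, and that block entry names in the dblock are base64 hashes, possibly in URL-safe form; both are assumptions, which is why the hashes are normalised before comparing. The function names are hypothetical.

```python
import json
import zipfile


def _normalise(b64_hash: str) -> str:
    """Map standard and URL-safe base64 spellings of a hash to one form.

    ASSUMPTION: dblock entry names may be URL-safe base64 while the vol/ JSON
    uses standard base64; padding is dropped so either spelling compares equal.
    """
    return b64_hash.replace("+", "-").replace("/", "_").rstrip("=")


def compare_volume(dblock_zip, dindex_zip) -> dict:
    """Compare the blocks present in a dblock zip with those its dindex lists."""
    with zipfile.ZipFile(dblock_zip) as zf:
        in_dblock = {_normalise(n) for n in zf.namelist() if n != "manifest"}
    in_index = set()
    with zipfile.ZipFile(dindex_zip) as zf:
        for name in zf.namelist():
            if name.startswith("vol/"):
                for block in json.loads(zf.read(name)).get("blocks", []):
                    in_index.add(_normalise(block["hash"]))
    return {
        "only_in_dblock": sorted(in_dblock - in_index),
        "only_in_index": sorted(in_index - in_dblock),
    }
```

Non-empty lists on either side would point at a mismatch between the dblock and its index, which is the kind of damage the listings are meant to reveal.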

Is this the one where list-broken-files reported files? I'd still worry about that one. Also see the note on the restore test.

Yes

I've yet to run a restore test; it's also on the to-do list.

Which one is this? The one that finished in days thanks to special help, or some partial earlier effort?

The one that finished in days.

What’s it on? It might be useful to look at. Easy access would be best. Ideal is unencrypted local files…

Unencrypted local files on an external disk here

Earlier, I was plugging a Python script which examines dlist and dindex files to look for missing data.

Nice thing about it is that it can be changed or made very verbose if we need to track something down.

I need to install Python on this Windows desktop and take a look at this too.

Before that, was everything running fine, then the surprise was recreate issue? I wish we could forecast.

No complaints. There were occasional backup errors when a source folder went MIA or a backup was interrupted, but a re-run would go through fine.

I am currently reviewing the compact failure problem and just noticed that Recreate also works, but it downloads dblock files, which can be slow on a larger backup. So the root cause of many of these reports of repairs taking days may be just that, combined with defective index files holding only a manifest, as was said in the linked thread.
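Since the destination in this case is unencrypted local files, one quick way to look for that symptom is to scan the dindex files for zips that contain nothing but the manifest. A minimal Python sketch; it assumes the manifest entry is named `manifest` and that the index files end in `.dindex.zip`, and the function and script names are hypothetical.

```python
import sys
import zipfile
from pathlib import Path


def is_manifest_only(zip_path) -> bool:
    """True if the zip has no entries other than 'manifest'.

    ASSUMPTION: the manifest is stored as a top-level entry named 'manifest';
    a healthy dindex should also have vol/ (and possibly list/) entries.
    """
    with zipfile.ZipFile(zip_path) as zf:
        return all(name == "manifest" for name in zf.namelist())


def find_manifest_only(folder: str) -> list:
    """Names of *.dindex.zip files in folder that contain only a manifest."""
    return sorted(p.name for p in Path(folder).glob("*.dindex.zip") if is_manifest_only(p))


if __name__ == "__main__":
    # Hypothetical usage: python find_manifest_only.py <destination folder>
    if len(sys.argv) == 2:
        for name in find_manifest_only(sys.argv[1]):
            print(name)
```

Each printed name would be a dindex file with no `vol/` or `list/` content, i.e. one that forces Recreate to fall back to downloading dblock files.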

Are compacts automatic? I've never run one.