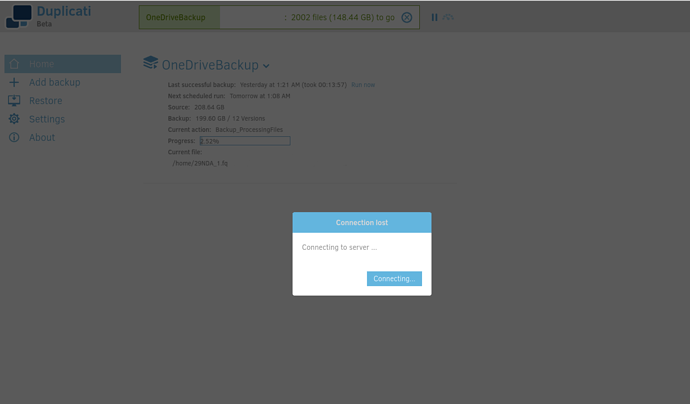

Whenever I try to back up multiple files totaling more than 200 GB, I get a connection error about halfway through. Is there anything I can check or do to fix this? The issue does not occur when Duplicati is just checking through an existing backup.

Welcome to the forums MooCow27.

200GB isn’t much and many people are backing up way more than that (multiple TB) so size shouldn’t be the cause.

What’s running the backup? A PC, Mac, Linux, NAS?

Any errors listed in the log file would probably be helpful.

Thanks for the help. I am on a Linux system, backing up a virtual drive mounted through rclone. Duplicati works fine for backing up small changes, but on these larger backups it always stops somewhere in between and says connecting. It can’t be that the system itself is idling, as the backup normally runs at 1am and completes fine. It also seems to stop on larger files; here I think it was trying to back up a ~50 GB file, but I need to confirm that. Also, to get Duplicati to work again after one of these lost connections, I need to completely restart the system. The only errors that have ever come up in the past while using this are certificate errors, but I don’t think those are related. Any advice? Thanks!
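One way to confirm whether the backup stalls on the biggest files is to list the largest files under the source first. This is only a rough sketch: the function name `largest_files` is made up for illustration, and the directory you pass in would be your own rclone mount point.

```python
import heapq
import os


def largest_files(root, count=10):
    """Return up to `count` (size_in_bytes, path) pairs under root, largest first."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                sizes.append((os.path.getsize(path), path))
            except OSError:
                # Files on a cloud mount can vanish or fail to stat; skip them.
                continue
    # nlargest sorts by size first because size is the first tuple element.
    return heapq.nlargest(count, sizes)
```

Calling `largest_files("/path/to/mount")` (placeholder path) and comparing the top entries with the file Duplicati was processing when it stopped should show whether the ~50 GB file is the common factor.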

Are you trying to use a mounted cloud service (Google Drive) as the source and another (OneDrive) as the destination?

If so, that would explain things: Duplicati wants/needs the source data to be local. You can back up to a cloud service but not from a cloud service.

I am trying to back up a cloud drive (OneDrive) to a local hard drive. What it should be doing is copying chunks into memory first, then saving them to the local drive. Maybe that is a bit buggy, though? I mean, it works all the time when saving small updates, but it doesn’t like it when I make lots of file changes or have to create a new initial backup.

Sadly, that is the wrong direction for Duplicati. As you say, it should or seems to work, but at the end of the day it won’t work reliably.

Some day, maybe Duplicati could be made to back up a cloud service but as it stands, it cannot.

That may very well be the case, but what causes the connection issue? There has to be a cause behind it.

The cause is simple: it wasn’t made to work that way. There are likely many technical reasons why it won’t work properly in this scenario.

If I had to speculate, one of many likely issues would be that while virtual drives “look right”, they are not the same as real drives and they live in RAM. If you had a TB of RAM then maybe it would work a bit better, but I’m doubtful you have a TB of RAM in that machine. OK, you probably don’t need a whole TB, but you’d need more RAM than your largest file size plus room for overhead and all the other programs on the computer. So if you have a 50GB file that you wanted to back up, you would probably need around 55GB of RAM in that computer, leaving 5GB for the OS and apps. Maybe if you didn’t use deduplication that would change things, I don’t know…

I do know you’re not the first and probably won’t be the last to ask. As others have been advised, it’s just not how Duplicati works; it would require a massive rewrite to make that kind of feature functional.

How’s your C# knowledge? If you want to volunteer to help rewrite the backup engine then have at it, we could sure use the help. Volunteers are in short supply, and as such development of those sorts of features is quite slow.

A quick google search for “backup onedrive to external drive” shows a few different programs that will make a backup of your OneDrive. I can’t recommend any but you have a few options.

Do you have Duplicati installed as a systemd service running as root? That would be typical.

systemctl status duplicati shows status, maybe messages. Or watch journalctl -f

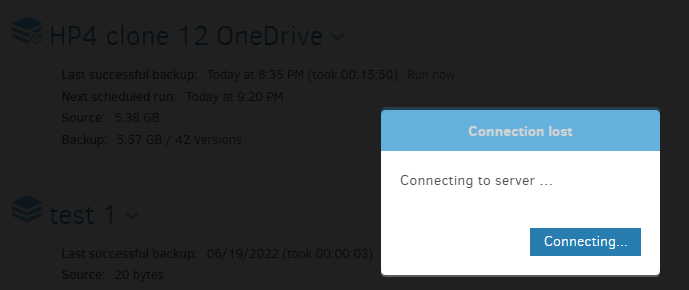

What happens if you go to Duplicati in a new tab when the old tab says Connection lost?

systemctl start duplicati should start Duplicati, unless some problem grew persistent.

I’m suspicious of the virtual drive too, but let’s try to find what’s up and what’s not (then why).

If overloading the drive is part of it, there are some throttle controls that can slow writing to it.

You could also try to catch where About → Show log → Live → Retry ends (if it ends), or set log-file=<path> log-file-log-level=Retry to do the same thing without relying on a working GUI.
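Once a log file is being written, the interesting part is whatever was logged just before the stall. A minimal sketch for pulling the tail of that file follows; `last_log_lines` is a hypothetical helper name, and the path is whatever you gave to log-file. It assumes nothing about the log's line format.

```python
from collections import deque


def last_log_lines(path, count=20):
    """Return the last `count` lines of a text log file, without trailing newlines."""
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        # A deque with maxlen keeps only the newest lines while streaming the file,
        # so a large log does not have to fit in memory.
        return [line.rstrip("\n") for line in deque(handle, maxlen=count)]
```

Running this right after the GUI says Connection lost, and again a minute later, would also show whether Duplicati is still writing to the log or has really stopped.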

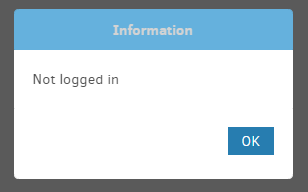

The reason I ask is that if you have a GUI password set, sometimes it times out and looks like:

Contrast is low, but in this case that’s a bit of my Duplicati home screen (probably idle) under the popup.

If this is what you’re seeing (still need the other tests), then browser refresh will unconfuse the GUI.

If Duplicati is down when you refresh or try http://localhost:8200/, the browser can’t get the web page.
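The same check can be done without a browser, which helps tell a confused GUI apart from a server that is really down. This is a small sketch using only the Python standard library; the function name `duplicati_responds` is invented here, and the default URL is the one mentioned above.

```python
import urllib.error
import urllib.request


def duplicati_responds(url="http://localhost:8200/", timeout=5):
    """Return True if any HTTP server answers at `url`, False if the connection fails."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # An HTTP error status (e.g. a login requirement) still means a server answered.
        return True
    except OSError:
        # URLError is a subclass of OSError; this covers refused connections and timeouts.
        return False
```

If this returns True while the old tab still says Connection lost, a browser refresh is probably all that is needed; if it returns False, the service itself has stopped answering.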

That explains why it was erroring out on larger files, as the bulk of my files are small. I wonder, then, could I simply fix this by assigning larger swap files, that is, using a chunk of a hard drive as RAM?