The following error looks like upload in the SSHNET library didn’t finish quickly enough.

Looking at its source code, it looks like there’s one 30 second timeout used very widely.

Unlike the http timeout, it’s not for total transfer, but times out smaller pieces of it.

Duplicati can set SSHNET timeout higher, but it can’t overcome a serious network failure.

- Febr. 2019 05:10: Failed while executing “Backup” with id: 1

…

—> (Inner Exception #1) System.AggregateException: One or more errors occurred. —> Renci.SshNet.Common.SshOperationTimeoutException: Session operation has timed out

…

at Renci.SshNet.SftpClient.UploadFile (System.IO.Stream input, System.String path, System.Action`1[T] uploadCallback) [0x00000] in <502177f7126d48e4be01a83c8cdb4c79>:0

at Duplicati.Library.Backend.SSHv2.Put (System.String remotename, System.IO.Stream stream) [0x00012] in <92780ed9b8c74a0ab4415f69daf23b10>:0

The deletions seen look somewhat familiar to me. Duplicati is cleaning up files that had

upload failures. Retries are done until this file uploads or the retry limit is reached.

When a file finally uploads, the ones that didn’t upload are not needed, so are deleted.

What’s a little non-obvious is the retry is done under a different name to avoid bugs in

some servers. You can see a retry in Job --> Show log --> Remote by clicking on the line

to see if has the same Size and Hash as the previous put. If so, it’s probably the same.

14. Febr. 2019 08:40: delete duplicati-b50b6375edffb4740a3af98cdc4554014.dblock.zip.aes

14. Febr. 2019 08:40: delete duplicati-bd43e925259994b7f95a765b071942c78.dblock.zip.aes

14. Febr. 2019 08:40: delete duplicati-bdb54b57f1dd14d0194bfd5a330ee1a5a.dblock.zip.aes

14. Febr. 2019 08:40: delete duplicati-b913a909572e3454c93ba592f6623b67c.dblock.zip.aes

14. Febr. 2019 08:40: delete duplicati-bb637e6a866134985a9744a84a0cec14b.dblock.zip.aes

14. Febr. 2019 08:40: delete duplicati-be6367301c09b474b9edaf16988eacd5b.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-b17596617ef624f89a57a522e0952e0e3.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-b1f9066d978664320a83df7644d69b737.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-bbda01d07828447469f51b118be465223.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-be0f824b25ba943318e3b01edfbe81de5.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-b196a6593893e4aa0ac9e7840e37ff309.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-bb0e9396f551a4953950129ed10c968d0.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-b175742496b684c4c8dc69660ccec9c38.dblock.zip.aes

14. Febr. 2019 05:11: list

Above were the list to see what’s there, and the deletes to remove the now-excess files.

Below I’ll take each delete and match it up to the put (or series of puts) uploading it.

I don’t have full data, but repeated put repeats of the same type are a sign of retries.

The try # is a guess. I expected 5, got 6. You can check Size and Hash to confirm retry.

14. Febr. 2019 08:40: delete duplicati-b50b6375edffb4740a3af98cdc4554014.dblock.zip.aes

14. Febr. 2019 05:10: put duplicati-bb637e6a866134985a9744a84a0cec14b.dblock.zip.aes

14. Febr. 2019 05:08: put duplicati-be6367301c09b474b9edaf16988eacd5b.dblock.zip.aes

14. Febr. 2019 05:06: put duplicati-b50b6375edffb4740a3af98cdc4554014.dblock.zip.aes (try 4?)

14. Febr. 2019 08:40: delete duplicati-bd43e925259994b7f95a765b071942c78.dblock.zip.aes

14. Febr. 2019 05:10: put duplicati-bb637e6a866134985a9744a84a0cec14b.dblock.zip.aes

14. Febr. 2019 05:08: put duplicati-be6367301c09b474b9edaf16988eacd5b.dblock.zip.aes

14. Febr. 2019 05:06: put duplicati-b50b6375edffb4740a3af98cdc4554014.dblock.zip.aes

14. Febr. 2019 05:04: put duplicati-bd43e925259994b7f95a765b071942c78.dblock.zip.aes (try 3?)

14. Febr. 2019 08:40: delete duplicati-bdb54b57f1dd14d0194bfd5a330ee1a5a.dblock.zip.aes

14. Febr. 2019 05:10: put duplicati-bb637e6a866134985a9744a84a0cec14b.dblock.zip.aes

14. Febr. 2019 05:08: put duplicati-be6367301c09b474b9edaf16988eacd5b.dblock.zip.aes

14. Febr. 2019 05:06: put duplicati-b50b6375edffb4740a3af98cdc4554014.dblock.zip.aes

14. Febr. 2019 05:04: put duplicati-bd43e925259994b7f95a765b071942c78.dblock.zip.aes

14. Febr. 2019 05:02: put duplicati-bdb54b57f1dd14d0194bfd5a330ee1a5a.dblock.zip.aes (try 2?)

14. Febr. 2019 08:40: delete duplicati-b913a909572e3454c93ba592f6623b67c.dblock.zip.aes

14. Febr. 2019 05:10: put duplicati-bb637e6a866134985a9744a84a0cec14b.dblock.zip.aes

14. Febr. 2019 05:08: put duplicati-be6367301c09b474b9edaf16988eacd5b.dblock.zip.aes

14. Febr. 2019 05:06: put duplicati-b50b6375edffb4740a3af98cdc4554014.dblock.zip.aes

14. Febr. 2019 05:04: put duplicati-bd43e925259994b7f95a765b071942c78.dblock.zip.aes

14. Febr. 2019 05:02: put duplicati-bdb54b57f1dd14d0194bfd5a330ee1a5a.dblock.zip.aes

14. Febr. 2019 04:55: put duplicati-b913a909572e3454c93ba592f6623b67c.dblock.zip.aes (try 1?)

14. Febr. 2019 08:40: delete duplicati-bb637e6a866134985a9744a84a0cec14b.dblock.zip.aes

14. Febr. 2019 05:10: put duplicati-bb637e6a866134985a9744a84a0cec14b.dblock.zip.aes (try 6?)

14. Febr. 2019 08:40: delete duplicati-be6367301c09b474b9edaf16988eacd5b.dblock.zip.aes

14. Febr. 2019 05:10: put duplicati-bb637e6a866134985a9744a84a0cec14b.dblock.zip.aes

14. Febr. 2019 05:08: put duplicati-be6367301c09b474b9edaf16988eacd5b.dblock.zip.aes (try 5?)

14. Febr. 2019 05:11: delete duplicati-b17596617ef624f89a57a522e0952e0e3.dblock.zip.aes

14. Febr. 2019 04:16: put duplicati-bf52956e8b83a46d9875d38d78b3d99c3.dblock.zip.aes

14. Febr. 2019 04:10: put duplicati-b17596617ef624f89a57a522e0952e0e3.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-b1f9066d978664320a83df7644d69b737.dblock.zip.aes

13. Febr. 2019 04:17: put duplicati-ba9798c78f9e943fb8ce701cb2bb15fc1.dblock.zip.aes

13. Febr. 2019 04:09: put duplicati-b1f9066d978664320a83df7644d69b737.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-bbda01d07828447469f51b118be465223.dblock.zip.aes

12. Febr. 2019 04:18: put duplicati-b453ef39c07b3411db8dcbc6ecbc80608.dblock.zip.aes

12. Febr. 2019 04:17: put duplicati-bbda01d07828447469f51b118be465223.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-be0f824b25ba943318e3b01edfbe81de5.dblock.zip.aes

11. Febr. 2019 04:19: put duplicati-bfd4a5a0f1b0c4b6ab9febe2fdd5b1684.dblock.zip.aes

11. Febr. 2019 04:17: put duplicati-be0f824b25ba943318e3b01edfbe81de5.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-b196a6593893e4aa0ac9e7840e37ff309.dblock.zip.aes

10. Febr. 2019 04:21: put duplicati-b708b181f7f33469bb6230acd15cc3c72.dblock.zip.aes

10. Febr. 2019 04:18: put duplicati-b196a6593893e4aa0ac9e7840e37ff309.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-bb0e9396f551a4953950129ed10c968d0.dblock.zip.aes

9. Febr. 2019 04:22: put duplicati-b5ef6f2fabd3a4ab6b4f6c8ae0be287a8.dblock.zip.aes

9. Febr. 2019 04:22: put duplicati-bb0e9396f551a4953950129ed10c968d0.dblock.zip.aes

14. Febr. 2019 05:11: delete duplicati-b175742496b684c4c8dc69660ccec9c38.dblock.zip.aes

8. Febr. 2019 04:23: put duplicati-b968ec355103a46d8ba3296f088c649ae.dblock.zip.aes

8. Febr. 2019 04:22: put duplicati-b175742496b684c4c8dc69660ccec9c38.dblock.zip.aes

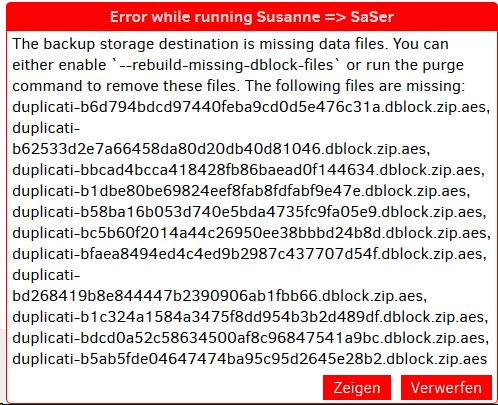

The 11 missing files list in the red notification names the first 11 dblock files put.

I’m not sure why they’re missing unless there are some deletes or loss not in the log.

Perhaps you could look on the destination to see whether they’re present but not seen.

Or perhaps the database bug report could be saved somewhere, then shared using a link.

Or you could open it with a DB browser and look at the Remoteoperation table yourself.

Just having the logs so far has been helpful to get some idea of what’s going on here.

6. Febr. 2019 17:18: put duplicati-b6d794bdcd97440feba9cd0d5e476c31a.dblock.zip.aes

6. Febr. 2019 17:26: put duplicati-b62533d2e7a66458da80d20db40d81046.dblock.zip.aes

6. Febr. 2019 17:34: put duplicati-bbcad4bcca418428fb86baead0f144634.dblock.zip.aes

6. Febr. 2019 17:43: put duplicati-b1dbe80be69824eef8fab8fdfabf9e47e.dblock.zip.aes

6. Febr. 2019 17:55: put duplicati-b58ba16b053d740e5bda4735fc9fa05e9.dblock.zip.aes

6. Febr. 2019 18:07: put duplicati-bc5b60f2014a44c26950ee38bbbd24b8d.dblock.zip.aes

6. Febr. 2019 18:19: put duplicati-bfaea8494ed4c4ed9b2987c437707d54f.dblock.zip.aes

6. Febr. 2019 18:31: put duplicati-bd268419b8e844447b2390906ab1fbb66.dblock.zip.aes

6. Febr. 2019 18:42: put duplicati-b1c324a1584a3475f8dd954b3b2d489df.dblock.zip.aes

6. Febr. 2019 18:50: put duplicati-bdcd0a52c58634500af8c96847541a9bc.dblock.zip.aes

6. Febr. 2019 19:02: put duplicati-b5ab5fde04647474ba95c95d2645e28b2.dblock.zip.aes

Assuming these are the default 50MB dblock files that take about 8 minutes, that’s an

uplink speed of maybe 900 kilobits/second. Is that what you’re expecting? You did say

1 megabit, so maybe that’s expected. The problem is that it might also be unreliable.

The occasional retries early on are slightly worrisome, but the fatal end is more so.

You could also run ping tests to see if packets are being lost. That can slow things.

Raising --ssh-operation-timeout to 10 minutes (probably 10m) might allow more retries

at the TCP level (if network goes bad) while reducing the retries Duplicati must try.

C:\Program Files\Duplicati 2>Duplicati.CommandLine.exe help ssh-operation-timeout

--ssh-operation-timeout (Timespan): Sets the operation timeout value

Use this option to manage the internal timeout for SSH operations. If this

options is set to zero, the operations will not time out

* default value: 0

C:\Program Files\Duplicati 2>

I’m not sure I agree with the “will not time out” part. Your backup didn’t agree either.

Unfortunately, I doubt I can lead you through making repair fix everything up nicely.

There was an effort to rewrite that code to improve it. I’m not sure where it stands.

Unless someone else jumps in with confidence, it might be best to repeat that backup.

I think you could probably wipe out the destination and the job database and restart.

Probably doing an Export in advance would be best in case you need to Import instead.

Please also test and tune your network as well as possible to avoid a failure midway.

Initial backup is possibly a special case, but a normal backup interruption should do

resumption on next backup after a scan to check the backup status so it can continue.