Hello,

please take a look at the provided screenshot.

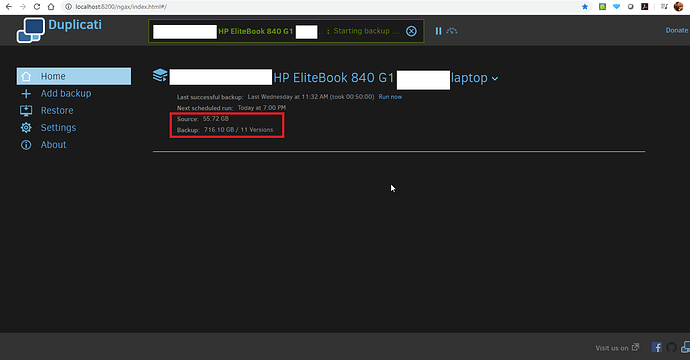

It shows a huge disparity between source size and backup size, which makes me a little worried about the correctness of the backup data.

The source is set to the whole C: drive (1 TB in size), including Windows and excluding some volatile folders like TEMP.

The backup duration also went down from 5h daily to 0.75h daily.

Any advice on how to proceed? Does anyone have SQL to list what is in the source file set, independent of the information presented in the GUI?

Regards

What do you expect to see for the source size? How much data is on your C: drive? (I understood the “1TB” as being the size of the disk, not the amount of data on it.)

On page 3 click the three-dot menu to the right of “Source data” and select “Show advanced editor.” It will display your selections in text format.

Also while on that page, look at the Filters and Exclude sections to see if you have anything specified there.
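If you want an independent figure for how much data the source selection actually covers, to compare against what the GUI reports, a rough sketch like the one below can total it up. It is only an approximation under some assumptions: the function name `source_size` and the example paths are made up for illustration, it only understands whole-folder exclusions (not Duplicati's filter syntax), and files it cannot read are counted rather than sized.

```python
import os
import stat


def source_size(root, excluded=()):
    """Total the size of regular files under `root`, skipping excluded folders.

    Returns (total_bytes, file_count, unreadable_count).
    `excluded` is a list of folder paths to prune (hypothetical stand-in
    for the excludes configured in the backup job).
    """
    excluded_norm = {os.path.normcase(os.path.abspath(p)) for p in excluded}
    total_bytes = 0
    file_count = 0
    unreadable = 0

    def on_error(_err):
        # Called by os.walk when a directory cannot be listed.
        nonlocal unreadable
        unreadable += 1

    for dirpath, dirnames, filenames in os.walk(root, onerror=on_error):
        # Prune excluded folders in place so os.walk does not descend into them.
        dirnames[:] = [
            d for d in dirnames
            if os.path.normcase(os.path.abspath(os.path.join(dirpath, d)))
            not in excluded_norm
        ]
        for name in filenames:
            try:
                info = os.lstat(os.path.join(dirpath, name))
            except OSError:
                # Locked or permission-protected file: count it, skip its size.
                unreadable += 1
                continue
            if stat.S_ISREG(info.st_mode):
                total_bytes += info.st_size
                file_count += 1

    return total_bytes, file_count, unreadable
```

For example, `source_size("C:\\", excluded=["C:\\Windows\\Temp"])` (paths are placeholders) returns the byte total, the file count, and how many entries could not be read. Run it from an elevated prompt, otherwise many system folders will land in the unreadable count.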

Hello,

thank you for the reply.

I expect the source size to be the overall size of all files under the current definition of the source (not the size of the files uploaded in the last backup session), in my case around 800 GB of 1 TB.

Anyhow, I have traced the issue to failing snapshot creation ([GLOBALROOT error code 21]) and approx. 65500 warnings about access errors from AlphaVSS.Common.dll.

Looks like 16 GB of RAM is not sufficient in 2020.

Regards

I doubt it’s a RAM issue. Duplicati on Windows doesn’t seem to use much memory; on my machine it maxes out at about 150 MB. It uses more on other platforms due to the Mono runtime.

We should troubleshoot why Duplicati is failing to create a snapshot. But even if snapshot creation fails, it should still be able to back up most data: only files that are locked at that point will be skipped.