Former CrashPlan (CP) Home user here. I've found Duplicati to be a useful and powerful alternative to CP. It's not as easy to use yet, but with forums and threads like this, it'll get there.

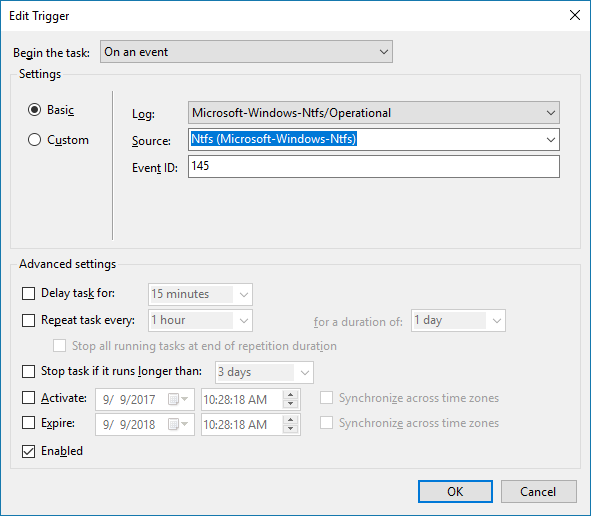

I'm on Windows 10 with Duplicati 2. One of my backup sets goes to a USB HDD that is normally disconnected. I found a Windows event that fires whenever the HDD is plugged in (see picture).

I set up a Windows Task Scheduler task that runs a script when this event fires. The script is a very simple BAT file containing my existing backup set, exported from the web interface as a command line. The script works: the backup starts when I plug in the drive. The problem is that I think it is building a second remote backup set instead of using the one created from the web interface. The script-triggered backup took far longer than the quick one I ran from the web interface, and the free space on the drive kept shrinking! It would be great to have it use the same set.
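One possible cause, not confirmed here, is that the command-line run uses a different local database than the web interface job, so it doesn't see what the job has already backed up. Below is a minimal Python sketch, assuming `Duplicati.CommandLine.exe` and its `--dbpath` option, that builds the backup command so it reuses the job's existing local database. Every path and URL in it (`DUPLICATI_CLI`, `STORAGE_URL`, `SOURCE_PATH`, `DB_PATH`) is a hypothetical placeholder. Substitute the values from your own exported command line, plus the database path listed for the job in the web interface.

```python
import subprocess
from pathlib import Path

# Hypothetical placeholders: replace with values from your own exported command line.
DUPLICATI_CLI = r"C:\Program Files\Duplicati 2\Duplicati.CommandLine.exe"
STORAGE_URL = r"file://E:\Backups\MyBackup"
SOURCE_PATH = r"C:\Users\me\Documents"
# Hypothetical: the local database path the web interface uses for this job.
DB_PATH = r"C:\Users\me\AppData\Local\Duplicati\EXAMPLE.sqlite"


def build_backup_command(cli, storage_url, source, dbpath, extra_options=()):
    """Return the argv for a CLI backup that reuses an existing local database."""
    args = [cli, "backup", storage_url, source, f"--dbpath={dbpath}"]
    # Skip any --dbpath already in the exported options so only one is passed.
    args.extend(opt for opt in extra_options if not opt.startswith("--dbpath="))
    return args


def main():
    # Refuse to start if the job's database is missing, rather than
    # silently letting the CLI work from a different one.
    if not Path(DB_PATH).exists():
        print(f"Local database not found, not starting backup: {DB_PATH}")
        return 1
    cmd = build_backup_command(DUPLICATI_CLI, STORAGE_URL, SOURCE_PATH, DB_PATH)
    return subprocess.run(cmd).returncode


if __name__ == "__main__":
    main()
```

The same `--dbpath=...` argument could also be added directly to the BAT file. The sketch only makes the check explicit before the backup starts.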

I hope this helps someone with more coding experience than I have.