I think this phase includes cleaning up after any deletion, including compact in any of its forms.

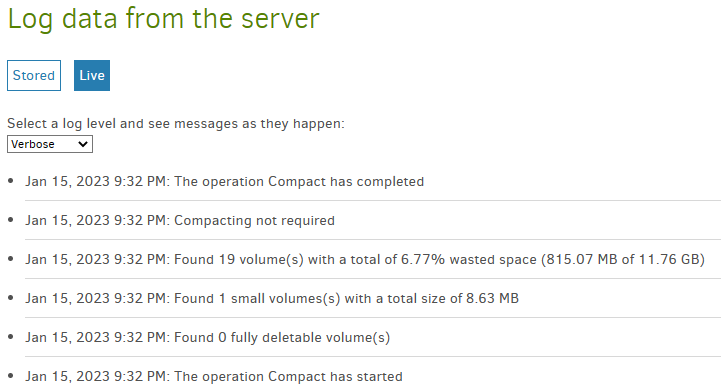

There is a lot more information available in About → Show log → Live → Information. An example:

```
2023-01-09 08:51:54 -05 - [Verbose-Duplicati.Library.Main.Database.LocalDeleteDatabase-FullyDeletableCount]: Found 0 fully deletable volume(s)
2023-01-09 08:51:54 -05 - [Verbose-Duplicati.Library.Main.Database.LocalDeleteDatabase-SmallVolumeCount]: Found 2 small volumes(s) with a total size of 8.89 MB
2023-01-09 08:51:54 -05 - [Verbose-Duplicati.Library.Main.Database.LocalDeleteDatabase-WastedSpaceVolumes]: Found 29 volume(s) with a total of 10.55% wasted space (1.28 GB of 12.15 GB)
2023-01-09 08:51:54 -05 - [Information-Duplicati.Library.Main.Database.LocalDeleteDatabase-CompactReason]: Compacting because there is 10.55% wasted space and the limit is 10%
...
2023-01-09 08:56:50 -05 - [Information-Duplicati.Library.Main.Operation.CompactHandler-CompactResults]: Downloaded 31 file(s) with a total size of 1.25 GB, deleted 62 file(s) with a total size of 1.25 GB, and compacted to 12 file(s) with a size of 264.32 MB, which reduced storage by 50 file(s) and 1,013.13 MB
```

The above is actually in log-file format. The live log is in reverse chronological order, and the Information level shows only the highlights. Verbose shows more, and there are even more detailed levels beyond that for those who need them.
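If you save a log file and want to pull out specific messages (for example the CompactReason or WastedSpaceVolumes lines), a small parser helps. This is a minimal sketch that assumes the line layout seen in the sample above, `<date> <time> <offset> - [<Level>-<Source>-<Tag>]: <message>`; the regex and the `LogEntry`/`parse_log_line` names are my own, not part of Duplicati.

```python
import re
from typing import NamedTuple, Optional

# Assumed layout of a Duplicati log-file line, inferred from the sample above:
# "<date> <time> <utc offset> - [<Level>-<Source>-<Tag>]: <message>"
LOG_LINE = re.compile(
    r"^(?P<timestamp>\S+ \S+ \S+) - "
    r"\[(?P<level>[A-Za-z]+)-(?P<source>.+)-(?P<tag>[A-Za-z]+)\]: "
    r"(?P<message>.*)$"
)


class LogEntry(NamedTuple):
    timestamp: str
    level: str
    source: str
    tag: str
    message: str


def parse_log_line(line: str) -> Optional[LogEntry]:
    """Split one log-file line into its fields, or return None if it doesn't match."""
    m = LOG_LINE.match(line.strip())
    if m is None:
        return None
    return LogEntry(**m.groupdict())


sample = (
    "2023-01-09 08:51:54 -05 - [Information-Duplicati.Library.Main.Database."
    "LocalDeleteDatabase-CompactReason]: Compacting because there is 10.55% "
    "wasted space and the limit is 10%"
)
entry = parse_log_line(sample)
print(entry.level, entry.tag)  # Information CompactReason
```

Filtering on `tag` (for example `"WastedSpaceVolumes"`) then gives you just the lines that explain a compact decision.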

The 10% limit cited above is there because I set threshold=10 rather than the default 25, so compact runs more often and does less each time. That’s what people do when they’re trying to stay below a free download limit, i.e. 1 GB/day.

meaning even a small portion of that needing compaction will cause a large download. The only alternative to letting compact run is not letting it run, which means the storage used (and charged for) grows without limit. A compromise might be to let compact run, but either lower the threshold or expect a large download.

Not very relevant. Compact is driven not by adding data, but by existing data aging away over time. When your retention policy deletes a backup version, any data that isn’t in some other version becomes waste. Please read these:

Compacting files at the backend

The COMPACT command

no-auto-compact

threshold

This value is a percentage used on each volume and the total storage.

which means that hitting the default 25% sometimes triggers a space inspection of your quite large backup, potentially causing a lot of activity. The 14 GB that bothers you is less than 1% of it, while your trigger is 25%. Setting threshold to a very low value can cause problems, but you might want to lower the setting somewhat.
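To make the "waste comes from deleted versions" and "percentage on each volume and the total" points concrete, here is a toy model. It is a hypothetical simplification, not Duplicati’s actual code: `Volume`, `wasted_bytes`, and `compact_candidates` are invented names, and the real decision also weighs things like small volumes and fully deletable volumes (see the log lines above).

```python
from dataclasses import dataclass


# Hypothetical, simplified model (not Duplicati's actual code): a remote volume
# holds blocks, and a block becomes waste once no remaining version uses it.
@dataclass
class Volume:
    name: str
    blocks: dict[str, int]  # block id -> size in bytes (assumed non-empty)


def wasted_bytes(volume: Volume, live_blocks: set[str]) -> int:
    """Bytes in this volume that no remaining backup version references."""
    return sum(size for block, size in volume.blocks.items() if block not in live_blocks)


def compact_candidates(
    volumes: list[Volume], versions: dict[str, set[str]], threshold_pct: float
) -> tuple[float, list[str]]:
    """Return (total waste %, names of volumes whose own waste % exceeds threshold_pct)."""
    live = set().union(*versions.values())  # blocks still used by some version
    total_size = sum(sum(v.blocks.values()) for v in volumes)
    total_waste = sum(wasted_bytes(v, live) for v in volumes)
    over_threshold = [
        v.name
        for v in volumes
        if 100 * wasted_bytes(v, live) / sum(v.blocks.values()) > threshold_pct
    ]
    return 100 * total_waste / total_size, over_threshold


# v1 and v2 share blocks "b" and "c"; "a" is only in v1, "d" only in v2.
volumes = [Volume("vol-A", {"a": 40, "b": 60}), Volume("vol-B", {"c": 50, "d": 50})]
versions = {"v1": {"a", "b", "c"}, "v2": {"b", "c", "d"}}

del versions["v1"]  # retention deletes the older version, so "a" becomes waste
total_pct, picked = compact_candidates(volumes, versions, threshold_pct=25)
print(total_pct, picked)  # 20.0 ['vol-A']
```

Here the total waste (20%) is under a 25% threshold while vol-A alone is 40% waste, which is why one percentage applied both per volume and overall can give different answers.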

If you like, you could set it to a larger value, then run Compact now while watching the live log. When I tested that, the Information level didn’t say enough, so I switched to Verbose and got these:

If your wasted space is above 25%, that explains why it’s compacting. The volume count is probably the number of volumes over the threshold in the per-volume view. I’m less certain of that, though it seems very likely.

An alternative test would be to set no-auto-compact to see whether the backup runs, possibly deletes versions, and finishes. However, you can’t leave it that way forever unless you don’t mind running up a very large storage bill.

Verifying backend files

The TEST command

A sample consists of 1 dlist, 1 dindex, 1 dblock.

It also appears in the job log as the Test phase, and in the Complete log as Test results, which names the actual files.

How are you seeing the download amount, and what does the current file reference mean? Is this in the live log? Compact has a very recognizable pattern: a bunch of dblock downloads, then a dblock upload that consolidates the downloaded dblock files that had too much waste (I think a dindex upload goes with that dblock upload), then many deletes of most of the just-downloaded dblock files and their associated dindex files.
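If you have a list of remote operations (for example transcribed from the live log), the pattern above can be checked mechanically. This is a rough heuristic sketch: the `(operation, filename)` event format, the `"get"`/`"put"`/`"delete"` labels, the shortened file names, and the "at least 2 downloads" cutoff are all hypothetical choices of mine; only the dblock/dindex/dlist naming comes from Duplicati’s remote files.

```python
from collections import Counter


def file_kind(name: str) -> str:
    """Classify a remote file by the dblock/dindex/dlist marker in its name."""
    for kind in ("dblock", "dindex", "dlist"):
        if f".{kind}." in name:
            return kind
    return "other"


def looks_like_compact(events: list[tuple[str, str]]) -> bool:
    """Heuristic with hypothetical cutoffs: several dblock downloads, at least one
    dblock upload, and deletion of some of the downloaded dblocks (order not checked)."""
    counts = Counter((op, file_kind(name)) for op, name in events)
    downloaded = {n for op, n in events if op == "get" and file_kind(n) == "dblock"}
    deleted = {n for op, n in events if op == "delete"}
    return (
        counts[("get", "dblock")] >= 2
        and counts[("put", "dblock")] >= 1
        and bool(downloaded & deleted)
    )


# Hypothetical event list; real names carry long random hex ids.
events = [
    ("get", "duplicati-b1.dblock.zip.aes"),
    ("get", "duplicati-b2.dblock.zip.aes"),
    ("put", "duplicati-b3.dblock.zip.aes"),
    ("put", "duplicati-i3.dindex.zip.aes"),
    ("delete", "duplicati-b1.dblock.zip.aes"),
    ("delete", "duplicati-i1.dindex.zip.aes"),
    ("delete", "duplicati-b2.dblock.zip.aes"),
    ("delete", "duplicati-i2.dindex.zip.aes"),
]
print(looks_like_compact(events))  # True
```

A plain backup, by contrast, is mostly uploads with no downloads, so it won’t match.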

I haven’t tried cancelling in the middle. I’m glad the backup seems to have survived, but the stats seem to be missing. They’re usually result statistics produced at the end. Compact never got to the end, so maybe that explains the zeros.

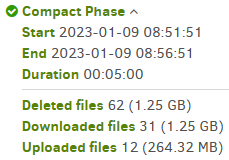

Here’s a more typical log and Complete log:

```json
"CompactResults": {
  "DeletedFileCount": 62,
  "DownloadedFileCount": 31,
  "UploadedFileCount": 12,
  "DeletedFileSize": 1339502534,
  "DownloadedFileSize": 1337742531,
  "UploadedFileSize": 277154502,
  "Dryrun": false,
  "VacuumResults": null,
  "MainOperation": "Compact",
  "ParsedResult": "Success",
  "Version": "2.0.6.104 (2.0.6.104_canary_2022-06-15)",
  "EndTime": "2023-01-09T13:56:51.7248468Z",
  "BeginTime": "2023-01-09T13:51:51.5633202Z",
  "Duration": "00:05:00.1615266",
  "MessagesActualLength": 0,
  "WarningsActualLength": 0,
  "ErrorsActualLength": 0,
  "Messages": null,
  "Warnings": null,
  "Errors": null,
  "BackendStatistics": {
    "RemoteCalls": 116,
    "BytesUploaded": 328322339,
    "BytesDownloaded": 1391097722,
    "FilesUploaded": 16,
    "FilesDownloaded": 34,
    "FilesDeleted": 64,
    "FoldersCreated": 0,
    "RetryAttempts": 0,
    "UnknownFileSize": 111792,
    "UnknownFileCount": 1,
    "KnownFileCount": 469,
    "KnownFileSize": 9171592353,
    "LastBackupDate": "2023-01-09T08:50:01-05:00",
    "BackupListCount": 62,
    "TotalQuotaSpace": 0,
    "FreeQuotaSpace": 0,
    "AssignedQuotaSpace": -1,
    "ReportedQuotaError": false,
    "ReportedQuotaWarning": false,
    "MainOperation": "Backup",
    "ParsedResult": "Success",
    "Version": "2.0.6.104 (2.0.6.104_canary_2022-06-15)",
    "EndTime": "0001-01-01T00:00:00",
    "BeginTime": "2023-01-09T13:50:00.0141386Z",
    "Duration": "00:00:00",
    "MessagesActualLength": 0,
    "WarningsActualLength": 0,
    "ErrorsActualLength": 0,
    "Messages": null,
    "Warnings": null,
    "Errors": null
  }
},
```

You can see that compact downloaded 31 of the 34 files downloaded in total. The other 3 were the Test sample (1 dlist, 1 dindex, 1 dblock).
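The same subtraction can be done directly on the Complete log. This sketch keeps only the fields it needs from the log above, with the same nesting (BackendStatistics inside CompactResults); attributing the difference to the Test sample follows the reasoning in the text rather than anything the log states.

```python
import json

# Relevant fields copied from the Complete log above, keeping its nesting
# (BackendStatistics sits inside CompactResults); everything else is omitted.
complete_log = json.loads("""
{
  "CompactResults": {
    "DownloadedFileCount": 31,
    "DownloadedFileSize": 1337742531,
    "BackendStatistics": {"FilesDownloaded": 34, "BytesDownloaded": 1391097722}
  }
}
""")

compact = complete_log["CompactResults"]
backend = compact["BackendStatistics"]

# Whatever the backend downloaded beyond what compact reports belongs to other
# phases; per the text, that's the Test sample (1 dlist, 1 dindex, 1 dblock).
other_files = backend["FilesDownloaded"] - compact["DownloadedFileCount"]
other_bytes = backend["BytesDownloaded"] - compact["DownloadedFileSize"]
print(other_files, other_bytes)  # 3 53355191
```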

EDIT:

If you conclude that it’s doing a compact, there’s probably no direct path to doing many smaller compacts, because going straight to a low threshold will make the initial compact even larger than it is at your current setting. Lots of smaller compacts might also end up as a larger total download bill, so keep that in mind as well.

EDIT 2:

Compact - Limited / Partial might make frequent smaller compacts more workable. Currently, setting threshold low for the overall backup also sets it low for each volume, so more volumes could get compacted. There are other feature requests for a time limit and a bandwidth limit, along with concerns about compact falling behind.

EDIT 3:

Changing threshold between different values that are higher than the current waste changes the Compact now stats. Here are 10 vs. 95:

Found 19 volume(s) with a total of 6.71% wasted space (815.07 MB of 11.86 GB)

Found 14 volume(s) with a total of 5.47% wasted space (664.72 MB of 11.86 GB)

Multiplying the volume count by your remote volume size “might” tell you how much downloading to expect.
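That multiplication is easy to script against the Verbose wasted-space message. A minimal sketch: the regex matches the message text shown above, and the 50 MB default is an assumption (it’s the volume size used in the example below); substitute your own remote volume size. Since volumes can be smaller than the configured size, treat the result as a rough estimate.

```python
import re

# Matches the Verbose "WastedSpaceVolumes" message text shown above.
WASTE_LINE = re.compile(r"Found (\d+) volume\(s\) with a total of [\d.]+% wasted space")


def estimate_download_mb(message: str, volume_size_mb: float = 50) -> float:
    """Rough compact download estimate: each flagged volume fetched at full size.
    volume_size_mb is an assumption; use your own remote volume size."""
    m = WASTE_LINE.search(message)
    if m is None:
        raise ValueError("not a wasted-space message")
    return int(m.group(1)) * volume_size_mb


for msg in (
    "Found 19 volume(s) with a total of 6.71% wasted space (815.07 MB of 11.86 GB)",
    "Found 14 volume(s) with a total of 5.47% wasted space (664.72 MB of 11.86 GB)",
):
    print(estimate_download_mb(msg))  # 950, then 700
```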

EDIT 4:

The sentence above still looks reasonable. The current value is below, and extrapolating to 10% gives a 1.2 GB download, compared to the 1.25 GB actually downloaded in the typical compact posted above. Extrapolation is easier for this backup because each run generates a fairly fixed amount of new, unique data from file changes, and as retention removes versions, this leads to a fairly steady accumulation of wasted space.

Jan 16, 2023 10:05 AM: Found 23 volume(s) with a total of 8.43% wasted space (1.02 GB of 12.06 GB)

Linear extrapolation: 1.02 GB * 10% / 8.43% = 1.21 GB

Size based on 50 MB remote volumes: 23 * 50 MB = 1.15 GB, though seemingly not all of it is wasted space.

The download will be larger than the wasted space because the volumes also contain still-in-use data that has to be compacted.

Volumes that have become 100% wasted space are simply deleted, since there’s nothing useful in them.
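The linear extrapolation above can be written as a one-line function. This is a sketch assuming, as the text does, that waste accumulates steadily while the total backup size stays roughly constant; `extrapolate_waste_gb` is a made-up name. Note it estimates waste, and per the text the actual download will be somewhat larger.

```python
def extrapolate_waste_gb(current_waste_gb: float, current_pct: float, target_pct: float) -> float:
    """Linear extrapolation: assumes waste keeps growing steadily while the total
    backup size stays roughly constant, so waste scales with the percentage."""
    return current_waste_gb * target_pct / current_pct


# Jan 16 figures from above: 1.02 GB wasted at 8.43%, threshold 10%.
print(round(extrapolate_waste_gb(1.02, 8.43, 10), 2))  # 1.21

# Sanity check: 1.02 GB at 8.43% implies a total of about 12.1 GB,
# close to the 12.06 GB in the log line.
print(round(1.02 / 0.0843, 1))  # 12.1
```

Equivalently, waste at the threshold is roughly threshold × total size (10% of 12.06 GB is about 1.2 GB), which is why the two approaches agree.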

So that’s how to forecast how much a compact might do, so you can plan when to run it…