I recently set up a backup job to Amazon Drive (several hundred GB). For the first 300 GB or so, the upload went fine, but since this morning the progress bar has not moved at all. For now, I am assuming that this is a problem on Amazon’s side (perhaps some kind of rate limiting). My issue is that even if I wanted to report this as a Duplicati issue, the Duplicati UI gives me nothing to go on. Here is what I did:

- I checked the logs: nothing (last entry is from two days ago, and unrelated).

- I tried the live log at the Information level: nothing.

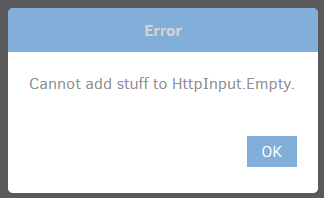

- I tried to stop the job by clicking the X in the progress bar. It asked me whether I wanted to wait until the current file was uploaded or stop immediately. I chose to wait for the upload to finish, but since nothing was being uploaded, I changed my mind and chose “Stop immediately” and got this:

- After I clicked OK, either it started stopping the process anyway or I tried stopping it again, this time without an error message (I think it was the latter).

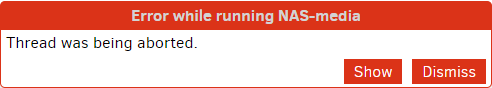

- It then gave me this “Thread was being aborted” error:

I have no idea what that means, or whether “was being aborted” means that it crashed/aborted itself or that I aborted it. The latter should not produce an error message, because I requested the abort; if there has to be an error message, it should at least be clear that this was a user-requested abort.

- When I clicked show, it showed me this:

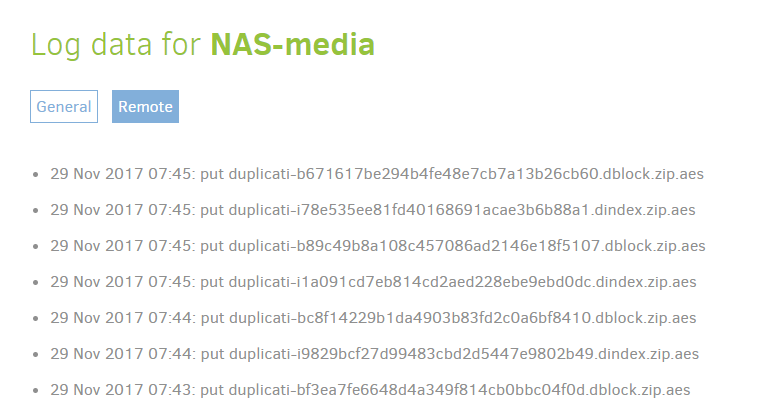

- When I clicked on General, I get some information for the first time:

This seems to indicate that the upload stopped working this morning at 7:45. That is more than 12 hours ago, but Duplicati did not seem to see any problem. At least it did not show any warning. And I still have no idea why the upload stopped.

- Now I can also find some new entries in the general log (the ones I checked in point 1 above):

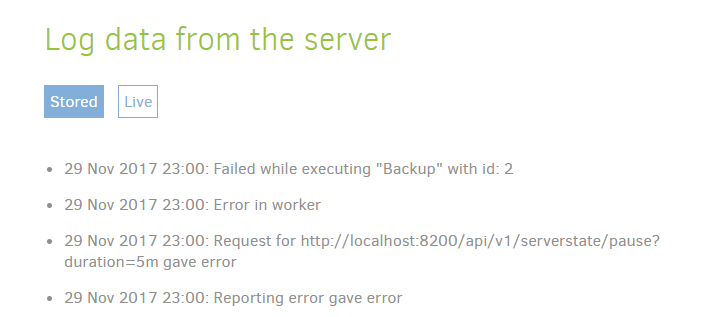

Here are the details:

29 Nov 2017 23:00: Failed while executing "Backup" with id: 2

System.Threading.ThreadAbortException: Thread was being aborted.

at Duplicati.Library.Main.Operation.BackupHandler.HandleFilesystemEntry(ISnapshotService snapshot, BackendManager backend, String path, FileAttributes attributes)

at Duplicati.Library.Main.Operation.BackupHandler.RunMainOperation(ISnapshotService snapshot, BackendManager backend)

at Duplicati.Library.Main.Operation.BackupHandler.Run(String[] sources, IFilter filter)

at Duplicati.Library.Main.Controller.<>c__DisplayClass16_0.<Backup>b__0(BackupResults result)

at Duplicati.Library.Main.Controller.RunAction[T](T result, String[]& paths, IFilter& filter, Action`1 method)

at Duplicati.Library.Main.Controller.Backup(String[] inputsources, IFilter filter)

at Duplicati.Server.Runner.Run(IRunnerData data, Boolean fromQueue)

29 Nov 2017 23:00: Error in worker

System.Threading.ThreadAbortException: Thread was being aborted.

at Duplicati.Server.Runner.Run(IRunnerData data, Boolean fromQueue)

at Duplicati.Library.Utility.WorkerThread`1.Runner()

29 Nov 2017 23:00: Request for http://localhost:8200/api/v1/serverstate/pause?duration=5m gave error

System.InvalidOperationException: Cannot add stuff to HttpInput.Empty.

at HttpServer.HttpInput.Add(String name, String value)

at Duplicati.Server.WebServer.RESTHandler.DoProcess(RequestInfo info, String method, String module, String key)

29 Nov 2017 23:00: Reporting error gave error

System.ObjectDisposedException: Cannot write to a closed TextWriter.

at System.IO.__Error.WriterClosed()

at System.IO.StreamWriter.Flush(Boolean flushStream, Boolean flushEncoder)

at Duplicati.Server.WebServer.RESTHandler.DoProcess(RequestInfo info, String method, String module, String key)

It would be great if Duplicati could become a bit more talkative about failing backups, for example by warning when an upload has made no progress for a long time.
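To illustrate the kind of check I have in mind, here is a minimal Python sketch of a stall watchdog. This is not Duplicati code and says nothing about how Duplicati tracks uploads internally. The class name `StallWatchdog`, its fields, and the 30-minute threshold are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class StallWatchdog:
    """Hypothetical helper: flags a transfer whose byte counter stops advancing."""

    threshold: timedelta                      # how long without progress counts as a stall
    last_bytes: int = 0                       # highest byte count seen so far
    last_progress: Optional[datetime] = None  # time of the last sample that showed progress

    def update(self, bytes_done: int, now: datetime) -> Optional[str]:
        """Record a progress sample; return a warning string if the transfer looks stalled."""
        if self.last_progress is None or bytes_done > self.last_bytes:
            # First sample, or the counter advanced: remember it and stay quiet.
            self.last_bytes = bytes_done
            self.last_progress = now
            return None
        idle = now - self.last_progress
        if idle >= self.threshold:
            return (
                f"No upload progress for {idle} "
                f"(last progress at {self.last_progress:%H:%M})"
            )
        return None


# Usage with made-up numbers: the counter stops advancing after 07:45.
watchdog = StallWatchdog(threshold=timedelta(minutes=30))
start = datetime(2017, 11, 29, 7, 45)
watchdog.update(1000, start)
warning = watchdog.update(1000, start + timedelta(hours=12))
if warning:
    print(warning)
```

Something along these lines, fed from whatever progress counter the uploader already maintains, would have been enough to surface a warning in the UI or the log hours before I noticed the stall myself.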

BTW: the next backup job in the queue automatically started as scheduled and is uploading fine at the moment.