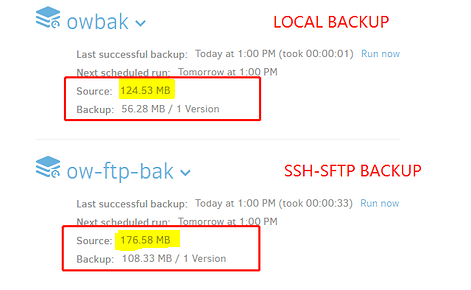

I back up the same source directory with two jobs, one to a local folder and one to SFTP. The SFTP backup is more than 50 MB larger. Why?

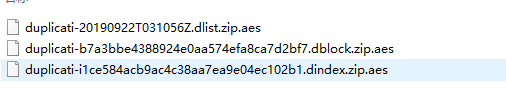

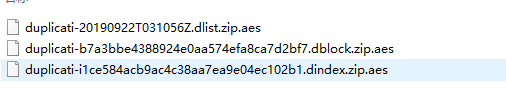

local files

You are currently running Duplicati - 2.0.4.23_beta_2019-07-14

There is something different about the source folders for these two backup jobs… One is larger than the other, which is probably what makes its backup larger.

The source directory of the two backup tasks is the same, so my question is: why is the remote backup more than 50 MB larger?

The sources are not the same, or at a minimum the file sizes in the source changed between the time you did one backup and the other.

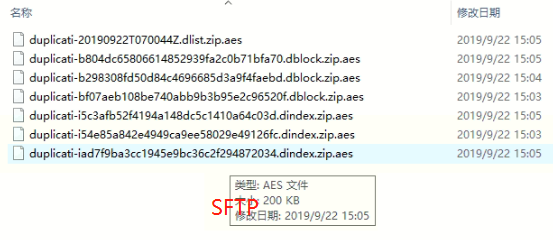

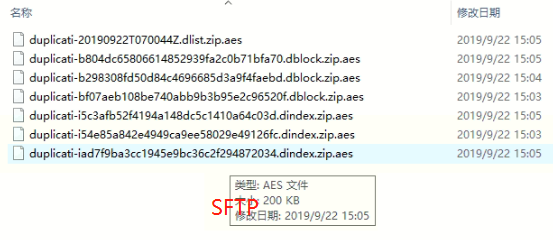

See the highlighted numbers in the screenshots above.

If the source was 100% identical then the backup size would be the same, too.

Are you sure you didn’t exclude anything in the local backup (hidden, temp or system files, files larger than x)?

I saw that there are two dblock.zip and dindex.zip files (about 50 MB) in the folder backed up over SFTP. Their modification times are within one minute of each other, but I only ran one backup manually. The file from the local backup job is a little over 50 MB, so I think the program backed things up twice. I set these jobs up just for testing, so I didn’t change any files during the backup process!

I excluded .git and .idea, and I added the exclude-folder options for .git and .idea to both tasks. I made sure that the parameters used to create the two tasks are the same.

Now I remember: I thought I had chosen the same kind of exclusion in both tasks, but I just checked the .git exclusion, and in one task I had only chosen to exclude files, not folders. This may be my mistake, sorry!
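To see how much a folder exclusion changes the amount of data a job reads, the source size can be measured with and without the excluded folders. The following is only a rough sketch in Python: the helper name `tree_size` is made up for illustration, and it matches folder names exactly, which is an assumption and not how Duplicati evaluates its own filters.

```python
import os

def tree_size(root, excluded_dirs=()):
    """Sum the sizes of regular files under root.

    Any directory whose name appears in excluded_dirs is skipped entirely
    (hypothetical helper; exact name match only, not Duplicati's filter syntax).
    """
    total = 0
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune excluded folders in place so os.walk never descends into them.
        dirnames[:] = [d for d in dirnames if d not in excluded_dirs]
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Skip symlinks so a link target is not counted here.
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total
```

Calling `tree_size(src)` and `tree_size(src, {".git", ".idea"})` on the same source folder and subtracting the results gives the number of bytes that the two folders contribute. If that difference is close to the gap between the two backups, a mismatched exclusion is the likely cause.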

In addition, I would like to ask: is it not possible to use Duplicati on a Linux system without a graphical interface?

Those dblock.zip and dindex.zip files are Duplicati's own backup files. If you choose to back up your data into the source folder itself, I’m not surprised that the source folder grows. My guess is that the SSH-SFTP backup job is backing up the files written by the local backup job.
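A quick way to test this guess is to check whether the local job's destination folder lies inside the source folder. This is a minimal sketch in Python; the helper name `is_inside` and any paths passed to it are hypothetical and would need to be replaced with the real source and destination of the jobs.

```python
import os

def is_inside(child, parent):
    """Return True if child is parent itself or lies somewhere below it."""
    child = os.path.realpath(child)
    parent = os.path.realpath(parent)
    try:
        # commonpath compares whole path components, so "/a/bc" is not inside "/a/b".
        return os.path.commonpath([child, parent]) == parent
    except ValueError:
        # Raised on Windows when the two paths are on different drives.
        return False
```

If `is_inside(local_destination, source_folder)` returns `True`, every other job that reads the same source folder will also pick up the dblock and dindex files, unless the destination is excluded from the source.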