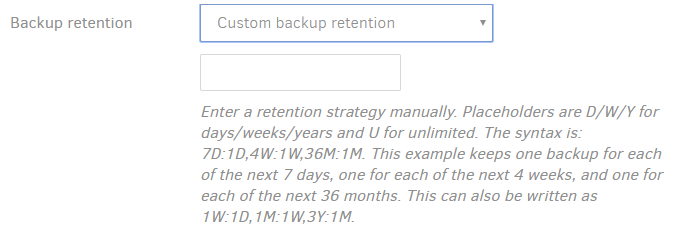

That’s correct. You’d need different jobs, and I can’t comment much on what you want to put in each, but everybody is suggesting custom retention, and I’m not seeing any sign that you’re considering it. You can keep a version indefinitely without keeping ALL versions. It’s progressive thinning, announced as:

“New retention policy deletes old backups in a smart way”, and, because the manual hasn’t caught up, done as:

See the earlier post for a specific string and how to interpret it. Also see my caveat on things falling into a gap.
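If it helps to picture what progressive thinning does, here is a rough Python sketch. It is only an illustration of the general idea, not the tool’s actual code or option syntax: the `thin` function, the rule format (pairs of timeframe and minimum spacing), and the sample numbers are all made up for this example. Each backup is matched to the shortest timeframe that covers its age, and within that timeframe a backup is kept only if it is far enough from the previously kept one.

```python
from datetime import datetime, timedelta

def thin(backups, rules, now):
    """Return the backup timestamps a thinning policy would keep.

    backups: list of datetime objects
    rules:   list of (timeframe, interval) pairs of timedelta;
             a timeframe of None means "unlimited" (hypothetical format)
    """
    # Shortest timeframe first; the unlimited rule (None) sorts last.
    ordered = sorted(rules, key=lambda r: (r[0] is None, r[0] or timedelta(0)))
    kept = []
    last_kept = {}  # rule index -> timestamp of the last backup kept under it
    for ts in sorted(backups):  # walk from oldest to newest
        age = now - ts
        # Pick the first rule whose timeframe covers this backup's age.
        idx = next(
            (i for i, (frame, _) in enumerate(ordered)
             if frame is None or age <= frame),
            None,
        )
        if idx is None:
            continue  # older than every timeframe, so it is dropped
        interval = ordered[idx][1]
        prev = last_kept.get(idx)
        if prev is None or ts - prev >= interval:
            kept.append(ts)
            last_kept[idx] = ts
    return kept

# Made-up example: 60 daily backups, thinned to daily for a week,
# weekly for four weeks, and roughly monthly forever after that.
now = datetime(2024, 3, 1)
daily = [now - timedelta(days=i) for i in range(60)]
rules = [
    (timedelta(days=7), timedelta(days=1)),
    (timedelta(days=28), timedelta(days=7)),
    (None, timedelta(days=30)),
]
for ts in thin(daily, rules, now):
    print(ts.date())
```

In this sketch, dropping the unlimited rule means anything older than the longest timeframe is deleted, so the last rule is what lets you keep old versions indefinitely without keeping all of them.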

I think it’s the same as unticking, meaning it’s removed from the source data on new backups but not purged from old backups. To remove it from the old backups as well, you need to purge manually. You may face some challenges splitting the existing backup. While you can purge files, I don’t think there’s a way to move the entire history of the files into a separate backup, and deduplication isn’t done across different backup jobs, so total space use goes up.