I will look into this, specifically blocksize. I want to reduce the number of dup-* files on my remote backend. The source data is approximately 150GB (this is a test run for my bigger multi-TB backup). I also have a local drive that I back up to daily, and the local sqlite database for this same data is 400+MB, so it is definitely worth looking into. Thanks for this info.

I did this previously and received the same results. I did it again just now and here are some screenshots:

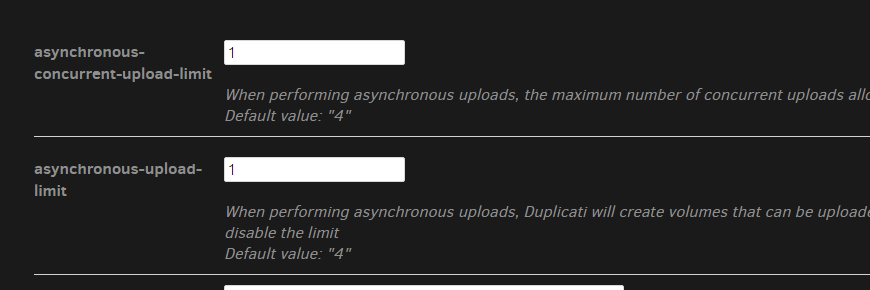

Job settings:

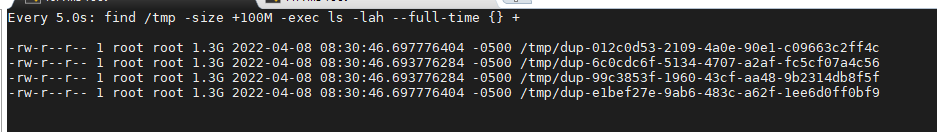

Temp dir in docker container: (I also included the --full-time parameter on this one)

All these files are created after I kick off the job, and these are the same dup-* files that get uploaded eventually to the remote backend.

I also want to point out this thread from a person trying to achieve the same thing: "Limit dup- files generated count during backup"

I did not report the original issue, although the latest comment there is mine. Since I’ve seen other threads where people are successful with this option, I thought I’d reach out here to see if I’m doing something wrong.

I am encrypting these backups, so I'm not sure whether one file is the unencrypted archive and the other is the encrypted copy. Even so, getting 4 files at the same time still wouldn’t make sense, since it would need to create the archive completely before encrypting it.

Additionally, this docker container image has dd and od on it, but I am not sure of the correct command to run to check the bytes on each file.
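If checking the bytes means looking at the first few bytes of each file, my best guess is something like the sketch below. It uses only dd and od. `TMP_DIR` and the `first_bytes` helper are names I made up for illustration, and `/tmp` is only a placeholder for wherever the job's temp dir actually is. As far as I understand, a zip archive starts with `PK` and an AES Crypt-format file starts with `AES`, so that should show which files are encrypted, but I have not confirmed this is the right check.

```shell
# Print the first N bytes (default 4) of a file as characters / octal escapes.
# Only dd and od are used, since those are the tools available in the image.
first_bytes() {
  dd if="$1" bs=1 count="${2:-4}" 2>/dev/null | od -c
}

# TMP_DIR is an assumption: point it at the temp dir the backup job uses.
TMP_DIR="${TMP_DIR:-/tmp}"

for f in "$TMP_DIR"/dup-*; do
  [ -f "$f" ] || continue   # skip when the glob matches nothing
  echo "== $f"
  first_bytes "$f" 4
done
```

Running it a couple of times while the job is active should show whether each dup-* file is still a plain archive or already encrypted.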